Are Tech Companies Responsible When Algorithms Radicalize Mass Shooters?

The Role of Social Media Algorithms in Echo Chambers and Radicalization

Social media algorithms, designed to keep users engaged, can inadvertently become powerful tools for radicalization. This happens through several key mechanisms.

Echo Chambers and Filter Bubbles

Algorithms create echo chambers by reinforcing existing beliefs. This happens through:

- Increased exposure to biased content: Algorithms prioritize content aligning with a user's past behavior, leading to a skewed information diet.

- Limited exposure to diverse viewpoints: Contradictory perspectives are often filtered out, creating an insulated environment where extremist views go unchallenged.

- Reinforcement of pre-existing biases: Constant exposure to similar viewpoints strengthens existing biases, making users more susceptible to radicalization.

- Difficulty in discerning credible information: The sheer volume of information, coupled with the lack of clear signals indicating credibility, makes it difficult to identify reliable sources.
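The narrowing effect described above can be illustrated with a toy ranker. This is a minimal sketch, not any platform's actual system: the one-dimensional "stance" score, the `rank_by_affinity` function, and the sample values are all assumptions made for illustration.

```python
from statistics import mean


def rank_by_affinity(history, catalog, k=3):
    """Return the k catalog items whose stance is closest to the
    average stance of what the user engaged with before."""
    center = mean(history)
    return sorted(catalog, key=lambda stance: abs(stance - center))[:k]


# Hypothetical stance scores on a -1.0 .. 1.0 axis (assumed for illustration).
catalog = [-1.0, -0.5, 0.0, 0.5, 0.6, 0.7, 1.0]
history = [0.5, 0.7]  # items the user interacted with in the past

feed = rank_by_affinity(history, catalog)

catalog_spread = max(catalog) - min(catalog)
feed_spread = max(feed) - min(feed)
print(f"catalog spread: {catalog_spread:.1f}, feed spread: {feed_spread:.1f}")
```

In this toy setup the feed covers only a small slice of the viewpoints available in the catalog, which is the filter-bubble pattern in miniature. Real ranking systems use many more signals, so the sketch shows the mechanism rather than its real-world size.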

Recommendation Systems and Extremist Content

Recommendation systems, designed to suggest relevant content, can inadvertently promote extremist ideologies. This occurs via:

- Personalized content feeds: Algorithms tailor content based on individual preferences, potentially leading users down a rabbit hole of increasingly extreme material.

- Targeted advertising of extremist groups: Extremist groups can use targeted advertising to reach susceptible individuals, bypassing traditional content moderation efforts.

- Algorithm-driven content suggestions: "Related videos" or "suggested posts" can lead users from relatively innocuous content to increasingly radical material.

- Lack of sufficient content moderation: The scale of online content makes it incredibly difficult to monitor and remove extremist material effectively.
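The "rabbit hole" dynamic can be sketched as a simple loop. The sketch below rests on a loudly stated assumption: that predicted engagement rises with how intense a piece of content is. The integer intensity scale, the `next_recommendation` function, and the `window` parameter are hypothetical and do not describe any real recommender.

```python
def next_recommendation(current, catalog, window=2):
    """Pick, among items 'related' to the current one (within `window`
    intensity points), the one with the highest assumed engagement.

    Assumption: engagement grows with intensity, so the most intense
    related item wins.
    """
    related = [item for item in catalog if abs(item - current) <= window]
    return max(related)


# Hypothetical intensity scale: 0 = innocuous, 10 = most extreme.
catalog = list(range(0, 11))

path = [1]  # the user starts on fairly innocuous content
for _ in range(5):
    path.append(next_recommendation(path[-1], catalog))

print(path)
```

Each individual suggestion stays close to what the user just watched, yet under this assumption the sequence as a whole drifts steadily toward the extreme end of the catalog.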

The Spread of Misinformation and Conspiracy Theories

Algorithms significantly accelerate the spread of misinformation and conspiracy theories, which can fuel violence. This is facilitated by:

- Viral spread of false narratives: Algorithms amplify engaging content, regardless of its truthfulness, leading to the rapid dissemination of harmful narratives.

- Lack of fact-checking mechanisms: Many platforms lack robust fact-checking mechanisms, allowing false information to proliferate unchecked.

- Amplification of harmful narratives: Algorithms can amplify even relatively minor instances of harmful content, giving them disproportionate reach and influence.

- Difficulty in identifying and combating misinformation: The dynamic and ever-evolving nature of online information makes it difficult to effectively track and counter misinformation campaigns.
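The point that engaging content is amplified regardless of truthfulness can be made concrete with a short sketch. The `Post` fields, the engagement numbers, and the `accurate` label are invented for illustration; the only claim is that a ranker sorting purely on engagement never consults accuracy.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    engagement: int  # hypothetical count of clicks and shares
    accurate: bool   # hypothetical fact-check label, never read by the ranker


def rank_by_engagement(posts):
    """Order posts by engagement alone, highest first."""
    return sorted(posts, key=lambda post: post.engagement, reverse=True)


posts = [
    Post("Verified report", 120, True),
    Post("Sensational rumor", 900, False),
    Post("Expert explainer", 300, True),
]

ranked = rank_by_engagement(posts)
for post in ranked:
    print(post.engagement, post.title, "(accurate)" if post.accurate else "(inaccurate)")
```

Because the sort key ignores `accurate`, the inaccurate but highly shared post lands at the top. A ranker that wanted to counter this would have to fold a credibility signal into the score, which in turn requires the fact-checking capacity discussed above.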

Legal and Ethical Responsibilities of Tech Companies

The legal and ethical landscape surrounding algorithm-driven radicalization is complex and evolving.

Section 230 and its Limitations

Section 230 of the Communications Decency Act provides significant legal protection to tech companies from liability for user-generated content. However, its applicability in cases of algorithm-driven radicalization is intensely debated:

- Protection from liability for user-generated content: Section 230 shields platforms from being held responsible for content created by their users.

- Arguments for and against reforming Section 230: Reform advocates argue that Section 230 allows platforms to escape responsibility for harmful content facilitated by their algorithms. Opponents fear that reform could stifle free speech.

- Balancing free speech with responsibility: Striking a balance between protecting free speech and holding tech companies accountable for harmful content remains a major challenge.

Ethical Considerations and Corporate Social Responsibility

Beyond legal obligations, tech companies have ethical responsibilities to prevent the misuse of their platforms:

- Prioritizing user safety: Protecting users from harm should be a paramount concern for tech companies.

- Implementing robust content moderation strategies: This involves investing in both human and AI-driven moderation systems to identify and remove extremist content.

- Investing in AI-driven detection of extremist content: Sophisticated AI tools can help identify subtle signs of radicalization that might be missed by human moderators.

- Promoting media literacy: Empowering users to critically evaluate online information is crucial in combating misinformation and extremist ideologies.

The Difficulty of Content Moderation at Scale

Moderating vast amounts of content is a monumental task, presenting significant challenges:

- Human resource limitations: The sheer volume of content requires an immense workforce, which is costly and difficult to manage.

- Technological limitations: Even the most sophisticated AI systems struggle to identify subtle signs of radicalization and extremist content reliably.

- The "arms race" between extremists and content moderators: Extremists constantly adapt their tactics to evade detection, creating an ongoing challenge for content moderators.

- Challenges in defining and identifying extremist content: Determining what constitutes "extremist" content is often subjective and context-dependent.

The Complex Interplay of Factors Contributing to Violence

Algorithm-driven radicalization is only one piece of a much larger puzzle.

Beyond Algorithms: Mental Health and Societal Factors

Many other factors contribute to violence, and addressing them calls for a coordinated response:

- Mental health issues: Mental health problems are often cited as a contributing factor in mass shootings.

- Social inequality: Socioeconomic disparities and feelings of marginalization can fuel resentment and extremism.

- Political polarization: Increasingly divisive political climates can create an environment conducive to radicalization.

- The importance of holistic approaches to violence prevention: Addressing the complex root causes of violence requires a multi-faceted approach.

- The need for collaboration between tech companies, mental health professionals, and policymakers: Effective solutions require collaboration across various sectors.

The Need for a Multifaceted Approach

Addressing algorithm-driven radicalization requires a multi-pronged strategy involving:

- Improved content moderation policies: Tech companies need to invest in more effective and nuanced content moderation strategies.

- Enhanced media literacy education: Empowering individuals to critically evaluate online information is crucial.

- Strengthening mental health services: Improving access to mental healthcare is essential in addressing underlying factors that contribute to violence.

- Fostering more inclusive and tolerant online communities: Creating online spaces that promote dialogue and understanding is vital in countering extremism.

Conclusion

This article has explored the intricate relationship between algorithms, radicalization, and mass shootings. While tech companies bear significant responsibility for preventing the misuse of their platforms, it is crucial to acknowledge how many factors are involved. Addressing algorithm-driven radicalization requires a collaborative effort from various stakeholders, including policymakers, mental health professionals, and educational institutions.

We need a national conversation about the role of algorithms in radicalization. Let's demand greater transparency and accountability from tech companies regarding their algorithms and their impact on society. We must hold them responsible for preventing the use of their platforms to fuel violence, and explore solutions that reduce the risk of algorithms helping to radicalize mass shooters.

Featured Posts

-

Alcaraz Claims Monte Carlo Masters Title Despite Tough Competition

May 30, 2025

Alcaraz Claims Monte Carlo Masters Title Despite Tough Competition

May 30, 2025 -

Daniel Cormiers Heated Confrontation His Message To Jon Jones Team

May 30, 2025

Daniel Cormiers Heated Confrontation His Message To Jon Jones Team

May 30, 2025 -

Review Kawasaki Versys X 250 2025 Warna Baru Performa Tangguh

May 30, 2025

Review Kawasaki Versys X 250 2025 Warna Baru Performa Tangguh

May 30, 2025 -

Delving Into History The Baim Collections Timeless Treasures

May 30, 2025

Delving Into History The Baim Collections Timeless Treasures

May 30, 2025 -

Kawasaki Vulcan S 2025 Cruiser Macho Futuristik Meluncur Di Indonesia

May 30, 2025

Kawasaki Vulcan S 2025 Cruiser Macho Futuristik Meluncur Di Indonesia

May 30, 2025

Latest Posts

-

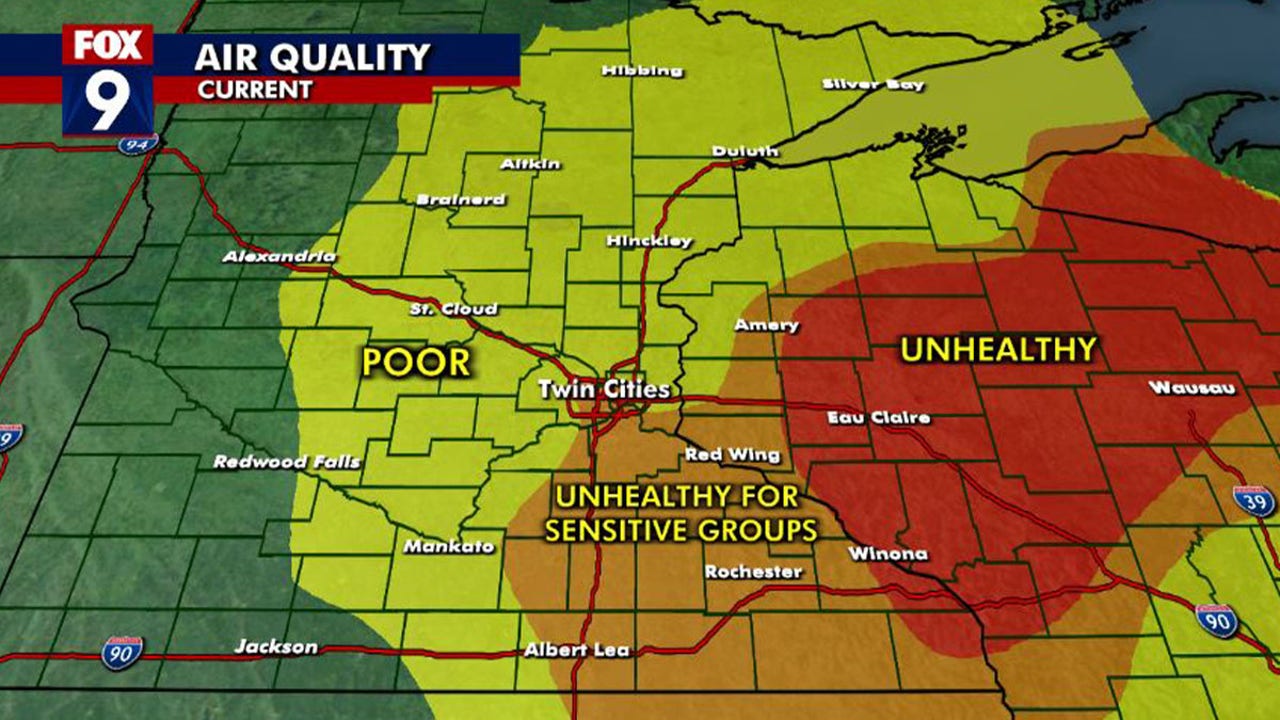

Health Impacts Of Canadian Wildfire Smoke On Minnesota

May 31, 2025

Health Impacts Of Canadian Wildfire Smoke On Minnesota

May 31, 2025 -

Dangerous Air Quality In Minnesota Due To Canadian Wildfires

May 31, 2025

Dangerous Air Quality In Minnesota Due To Canadian Wildfires

May 31, 2025 -

Canadian Wildfires Cause Dangerous Air In Minnesota

May 31, 2025

Canadian Wildfires Cause Dangerous Air In Minnesota

May 31, 2025 -

Eastern Manitoba Wildfires Rage Crews Struggle For Control

May 31, 2025

Eastern Manitoba Wildfires Rage Crews Struggle For Control

May 31, 2025 -

Homes Lost Lives Disrupted The Newfoundland Wildfire Crisis

May 31, 2025

Homes Lost Lives Disrupted The Newfoundland Wildfire Crisis

May 31, 2025