Exploring The Boundaries Of AI Learning: Guiding Principles For Responsible AI

Bias in AI Algorithms and Datasets

Bias in AI systems is a significant hurdle to responsible AI development, and it often stems from the data used to train the algorithms. If the training data reflects existing societal biases (whether related to gender, race, socioeconomic status, or other factors), the resulting AI system will likely perpetuate and even amplify those biases. This can lead to unfair or discriminatory outcomes and erode trust in the system.

Mitigating Algorithmic Bias

Addressing algorithmic bias requires a multi-pronged approach. Techniques like data augmentation, where synthetic data is generated to balance underrepresented groups, can help improve data representation. Furthermore, incorporating algorithmic fairness constraints during the model development process can actively mitigate bias. Careful dataset curation, involving rigorous scrutiny and pre-processing of data to identify and remove biased elements, is crucial.

- Examples of bias: Gender bias in facial recognition software, racial bias in loan application algorithms, socioeconomic bias in predictive policing models.

- Methods for bias detection: Statistical analysis of model outputs, fairness metrics (e.g., disparate impact, equal opportunity), and human review of model decisions.

- Importance of diverse development teams: Diverse teams bring varied perspectives and help identify and mitigate potential biases embedded in algorithms and data.
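The two fairness metrics named above can be made concrete with a short sketch. It assumes binary predictions (1 = favorable outcome) and a single group label per record; the function names and the toy data are illustrative, not taken from any particular fairness library.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Fraction of favorable (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact(y_pred, groups, unprivileged, privileged):
    """Ratio of selection rates; 1.0 means both groups are selected equally often.

    Assumes the privileged group has at least one favorable prediction.
    """
    rates = selection_rates(y_pred, groups)
    return rates[unprivileged] / rates[privileged]


def equal_opportunity_difference(y_true, y_pred, groups, unprivileged, privileged):
    """Difference in true positive rates; 0.0 means qualified members of
    both groups are recognized equally often.

    Assumes each group contains at least one truly positive record.
    """
    def true_positive_rate(group):
        preds_for_positives = [
            p for t, p, g in zip(y_true, y_pred, groups) if g == group and t == 1
        ]
        return sum(preds_for_positives) / len(preds_for_positives)

    return true_positive_rate(unprivileged) - true_positive_rate(privileged)


# Hypothetical toy data: five records from group "a", five from group "b".
y_true = [1, 1, 0, 1, 0, 1, 1, 0, 1, 0]
y_pred = [1, 1, 0, 1, 1, 1, 0, 0, 0, 0]
groups = ["a"] * 5 + ["b"] * 5

print("disparate impact:", disparate_impact(y_pred, groups, "b", "a"))
print("equal opportunity difference:",
      equal_opportunity_difference(y_true, y_pred, groups, "b", "a"))
```

A disparate impact ratio well below 1.0, or an equal opportunity difference far from 0.0, is a signal to investigate further rather than proof of discrimination; a commonly cited rule of thumb flags ratios below 0.8.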

Ensuring Data Representativeness

Reducing bias in AI systems requires diverse and representative datasets. This means actively seeking out and incorporating data from underrepresented groups so that the AI system is trained on a fair and accurate reflection of the real world.

- Strategies for obtaining representative data: Collaborating with diverse communities, using publicly available datasets with comprehensive demographic information, and designing data collection methods that specifically target underrepresented groups.

- Addressing data scarcity in underrepresented groups: Employing data augmentation and synthetic data generation to reduce data imbalances, and federated learning to train on data that cannot be gathered in one place.

- Ethical implications of data collection: Ensuring informed consent, data anonymization, and data security are paramount to ethical data collection practices.
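As a rough illustration of rebalancing, the sketch below uses random oversampling, which duplicates existing records from smaller groups until every group matches the largest one. This is a simpler stand-in for the augmentation and synthetic-generation techniques listed above, not an implementation of them; the record layout and the `oversample_to_balance` name are assumptions made for the example.

```python
import random
from collections import Counter, defaultdict


def oversample_to_balance(records, group_key, seed=0):
    """Return a new list in which every group has as many records as the largest.

    Smaller groups are padded by sampling their own records with replacement,
    so no new information is created, only the group proportions change.
    """
    rng = random.Random(seed)  # seeded for reproducible examples
    by_group = defaultdict(list)
    for record in records:
        by_group[record[group_key]].append(record)

    target = max(len(rows) for rows in by_group.values())
    balanced = []
    for rows in by_group.values():
        balanced.extend(rows)
        # k is 0 for the largest group, which adds nothing.
        balanced.extend(rng.choices(rows, k=target - len(rows)))
    return balanced


# Hypothetical dataset: six records from region "x", two from region "y".
records = [{"region": "x", "value": i} for i in range(6)]
records += [{"region": "y", "value": i} for i in range(2)]

balanced = oversample_to_balance(records, "region")
print(Counter(r["region"] for r in balanced))
```

Because the padded records are exact copies, a model can overfit to them; collecting more real data from the underrepresented group remains the stronger remedy where it is feasible.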

Transparency and Explainability in AI

Understanding how AI systems arrive at their decisions is crucial for building trust and accountability. Explainable AI (XAI) focuses on developing AI models whose decision-making processes are transparent and understandable.

The "Black Box" Problem

Many complex AI models, particularly deep learning systems, are often described as "black boxes." Their internal workings are so intricate that it's difficult, if not impossible, to understand precisely how they arrive at a particular output.

- Examples of "black box" AI models: Deep neural networks, complex ensemble methods.

- Limitations of relying on opaque systems: Difficulty in debugging errors, lack of trust from users, inability to ensure fairness and accountability.

- Risks associated with lack of transparency: Potential for unintended consequences, difficulty in identifying and mitigating bias, and reduced ability to address errors or malfunctions.

Promoting Explainable AI

Several techniques are being developed to enhance the transparency of AI systems. These include methods like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations), which provide insights into the factors influencing model predictions. Visualization tools can also play a vital role in making AI decision-making processes more understandable.

- Examples of explainable AI methods: Post-hoc explanation techniques such as LIME and SHAP, and inherently interpretable models such as decision trees and rule-based systems.

- Role of visualization in enhancing understanding: Visual representations of model predictions and their contributing factors can significantly improve transparency and comprehension.

- Benefits of explainability for trust and accountability: Increased user trust, easier identification and mitigation of bias, and enhanced ability to hold developers and deployers accountable.
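To show the idea behind SHAP, the sketch below computes exact Shapley values for a tiny model by enumerating every subset of features. It is not the SHAP library's API: the `shapley_values` function, the single-baseline treatment of "missing" features, and the toy scoring model are all assumptions chosen for illustration, and the exhaustive enumeration is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley attribution of predict(x) - predict(baseline) to each feature.

    A feature that is "absent" from a coalition takes its baseline value.
    Cost grows as 2**n in the number of features n.
    """
    n = len(x)

    def value(present):
        mixed = [x[i] if i in present else baseline[i] for i in range(n)]
        return predict(mixed)

    attributions = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Probability that a random ordering places exactly these
            # `size` features before feature i.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                attributions[i] += weight * (value(coalition | {i}) - value(coalition))
    return attributions


def toy_model(features):
    """Hypothetical scoring model with three numeric inputs."""
    return 2.0 * features[0] + 3.0 * features[1] - 1.0 * features[2] + 1.0


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print("attributions:", phi)
print("sum of attributions:", sum(phi))
print("prediction minus baseline:", toy_model(x) - toy_model(baseline))
```

The attributions add up to the gap between the prediction and the baseline prediction, which is the property that makes this style of explanation easy to present to users: each feature receives a share of the outcome.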

Privacy and Security in AI Systems

The development and deployment of AI systems often involve the collection, processing, and storage of vast amounts of data, much of it sensitive personal information. Protecting data privacy and security is therefore a paramount concern.

Protecting Sensitive Data

Robust data protection mechanisms are crucial to ensure the responsible use of data in AI. Data anonymization removes identifying information from datasets, although anonymized records can sometimes be re-identified when combined with other data. Differential privacy adds calibrated random noise to data or query results, limiting what can be learned about any individual while preserving aggregate statistical properties.

- Relevant data privacy regulations: GDPR (General Data Protection Regulation), CCPA (California Consumer Privacy Act).

- Importance of data encryption and access control: Protecting data from unauthorized access through encryption and implementing strict access control measures.

- Ethical implications of data breaches: Potential for identity theft, reputational damage, and legal repercussions.
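The differential privacy idea described above can be sketched with the Laplace mechanism applied to a counting query. Adding or removing one person changes a count by at most 1, so noise with scale 1/epsilon is used. The `private_count` function and the sample ages are hypothetical, and Python's `random` module is not suitable for real privacy guarantees; a production system would rely on a vetted differential privacy library.

```python
import random


def private_count(values, predicate, epsilon, rng=None):
    """Count the values matching `predicate`, plus Laplace noise of scale 1/epsilon.

    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()

    true_count = sum(1 for v in values if predicate(v))
    scale = 1.0 / epsilon  # sensitivity of a counting query is 1

    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_count + noise


# Hypothetical ages; the query asks how many people are over 40.
ages = [18, 25, 31, 44, 52, 67]
rng = random.Random(7)  # seeded only so the example is repeatable
for epsilon in (0.1, 1.0, 10.0):
    answer = private_count(ages, lambda age: age > 40, epsilon, rng)
    print(f"epsilon={epsilon}: noisy count = {answer:.2f}")
```

Each individual answer is deliberately inexact, but averages over many queries or large populations stay close to the truth, which is the trade-off between individual privacy and aggregate accuracy.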

Securing AI Systems from Attacks

AI systems are not immune to attacks. Adversarial attacks, where malicious actors manipulate input data to mislead the AI system, pose a significant security threat. Robust security measures are crucial to protect AI systems from such attacks and ensure their reliable operation.

- Types of AI security threats: Adversarial attacks, data poisoning, model theft, and inference attacks.

- Methods for securing AI models: Robust model training, input validation, anomaly detection, and continuous security monitoring.

- Importance of ongoing security audits: Regular security assessments to identify and mitigate potential vulnerabilities.
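To make the adversarial threat concrete, the sketch below applies the fast gradient sign method (FGSM), one well-known attack, to a tiny logistic regression classifier. The weights, the input, and the step size `epsilon` are made-up values for illustration; real attacks target far larger models, but the mechanism is the same: nudge each input feature in the direction that increases the model's loss.

```python
import math


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def fgsm_perturb(weights, bias, x, y, epsilon):
    """Shift each feature by +/- epsilon in the direction that raises the loss.

    For logistic loss, the gradient with respect to the input is (p - y) * w.
    """
    p = predict_proba(weights, bias, x)
    gradient = [(p - y) * w for w in weights]
    signs = [(g > 0) - (g < 0) for g in gradient]  # -1, 0, or +1
    return [xi + epsilon * s for xi, s in zip(x, signs)]


# Hypothetical model and input; the true label is 1.
weights, bias = [2.0, -1.0], 0.0
x, y = [0.3, 0.2], 1

x_adv = fgsm_perturb(weights, bias, x, y, epsilon=0.3)
print("original input:", x, "-> P(class 1) =", round(predict_proba(weights, bias, x), 3))
print("perturbed input:", x_adv, "-> P(class 1) =", round(predict_proba(weights, bias, x_adv), 3))
```

In this toy case a shift of 0.3 per feature is enough to move the prediction across the 0.5 decision threshold. Input validation and anomaly detection aim to catch such inputs, while robust training methods try to make the model less sensitive to them.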

Accountability and Responsibility in AI

When AI systems make mistakes or cause harm, it's crucial to establish clear lines of responsibility. This requires careful consideration of the legal and ethical frameworks governing AI development and deployment.

Establishing Clear Lines of Responsibility

Determining who is responsible – developers, deployers, users, or a combination thereof – in AI-related incidents is a complex challenge. Clear guidelines and regulations are needed to assign accountability in a fair and effective manner.

- The role of developers, deployers, and users: Developers are responsible for the design and development of the AI system, while deployers are responsible for its deployment and operation. Users have a responsibility to use the AI system ethically and responsibly.

- Challenges of assigning blame in complex systems: Difficulties in tracing errors back to their source, especially in highly complex systems.

- Need for clear guidelines and regulations: Establishing clear legal and ethical frameworks for assigning accountability in AI-related incidents.

Mechanisms for Redress

It's essential to establish mechanisms for addressing harms caused by AI systems and ensuring that victims have recourse. This includes procedures for filing complaints, investigating incidents, and providing appropriate remedies.

- Dispute resolution mechanisms: Establishing independent bodies to investigate AI-related incidents and mediate disputes.

- Compensation for damages: Providing compensation to individuals harmed by AI systems.

- Importance of establishing ethical review boards: Creating independent bodies to review AI systems before deployment and provide ethical oversight.

Conclusion

Responsible development and deployment of AI requires careful consideration of ethical implications and the potential risks associated with biased algorithms, lack of transparency, privacy violations, and accountability gaps. By proactively addressing these challenges and adhering to the guiding principles discussed, we can harness the power of AI for good while mitigating its potential harms. Let's work together to explore the boundaries of AI learning responsibly, keep learning about responsible AI practices, and build a future where AI benefits all of humanity.

Featured Posts

-

Nyt Mini Crossword Thursday April 10 Clues And Solutions

May 31, 2025

Nyt Mini Crossword Thursday April 10 Clues And Solutions

May 31, 2025 -

Supporting Manitoba Wildfire Victims Red Cross Aid And How To Donate

May 31, 2025

Supporting Manitoba Wildfire Victims Red Cross Aid And How To Donate

May 31, 2025 -

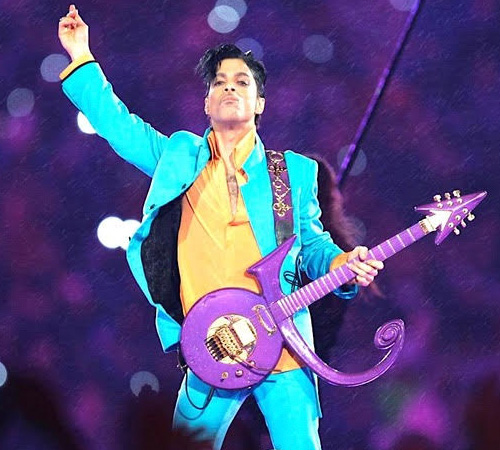

Today In History March 26th The Tragic Death Of Prince And The Fentanyl Report

May 31, 2025

Today In History March 26th The Tragic Death Of Prince And The Fentanyl Report

May 31, 2025 -

Nyt Mini Crossword Answers For Tuesday April 8

May 31, 2025

Nyt Mini Crossword Answers For Tuesday April 8

May 31, 2025 -

3 000 Year Old Mayan Complex Unearthed Pyramids And Canals Revealed

May 31, 2025

3 000 Year Old Mayan Complex Unearthed Pyramids And Canals Revealed

May 31, 2025