FTC Investigates OpenAI's ChatGPT: What This Means For AI Development

The FTC's Concerns Regarding ChatGPT and AI Safety

The Federal Trade Commission (FTC) is investigating OpenAI's ChatGPT over concerns related to AI safety and consumer protection. These concerns center on the harm the technology could cause and on whether OpenAI's safeguards are adequate. The FTC's focus appears to be on reducing the risks this language model poses to consumers.

- Potential for generating misleading or harmful content: ChatGPT's ability to generate realistic-sounding text raises concerns about misuse, including impersonation, the spread of misinformation, hate speech, and scams. It also raises the question of how much responsibility developers bear for preventing such misuse.

-

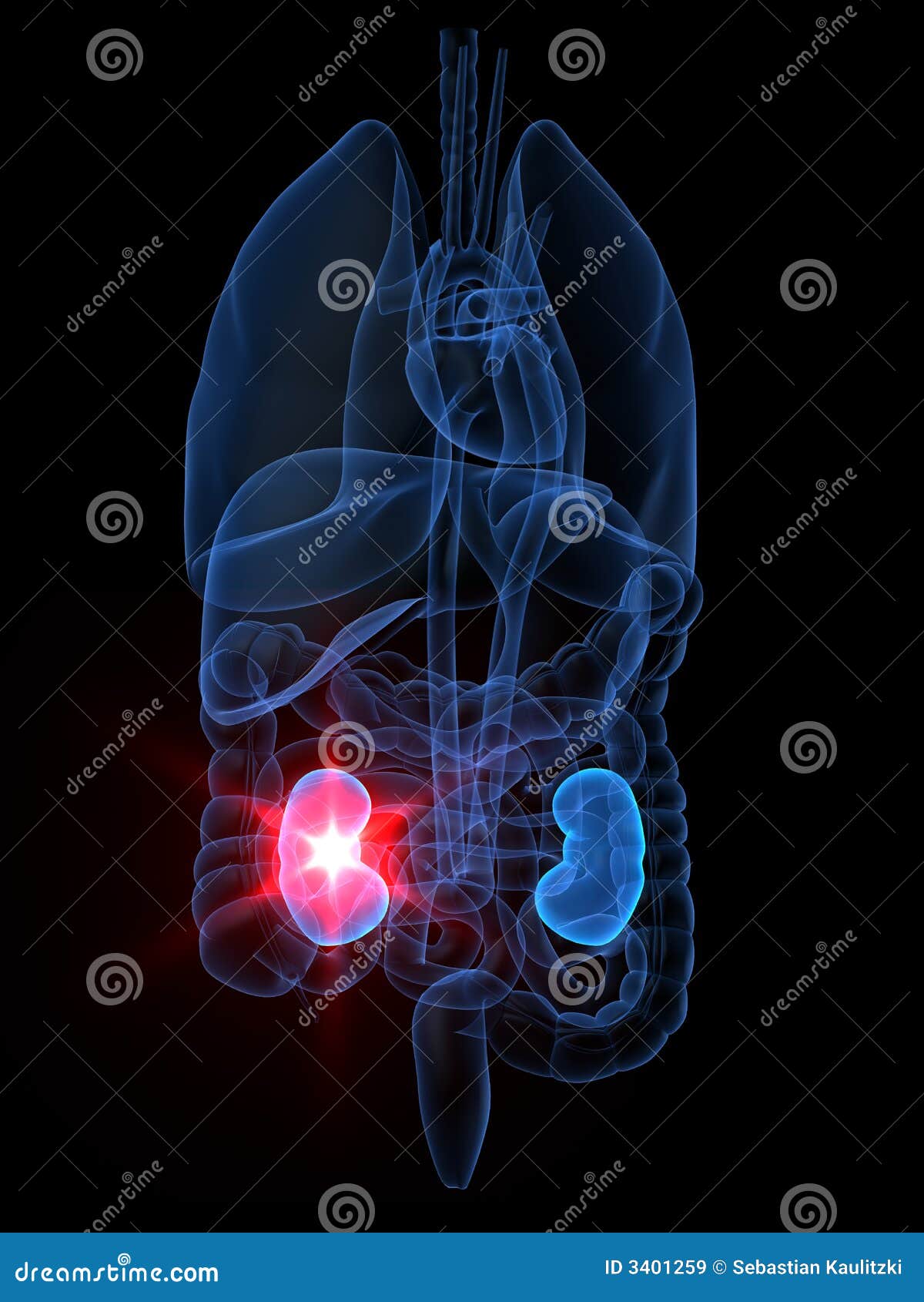

Concerns about data privacy and security in ChatGPT's training data: The vast datasets used to train ChatGPT raise concerns about the privacy and security of personal information potentially included in those datasets. The FTC is likely investigating whether OpenAI has adequately protected user data and complied with relevant privacy regulations.

- Lack of transparency regarding ChatGPT's algorithms and decision-making processes: The "black box" nature of many AI systems, including ChatGPT, makes it difficult to understand how they arrive at their outputs. That opacity makes biases and errors in the system harder to identify and correct. The FTC's inquiry signals a push for greater accountability and explainability in AI systems.

- The potential for discriminatory outcomes due to biases in training data: AI systems are trained on data, and if that data reflects existing societal biases, the system is likely to perpetuate and may even amplify them. The FTC is likely examining whether ChatGPT exhibits discriminatory tendencies and whether OpenAI has taken sufficient steps to mitigate bias in its model.

Implications for OpenAI and the Broader AI Industry

The FTC investigation into OpenAI's ChatGPT is likely to have significant implications for OpenAI itself and for the broader AI industry. Its outcome could set a precedent for future regulation and influence how other AI companies develop and deploy their technologies.

- Increased regulatory pressure on other AI developers: The FTC's actions signal that AI developers can expect to be held accountable for the societal impacts of their products. This will likely lead to increased scrutiny and regulatory pressure on other companies working on similar technologies.

- Potential for increased costs and slower development due to stricter regulations: Compliance with stricter regulations is likely to increase the cost and complexity of AI development. This could slow innovation, but it may also lead to more responsible and ethical development practices.

- A shift toward more ethical and responsible AI development practices: The investigation will likely encourage the AI industry to prioritize ethical considerations and incorporate robust safety mechanisms into its products. This might involve increased investment in AI safety research and the development of better bias detection and mitigation techniques.

- Demand for greater transparency and accountability from AI companies: The public increasingly expects transparency and accountability from AI companies. The FTC investigation reinforces this expectation and will likely lead to a push for more open and explainable AI systems.

The Future of AI Regulation and Ethical Considerations

The FTC's investigation underscores the urgent need for a robust regulatory framework for AI. The ongoing debate centers on finding a balance between fostering innovation and mitigating potential harms.

- The need for robust AI safety standards and testing protocols: Clear standards and rigorous testing protocols are crucial to ensure AI systems are safe and reliable. These standards should cover bias detection, data security, and the potential for misuse, among other aspects.

- The role of independent audits and ethical review boards: Independent audits and ethical review boards can help ensure that AI systems are developed and deployed responsibly. These bodies can provide an objective assessment of AI systems and recommend improvements.

- The challenges of balancing innovation with responsible development: One of the biggest challenges is encouraging innovation while ensuring that AI is developed responsibly. Overly restrictive regulations could stifle innovation, while inadequate regulation could lead to significant harms.

- The importance of public education and engagement in AI policy discussions: Public education and engagement are crucial for shaping responsible AI policy. Informed public debate can help policymakers make sound decisions and ensure that AI systems are developed in a way that benefits society as a whole.

Best Practices for Responsible AI Development in Light of the FTC Investigation

The FTC investigation offers valuable lessons for AI developers. Prioritizing ethical considerations and implementing robust safety measures are no longer optional but essential for responsible AI development.

- Implementing rigorous bias detection and mitigation techniques: AI developers must actively identify and mitigate biases in their training data and algorithms to ensure fairness and prevent discriminatory outcomes.

- Ensuring data privacy and security through robust data governance: Strict data governance practices are essential to protect user privacy and keep data secure. This involves implementing robust security measures and complying with relevant privacy regulations.

- Providing clear explanations of AI system functionality and limitations: Transparency is crucial to building trust in AI systems. Developers should provide clear explanations of how their systems work and acknowledge their limitations.

- Seeking independent audits and evaluations of AI systems: Independent audits and evaluations can help identify potential risks and ensure that AI systems are developed and deployed responsibly.
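To make the bias detection practice above more concrete, here is a minimal Python sketch of one common check: comparing the rate of favorable outcomes across groups, often called the demographic parity gap. It is only an illustration under stated assumptions. The function names, the group labels "A" and "B", and the sample data are all hypothetical, and nothing here reflects how the FTC, OpenAI, or any particular auditor measures bias. Demographic parity is also just one of several fairness metrics, and a small gap on this measure does not by itself show that a system is fair.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes for each group.

    `records` is an iterable of (group, outcome) pairs, where outcome is
    1 for a favorable model decision and 0 otherwise. Both the grouping
    and the outcome encoding are assumptions made for this sketch.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favorable[group] += outcome
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Return the difference between the highest and lowest group rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: four decisions for each of two groups.
sample = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = selection_rates(sample)
gap = demographic_parity_gap(sample)
print(rates)
print(gap)
```

In this made-up sample, group A receives a favorable outcome three times out of four and group B once out of four, so the gap is 0.5. In practice a team would choose its own threshold for when a gap warrants investigation, and would pair a check like this with other metrics and with independent review.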

Conclusion: Key Takeaways and a Call to Action

The FTC's investigation into OpenAI's ChatGPT marks a significant turning point in the development and regulation of AI. The investigation highlights the crucial need for increased accountability, transparency, and ethical considerations in the design, development, and deployment of powerful AI systems like ChatGPT. The future of AI hinges on proactively addressing the potential risks while fostering innovation. Learn more about the FTC's investigation into OpenAI's ChatGPT and stay updated on the evolving landscape of AI regulation. Join the conversation on responsible AI development and help shape a future where AI benefits all of humanity.

Featured Posts

-

Justin Herbert Chargers Set For Brazil In 2025 Season Opener

Apr 29, 2025

Justin Herbert Chargers Set For Brazil In 2025 Season Opener

Apr 29, 2025 -

Will Trump Pardon Pete Rose A Look At The Years Long Campaign

Apr 29, 2025

Will Trump Pardon Pete Rose A Look At The Years Long Campaign

Apr 29, 2025 -

Blockchain Security Enhanced Chainalysis Integrates Alteryas Ai Capabilities

Apr 29, 2025

Blockchain Security Enhanced Chainalysis Integrates Alteryas Ai Capabilities

Apr 29, 2025 -

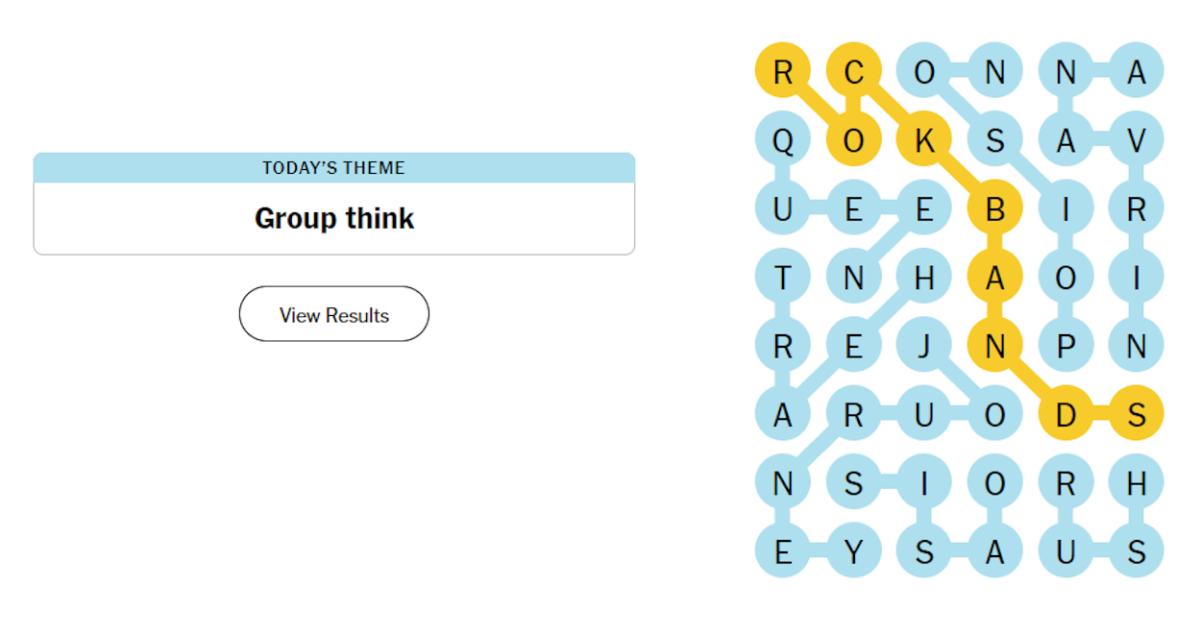

April 29th Nyt Strands Answers Game 422

Apr 29, 2025

April 29th Nyt Strands Answers Game 422

Apr 29, 2025 -

Double Trouble In Hollywood Writers And Actors Strike Brings Production To Halt

Apr 29, 2025

Double Trouble In Hollywood Writers And Actors Strike Brings Production To Halt

Apr 29, 2025

Latest Posts

-

Het Einde Van Een Icoon Thomas Mueller En Bayern Muenchen Gaan Uit Elkaar

May 12, 2025

Het Einde Van Een Icoon Thomas Mueller En Bayern Muenchen Gaan Uit Elkaar

May 12, 2025 -

Het Afscheid Van Thomas Mueller Een Bittere Pil Voor Bayern Muenchen

May 12, 2025

Het Afscheid Van Thomas Mueller Een Bittere Pil Voor Bayern Muenchen

May 12, 2025 -

De Vernedering Van Kompany Analyse Van De Mislukking

May 12, 2025

De Vernedering Van Kompany Analyse Van De Mislukking

May 12, 2025 -

Kompanys Team Lijdt Vernederende Verlies

May 12, 2025

Kompanys Team Lijdt Vernederende Verlies

May 12, 2025 -

Bitter Einde Voor Bayern Muenchen Het Vertrek Van Thomas Mueller

May 12, 2025

Bitter Einde Voor Bayern Muenchen Het Vertrek Van Thomas Mueller

May 12, 2025