Google Vs. OpenAI: A Deep Dive Into I/O And Io Differences

Understanding I/O in the Context of AI

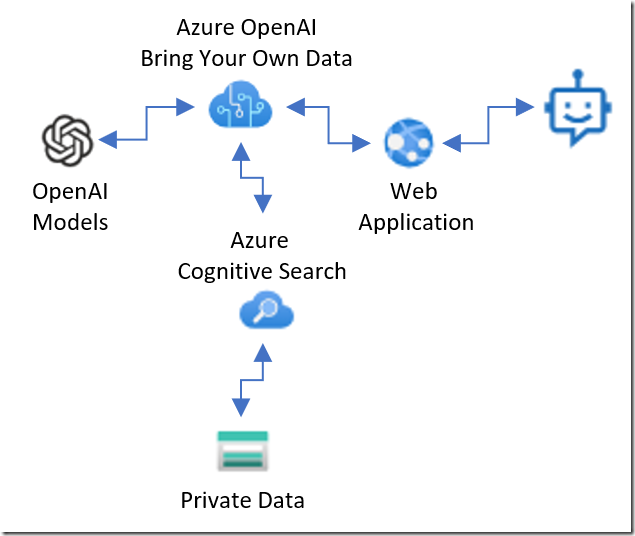

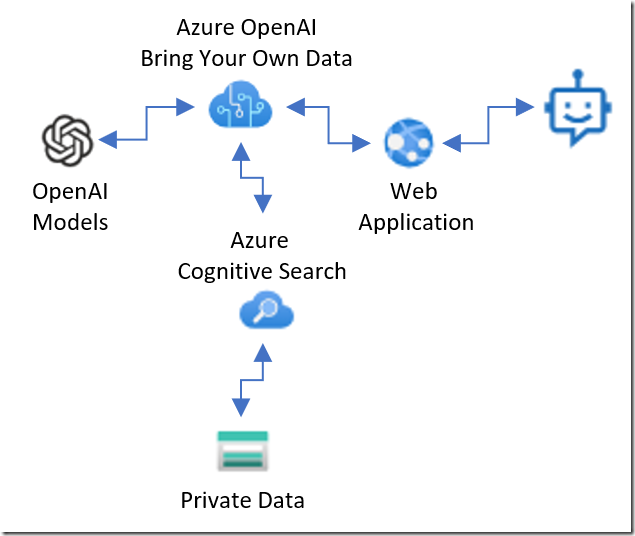

In machine learning, input/output (I/O) covers how data moves into, through, and out of a model. It spans three stages: data ingestion (how the model receives its training data and user inputs), data processing (the internal computations the model performs), and output generation (how the model presents its results). That range runs from reading data from a file or database (file I/O, or "io") to returning a prediction or generated text.
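As a rough illustration of these three stages, the sketch below runs a toy pipeline entirely in memory. The function names (`ingest`, `process`, `emit`) and the word-counting "model" are hypothetical stand-ins chosen for this example, not anything either company ships.

```python
import io
import json

def ingest(stream):
    """Data ingestion: read one JSON record per line from a text stream."""
    return [json.loads(line) for line in stream if line.strip()]

def process(records):
    """Data processing: a stand-in 'model' that scores text by word count."""
    return [{"id": r["id"], "score": len(r["text"].split())} for r in records]

def emit(results, stream):
    """Output generation: write results back out as JSON lines."""
    for row in results:
        stream.write(json.dumps(row) + "\n")

# In-memory streams stand in for a real file, database, or network source.
source = io.StringIO(
    '{"id": 1, "text": "hello world"}\n'
    '{"id": 2, "text": "one two three"}\n'
)
sink = io.StringIO()
emit(process(ingest(source)), sink)
```

Swapping `io.StringIO` for an opened file or a network stream changes where the data comes from without touching the processing step, which is the separation the three-stage view is meant to capture.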

Key Differences in Approach: At a high level, Google's I/O strategy emphasizes massive-scale data processing and optimization for speed and efficiency, while OpenAI prioritizes user-friendly interfaces and seamless interaction with its models through APIs. This difference stems from their differing core business models and target audiences.

- Google emphasizes large-scale data processing and efficient I/O. It handles petabytes of data daily, which requires highly optimized I/O systems.

- OpenAI focuses on model interaction and user-friendly I/O interfaces. It prioritizes ease of use for developers and researchers working with its models.

- Cloud infrastructure shapes each company's I/O strategy. Both rely on it, but Google's internal infrastructure is significantly larger and more tightly integrated with its AI development, while OpenAI relies heavily on cloud providers for scalability.

Google's I/O: Scalability and Efficiency

Google's vast data centers and distributed computing infrastructure are central to its I/O strategy. This allows for unparalleled scalability and speed in handling enormous datasets. This infrastructure supports Google's various AI models and services.

- Google's infrastructure: The sheer scale of Google's data centers enables parallel processing of massive datasets, optimizing I/O operations. This includes specialized hardware and software designed for efficient data transfer and storage.

- TensorFlow and other frameworks: Google's open-source TensorFlow framework, along with internal tools, is designed to handle the complexities of large-scale I/O. These tools provide optimized functions for reading, writing, and processing data efficiently.

- Examples of efficient I/O at scale: Google Cloud Storage, BigQuery, and Dataflow are services built to optimize data ingestion and processing, with high throughput and low latency as design goals.

- Distributed computing and its impact on I/O: Google's advances in distributed computing, such as MapReduce and its successors, improve I/O performance by distributing the workload across many machines.

- Google Cloud Platform (GCP) services related to I/O: GCP offers a suite of services designed for efficient I/O operations, including Cloud Storage, Bigtable, and Dataproc.
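The idea of distributing a workload across machines can be sketched in miniature with a MapReduce-style word count. This is only an illustration under stated assumptions: threads on one machine stand in for separate machines, the shards are small in-memory lists rather than files in a distributed store, and the helper names (`map_shard`, `reduce_counts`, `word_count`) are hypothetical. It is not Google's implementation.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def map_shard(shard):
    """Map step: count words within a single shard of the dataset."""
    return Counter(word for line in shard for word in line.split())

def reduce_counts(partials):
    """Reduce step: merge the per-shard counts into one total."""
    total = Counter()
    for partial in partials:
        total.update(partial)
    return total

def word_count(shards, workers=4):
    # Each shard goes to its own worker, mimicking how a distributed
    # system spreads reads and computation across machines.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(map_shard, shards))
    return reduce_counts(partials)

shards = [
    ["big data big models"],
    ["data moves fast", "models need data"],
]
totals = word_count(shards)
```

Because each shard is counted independently, adding more shards and more workers scales the map step without changing the reduce logic, which is the property that makes this pattern attractive for very large datasets.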

OpenAI's I/O: User Experience and Model Interaction

OpenAI's approach centers around providing easy-to-use APIs that enable developers to integrate its powerful models into their applications. The focus is less on raw data processing speed and more on user experience and intuitive model interaction.

- OpenAI's API-centric approach: OpenAI's APIs abstract away much of the underlying complexity of I/O, making it simple for developers to interact with models like GPT-3 and DALL-E 2.

- Model interaction design: OpenAI prioritizes designing intuitive prompts and clear, concise outputs. The goal is to make interacting with the AI model as seamless as possible.

- API usage and the I/O involved: Sending a text prompt to the GPT-3 API and receiving generated text in response is a clear example of OpenAI's I/O workflow. Similarly, sending a prompt to DALL-E 2 and receiving a generated image illustrates the same pattern.

- Natural language processing and I/O design: OpenAI's focus on natural language processing (NLP) shapes its I/O design, which prioritizes human-readable input and output formats.

- User experience compared: Working with Google's AI offerings often involves more technical setup and more complex interactions, while OpenAI aims for a more user-friendly experience through its APIs.
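The prompt-in, text-out workflow described above can be sketched with the Python standard library alone. The example assumes OpenAI's Chat Completions endpoint and its documented JSON request and response shape; `MODEL_NAME` and `YOUR_API_KEY` are placeholders, and the helper names are hypothetical. The request is only constructed, never sent, so the block runs without network access or credentials.

```python
import json
import urllib.request

# Assumed endpoint; check OpenAI's current API reference before relying on it.
API_URL = "https://api.openai.com/v1/chat/completions"

def build_chat_request(prompt, api_key, model="MODEL_NAME"):
    """Package a text prompt as an HTTP POST request (not sent here)."""
    payload = {
        "model": model,  # placeholder; substitute a model your account can use
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

def extract_text(response_body):
    """Pull the generated text out of a JSON response body."""
    return json.loads(response_body)["choices"][0]["message"]["content"]

request = build_chat_request(
    "Summarize file I/O in one sentence.", api_key="YOUR_API_KEY"
)
```

To actually send the request, one would pass it to `urllib.request.urlopen` and hand the response bytes to `extract_text`. In practice most developers use OpenAI's official client library, which wraps these same steps.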

The Nuances of "io" (File I/O)

The discussion so far has covered I/O in general, but "io" often refers specifically to file input/output operations. Both Google and OpenAI rely heavily on efficient file I/O for storing, retrieving, and managing data.

- General I/O versus file I/O ("io"): General I/O encompasses all data movement, while file I/O focuses specifically on reading and writing data to and from files. File formats, compression techniques, and data organization all play a significant role in file I/O efficiency.

- How each company handles file I/O: Google uses a distributed file system optimized for high throughput, while OpenAI leverages cloud storage services for data management.

- Differences in file formats and data handling: Google might use custom formats optimized for its internal systems, while OpenAI leans towards widely adopted formats for broader compatibility.

- Efficiency trade-offs: Google's distributed system potentially offers greater efficiency for massive datasets, while OpenAI's approach prioritizes ease of access and compatibility.

- Security implications: Both companies employ robust security measures, but the specifics of each file I/O system influence its overall security posture.
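To make the point about file formats and compression concrete, here is a small sketch using Python's standard `io`, `gzip`, and `json` modules. It writes records as gzip-compressed JSON Lines (a widely adopted format) and streams them back. The sample records and helper names are made up for illustration, and an in-memory `io.BytesIO` buffer stands in for a real file or cloud object.

```python
import gzip
import io
import json

def write_jsonl_gz(records, buffer):
    """Write records as gzip-compressed JSON Lines into a binary buffer."""
    with gzip.GzipFile(fileobj=buffer, mode="wb") as gz:
        for record in records:
            gz.write((json.dumps(record) + "\n").encode("utf-8"))

def read_jsonl_gz(buffer):
    """Stream records back one line at a time instead of loading everything."""
    buffer.seek(0)
    with gzip.GzipFile(fileobj=buffer, mode="rb") as gz:
        for line in io.TextIOWrapper(gz, encoding="utf-8"):
            yield json.loads(line)

# Repetitive sample data, so compression has something to work with.
records = [{"id": i, "text": "sample text " * 20} for i in range(100)]
raw_size = sum(len(json.dumps(r)) + 1 for r in records)

buffer = io.BytesIO()
write_jsonl_gz(records, buffer)
compressed_size = len(buffer.getvalue())
restored = list(read_jsonl_gz(buffer))
```

Comparing `raw_size` with `compressed_size` shows how much a compression choice alone can reduce the bytes moved, while the line-oriented format keeps reads streamable. How much is saved depends heavily on the data; this toy input is unusually repetitive.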

Conclusion

Google and OpenAI approach I/O and io with distinct philosophies. Google prioritizes scalability and efficiency in handling massive datasets, leveraging its substantial infrastructure. OpenAI, by contrast, focuses on user experience and streamlined model interaction through APIs. Understanding these differences helps developers choose between the two platforms for their AI projects. To go deeper, explore the documentation each company publishes and keep following the evolving landscape of artificial intelligence.
