Investigating The Use Of AI Therapy As A Surveillance Mechanism

Data Collection and Privacy Concerns in AI Therapy

AI therapy platforms, including chatbots and virtual assistants, collect large amounts of sensitive data. Beyond basic personal information such as name, age, and location, this data can include detailed mental health histories, treatment plans, and the full text of intimate conversations. Data this sensitive is highly attractive to anyone seeking to misuse it, which raises significant privacy concerns.

The potential for data breaches and unauthorized access is substantial. Many platforms claim robust security measures, but no system is impenetrable. A single breach could expose highly personal information to malicious actors, enabling identity theft and blackmail and causing lasting harm to the people affected. Furthermore, a lack of transparency about data usage and sharing practices leaves users unaware of how their information is being used and with whom it might be shared.

- Data security measures implemented by different AI therapy platforms: These vary widely, with some platforms offering stronger encryption and security protocols than others. Users should evaluate these measures critically before sharing sensitive information.

- Potential for data manipulation and misuse: Data could be misused for targeted advertising, profiling, or even manipulation by third parties.

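- Regulations and laws surrounding data privacy in the context of AI therapy: Regulations such as the EU's GDPR and the US HIPAA offer some protection, but their application to AI therapy is often unclear and may be insufficient. HIPAA, for example, applies only to covered entities such as healthcare providers, health plans, and their business associates, so many direct-to-consumer apps fall outside its scope.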

- Comparison of data privacy policies across different platforms: Privacy policies differ significantly from one platform to another, highlighting the need for standardized and transparent practices.

Algorithmic Bias and Discrimination in AI Therapy

Algorithms powering AI therapy platforms are trained on massive datasets, and these datasets often reflect existing societal biases. This means the AI may perpetuate and even amplify existing inequalities in mental healthcare access and quality. For instance, an algorithm trained primarily on data from one demographic group might misinterpret or fail to accurately diagnose conditions in individuals from other backgrounds. This could lead to inaccurate diagnoses, inappropriate treatment plans, and the exacerbation of health disparities for marginalized communities.

- Examples of algorithmic bias in AI systems used for mental health: Studies have shown biases related to race, gender, and socioeconomic status in AI diagnostic tools.

- The role of training data in shaping AI therapy's outputs: The quality, diversity, and representativeness of the training data directly influence the fairness and accuracy of the AI's responses.

- Methods for mitigating algorithmic bias in AI therapy development: Rigorous testing, diverse datasets, and ongoing monitoring are essential for minimizing bias.

- The need for diversity and inclusion in AI therapy research and development: Involving diverse teams in the development and evaluation of AI therapy systems is critical to mitigating bias.
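The testing and monitoring described above can start with something as simple as comparing a model's accuracy across demographic groups. The Python sketch below is a minimal illustration under stated assumptions: the record format (group, true label, predicted label), the function names, the example data, and the 0.05 tolerance are all hypothetical, and a real audit would use far larger samples and richer fairness metrics.

```python
from collections import defaultdict


def per_group_accuracy(records):
    """Compute diagnostic accuracy for each demographic group.

    records: iterable of (group, true_label, predicted_label) tuples,
    a hypothetical format chosen for illustration.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, true_label, predicted_label in records:
        total[group] += 1
        if true_label == predicted_label:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def flag_disparity(records, max_gap=0.05):
    """Return (gap, flagged): the largest accuracy gap between any two
    groups, and whether it exceeds the hypothetical tolerance max_gap."""
    accuracy = per_group_accuracy(records)
    if not accuracy:
        return 0.0, False
    gap = max(accuracy.values()) - min(accuracy.values())
    return gap, gap > max_gap


# Hypothetical audit data: the model is correct for every group-A case
# but misses an anxiety case in group B.
records = [
    ("A", "anxiety", "anxiety"),
    ("A", "none", "none"),
    ("B", "anxiety", "none"),
    ("B", "none", "none"),
]
print(per_group_accuracy(records))  # {'A': 1.0, 'B': 0.5}
print(flag_disparity(records))      # (0.5, True)
```

Accuracy parity is only one of several fairness criteria. Metrics such as equalized odds compare error rates separately for each outcome, which matters when a missed diagnosis and a false alarm carry different costs.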

The Potential for AI Therapy to be Used as a Surveillance Tool

The data collected by AI therapy platforms represents a potent resource for surveillance. This information could be misused by employers, insurance companies, or even government agencies to monitor individuals' mental health without their consent. Such surveillance could lead to discrimination in employment, insurance coverage, or even legal proceedings. Furthermore, it poses a serious threat to freedom of expression and thought, as individuals may self-censor their thoughts and feelings for fear of repercussions.

- Examples of potential misuse of AI therapy data by employers, government agencies, or insurance companies: Imagine scenarios where job applications are rejected based on AI-detected anxiety levels, or insurance premiums are raised due to AI-identified mental health concerns.

- The erosion of patient-therapist confidentiality: The traditional confidential relationship between patient and therapist is undermined when conversations are stored by a platform and can be accessed by third parties.

- The need for robust ethical guidelines and regulations: Strong ethical guidelines and regulations are needed to prevent the misuse of AI therapy data for surveillance.

- The potential for manipulation and control through AI therapy: In the wrong hands, AI therapy could be used to manipulate individuals' thoughts and behaviors, raising serious ethical concerns.

The Role of Government Regulation and Oversight

The current regulatory landscape surrounding AI therapy is fragmented and insufficient. Stronger regulations are urgently needed to protect user privacy and prevent the misuse of this technology as a surveillance tool. This requires a multi-pronged approach involving data protection laws, ethical guidelines for AI development, and independent oversight bodies.

- Examples of existing data privacy regulations (e.g., GDPR, HIPAA): While these provide a foundation, they need specific adaptations to address the unique challenges of AI therapy.

- Gaps in current regulations specific to AI therapy: The lack of clear guidelines regarding data ownership, consent, and data sharing practices leaves significant vulnerabilities.

- Recommendations for improving data security and transparency: Mandatory security audits, transparent data usage policies, and user-friendly data access controls are crucial.

- The importance of interdisciplinary collaboration in establishing ethical guidelines: Collaboration between ethicists, technologists, mental health professionals, and policymakers is needed to create effective regulations.

Addressing the Surveillance Risks of AI Therapy

The potential for AI therapy to be used as a surveillance mechanism is a serious concern. We have examined the significant privacy risks associated with data collection, the potential for algorithmic bias to exacerbate existing inequalities, and the alarming lack of robust regulations. Protecting user autonomy, privacy, and freedom from unwarranted surveillance is paramount. We must engage in a critical discussion of AI therapy's potential for misuse, demand greater transparency in AI therapy data practices, and advocate for responsible development and deployment of AI therapy. The future of mental healthcare depends on it. Let's work together to ensure that AI therapy remains a tool for healing, not a weapon of surveillance.

Featured Posts

-

Colorado Rapids Defeat Opponent Calvin Harris Cole Bassett Goals Steffens Strong Performance

May 16, 2025

Colorado Rapids Defeat Opponent Calvin Harris Cole Bassett Goals Steffens Strong Performance

May 16, 2025 -

Dodgers Left Handed Hitters Overcoming The Slump And Returning To Form

May 16, 2025

Dodgers Left Handed Hitters Overcoming The Slump And Returning To Form

May 16, 2025 -

Jimmy Butlers Injury Fan Reactions And Game 4 Implications

May 16, 2025

Jimmy Butlers Injury Fan Reactions And Game 4 Implications

May 16, 2025 -

Chinas Strategic Approach To Securing A Us Agreement

May 16, 2025

Chinas Strategic Approach To Securing A Us Agreement

May 16, 2025 -

The Economic Fallout How Trumps Tariffs Cost California 16 Billion

May 16, 2025

The Economic Fallout How Trumps Tariffs Cost California 16 Billion

May 16, 2025

Latest Posts

-

Colorado Rapids Defeat Opponent Calvin Harris Cole Bassett Goals Steffens Strong Performance

May 16, 2025

Colorado Rapids Defeat Opponent Calvin Harris Cole Bassett Goals Steffens Strong Performance

May 16, 2025 -

Lafc Prioritizes Mls San Jose Game A Key Indicator

May 16, 2025

Lafc Prioritizes Mls San Jose Game A Key Indicator

May 16, 2025 -

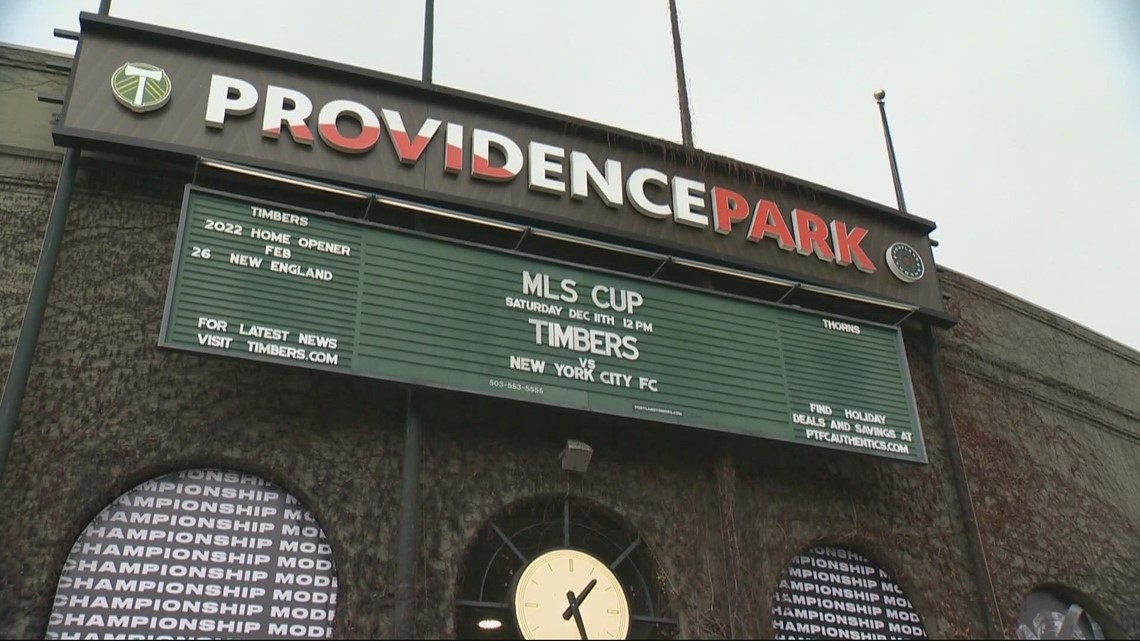

Shorthanded Timbers Fall To San Jose Ending Seven Match Unbeaten Run

May 16, 2025

Shorthanded Timbers Fall To San Jose Ending Seven Match Unbeaten Run

May 16, 2025 -

San Jose Vs Lafc Mls Match Highlights Lafcs Shift In Priorities

May 16, 2025

San Jose Vs Lafc Mls Match Highlights Lafcs Shift In Priorities

May 16, 2025 -

Portland Timbers Unbeaten Streak Ends At Seven Games Against San Jose

May 16, 2025

Portland Timbers Unbeaten Streak Ends At Seven Games Against San Jose

May 16, 2025