OpenAI's 2024 Developer Event: Easier Voice Assistant Creation

Streamlined Development Tools and APIs

OpenAI's push to simplify voice assistant creation shows in its updates to development tools and APIs. The goal is to make the process accessible to a wider range of developers, regardless of experience level.

Simplified API Access

OpenAI is expected to unveil new and improved APIs designed for easier integration of voice recognition, natural language processing (NLP), and speech synthesis capabilities. This should lower the technical hurdles associated with voice assistant development.

- Reduced complexity of API calls: Expect cleaner, more intuitive calls that need less code for basic functionality.

- Improved documentation: Comprehensive, easily navigable documentation will help developers understand and use the APIs effectively.

- Enhanced error handling: More robust error handling will help developers identify and resolve issues quickly, speeding up development.

- Pre-built templates for common voice assistant functions: Templates for tasks like setting reminders or playing music will let developers build core functionality quickly.

The improvements extend beyond simplicity. Developers can also expect lower latency for faster responses, increased accuracy in speech recognition and NLP, and support for a wider range of languages, making global deployment easier.
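To make the three capabilities named above concrete, here is a minimal sketch of how recognition, language processing, and synthesis might be chained, with errors labeled by the stage that raised them. Every name in it (`VoicePipeline`, `StageError`, the `fake_*` functions) is hypothetical and stands in for whatever client calls a real service provides; none of it is an OpenAI API.

```python
from dataclasses import dataclass
from typing import Callable


class StageError(Exception):
    """Raised when one pipeline stage fails; records which stage it was."""

    def __init__(self, stage: str, cause: Exception):
        super().__init__(f"{stage} failed: {cause}")
        self.stage = stage
        self.cause = cause


@dataclass
class VoicePipeline:
    # Each stage is a plain callable so a real service client can be swapped in.
    transcribe: Callable[[bytes], str]   # audio -> user text
    respond: Callable[[str], str]        # user text -> reply text
    synthesize: Callable[[str], bytes]   # reply text -> audio

    def handle(self, audio: bytes) -> bytes:
        text = self._run("transcribe", self.transcribe, audio)
        reply = self._run("respond", self.respond, text)
        return self._run("synthesize", self.synthesize, reply)

    @staticmethod
    def _run(stage, fn, arg):
        # Wrap any failure so the caller can see where it happened.
        try:
            return fn(arg)
        except Exception as exc:
            raise StageError(stage, exc) from exc


# Stand-in stages for illustration only (assumptions, not real services).
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_respond(text: str) -> str:
    return f"You said: {text}"


def fake_synthesize(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = VoicePipeline(fake_transcribe, fake_respond, fake_synthesize)
```

Calling `pipeline.handle(b"hello")` runs all three stand-in stages in order. Because each stage is injected, swapping a stand-in for a real speech or language client would not change the calling code.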

Pre-trained Models for Faster Development

One of the biggest time-savers in voice assistant creation is the use of pre-trained models. OpenAI is focusing on providing a robust library of these models, reducing the need to train models from scratch on large datasets.

- Availability of models optimized for various voice assistant tasks: Expect models specifically designed for intent recognition, dialogue management, and other crucial voice assistant functions.

- Ease of customization with fine-tuning: While pre-trained models offer a significant head start, the ability to fine-tune them for specific needs is crucial. OpenAI will likely provide tools for easy customization.

Using pre-trained models drastically reduces development time and resource consumption compared to building models from scratch. This allows developers to focus on the unique aspects of their voice assistant, rather than getting bogged down in foundational model training.

Enhanced Natural Language Understanding (NLU)

The ability of a voice assistant to truly understand user requests is paramount. OpenAI's advancements in NLU are set to make voice assistants far more intuitive and responsive.

Improved Contextual Awareness

OpenAI is pushing the boundaries of contextual understanding in voice assistants. This means assistants will be better able to follow conversations, understand nuanced requests, and maintain context over multiple turns of dialogue.

- Advanced dialogue management capabilities: Expect better handling of complex conversations, with assistants remembering previous interactions and using them to inform later responses.

- Improved handling of ambiguities and complex queries: Voice assistants will be better equipped to decipher ambiguous requests and deal with complex, multi-part queries.

- Better understanding of user intent even with noisy input: Assistants should recover the user's intent despite background noise or imperfect speech.

These improvements are powered by advancements in transformer models and improved entity recognition, allowing for a more natural and fluid conversational experience.
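Maintaining context over multiple turns can be pictured as a small state object that remembers the last intent and any slot values, then fills in whatever a follow-up request leaves out. The sketch below is illustrative only: `DialogueContext` and its fields are hypothetical names, and it assumes the intent and slots have already been extracted by some upstream NLU step.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class DialogueContext:
    """Keeps recent turns and remembered slot values for one conversation."""

    max_turns: int = 10                          # assumed to be positive
    turns: list = field(default_factory=list)    # (speaker, text) pairs
    slots: dict = field(default_factory=dict)    # e.g. {"city": "Paris"}
    last_intent: Optional[str] = None

    def add_turn(self, speaker: str, text: str) -> None:
        self.turns.append((speaker, text))
        # Keep only the most recent max_turns entries.
        self.turns = self.turns[-self.max_turns:]

    def resolve(self, intent: Optional[str], slots: dict) -> dict:
        """Fill in whatever the current turn left out from earlier turns."""
        if intent is not None:
            self.last_intent = intent
        self.slots.update(slots)
        return {"intent": self.last_intent, "slots": dict(self.slots)}


ctx = DialogueContext()

# Turn 1: a complete request.
ctx.add_turn("user", "What's the weather in Paris?")
first = ctx.resolve("get_weather", {"city": "Paris"})

# Turn 2: a follow-up with no explicit intent or city.
ctx.add_turn("user", "And tomorrow?")
second = ctx.resolve(None, {"date": "tomorrow"})
```

After the second turn, `second` carries the `get_weather` intent and the city from the first turn alongside the new date, which is the behavior the follow-up question relies on.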

Multilingual Support and Customization

OpenAI's commitment to making voice assistants globally accessible is reflected in its focus on multilingual support and customization.

- Support for a wider range of languages: Broader language coverage opens voice assistant development to a global audience.

- Simplified process for adding support for new languages: OpenAI is likely to provide tools and resources that make adding a new language easier.

- Tools for customizing the personality and voice of the assistant: Customization lets developers create unique, branded experiences.

Multilingual support is critical for reaching a wider audience, and the ability to customize the personality allows for greater brand consistency and user engagement.
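One way to picture language support combined with a custom personality is a persona definition that holds per-locale text and falls back from a regional tag to the base language to a default. This is a sketch under stated assumptions: `Persona`, its fields, and the voice label are hypothetical and do not correspond to any published configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Persona:
    name: str
    voice: str                 # hypothetical voice label, not a real voice ID
    greetings: dict            # locale tag -> greeting text
    default_locale: str = "en"

    def greeting(self, locale: str) -> str:
        # Try the exact tag, then the bare language, then the default.
        for candidate in (locale, locale.split("-")[0], self.default_locale):
            if candidate in self.greetings:
                return self.greetings[candidate]
        raise KeyError(f"no greeting for {locale!r} or the default locale")


persona = Persona(
    name="Aria",
    voice="warm-female-1",
    greetings={
        "en": "Hi, I'm Aria. How can I help?",
        "pt": "Olá, eu sou a Aria. Como posso ajudar?",
    },
)
```

With this layout, adding a language means adding one entry to `greetings`; a request for `pt-BR` falls back to `pt`, and an unsupported locale falls back to English.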

Integration with Existing Platforms and Services

Seamless integration with existing platforms and services is key to the success of any voice assistant. OpenAI aims to make this process as straightforward as possible.

Seamless Integration with Popular Platforms

OpenAI is expected to simplify integrating voice assistants with popular platforms.

- SDKs and libraries for easy integration: Expect readily available SDKs and libraries for iOS, Android, and other popular platforms.

- Pre-built integrations with popular cloud services: These will streamline the deployment and management of voice assistants.

- Compatibility with various hardware platforms: Broad hardware compatibility will allow developers to deploy their voice assistants on a variety of devices.

This ease of integration saves developers significant time and effort, allowing them to focus on the core functionality of their voice assistant.

Support for Third-Party Services and APIs

The ability to incorporate features from third-party services is crucial for creating rich and functional voice assistants.

- Simplified integration with payment gateways, calendar applications, and other services: OpenAI will likely provide tools and documentation to simplify the integration process.

- Robust documentation and support for developers: Comprehensive documentation and support will help developers integrate third-party services smoothly.

By easily integrating third-party services, developers can create voice assistants with a wider range of functionalities, enhancing the overall user experience.
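A common pattern for plugging third-party services into an assistant is a registry that maps each recognized intent to a handler function. The sketch below shows the idea with a stand-in calendar handler. `ServiceRegistry`, `add_event`, and the intent names are hypothetical, and the handler only formats a string where a real one would call the calendar service.

```python
from typing import Callable, Dict


class ServiceRegistry:
    """Maps intent names to handler functions for third-party services."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[..., str]] = {}

    def register(self, intent: str):
        # Used as a decorator: @registry.register("intent_name")
        def decorator(fn: Callable[..., str]) -> Callable[..., str]:
            self._handlers[intent] = fn
            return fn
        return decorator

    def dispatch(self, intent: str, **slots) -> str:
        handler = self._handlers.get(intent)
        if handler is None:
            return "Sorry, I can't help with that yet."
        return handler(**slots)


registry = ServiceRegistry()


@registry.register("add_event")
def add_event(title: str, when: str) -> str:
    # A real handler would call a calendar service here (assumption).
    return f"Added '{title}' for {when}."


reply = registry.dispatch("add_event", title="Dentist", when="Friday 3pm")
```

Supporting a new service then means registering one more handler; the dispatch code and the rest of the assistant stay unchanged, and unknown intents get a fallback reply rather than an exception.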

Conclusion

OpenAI's 2024 developer event is poised to lower the barrier to entry for voice assistant creation. The streamlined tools, enhanced NLU capabilities, and improved platform integrations should help developers build more sophisticated and user-friendly voice assistants faster than before. To learn more about these advancements and how to apply them to your own voice assistant projects, follow the OpenAI 2024 developer event.

Featured Posts

-

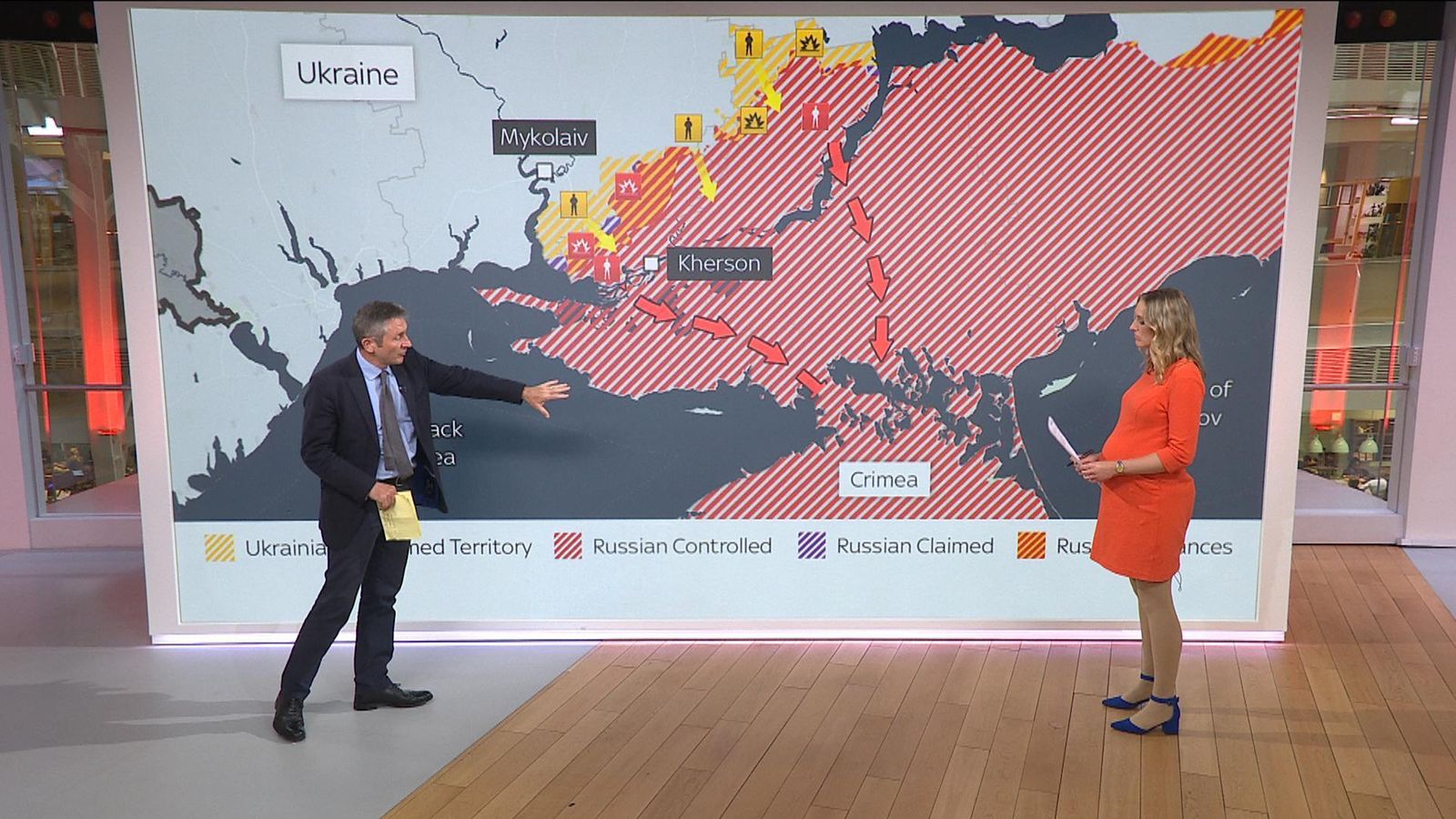

Russias Renewed Offensive Ukraine Faces Deadly Aerial Attacks Us Pushes For Peace

Apr 22, 2025

Russias Renewed Offensive Ukraine Faces Deadly Aerial Attacks Us Pushes For Peace

Apr 22, 2025 -

The China Factor Obstacles And Opportunities For Bmw Porsche And Other Automakers

Apr 22, 2025

The China Factor Obstacles And Opportunities For Bmw Porsche And Other Automakers

Apr 22, 2025 -

Russias Easter Truce Ends Renewed Fighting In Ukraine

Apr 22, 2025

Russias Easter Truce Ends Renewed Fighting In Ukraine

Apr 22, 2025 -

Anchor Brewing Companys Closure A Legacy In Beer Comes To An End

Apr 22, 2025

Anchor Brewing Companys Closure A Legacy In Beer Comes To An End

Apr 22, 2025 -

Ryujinx Emulator Shuts Down Following Nintendo Contact

Apr 22, 2025

Ryujinx Emulator Shuts Down Following Nintendo Contact

Apr 22, 2025