OpenAI's 2024 Developer Event: Easier Voice Assistant Development

Simplified APIs for Seamless Integration

OpenAI's 2024 event put a strong emphasis on simplifying the APIs that voice assistant development depends on. Simpler APIs make complex functionality easier to integrate, which shortens development cycles and tends to produce more robust applications.

Streamlined Natural Language Processing (NLP)

OpenAI showcased new APIs designed for easier NLP integration, dramatically reducing the complexity of building conversational AI. These advancements translate to more intuitive and responsive voice assistants.

- Improved accuracy in speech-to-text transcription: The new APIs are presented as considerably more accurate, even in noisy environments or with diverse accents. Fewer transcription errors mean less error-correction logic for developers to write and maintain.

- Enhanced natural language understanding for more context-aware responses: The enhanced NLP capabilities allow voice assistants to understand context better, leading to more natural and relevant conversations. This is crucial for building truly intelligent voice assistants that can handle complex user requests.

- Simplified API calls for faster development cycles: The calls themselves are easier to understand and implement, so developers spend less time wrestling with integration details and more on the core functionality of their voice assistants.
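To make the flow above concrete, here is a minimal sketch of one conversational turn: transcribe the audio, generate a context-aware reply, and remember both sides of the exchange. The names `VoiceAssistant`, `Transcriber`, `Responder`, and the `fake_*` stand-ins are hypothetical placeholders chosen for illustration, not part of any OpenAI SDK. In a real application the two callables would wrap whichever speech-to-text and language model APIs you use.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical type aliases; these are NOT OpenAI SDK types.
Transcriber = Callable[[bytes], str]                     # audio -> text
Responder = Callable[[List[Tuple[str, str]], str], str]  # (history, user text) -> reply


@dataclass
class VoiceAssistant:
    transcribe: Transcriber
    respond: Responder
    history: List[Tuple[str, str]] = field(default_factory=list)

    def handle_turn(self, audio: bytes) -> str:
        """Run one turn: transcribe, reply using prior context, then remember."""
        user_text = self.transcribe(audio)
        reply = self.respond(self.history, user_text)
        # Keep both sides of the exchange so later turns can use the context.
        self.history.append(("user", user_text))
        self.history.append(("assistant", reply))
        return reply


# Stand-in implementations so the sketch runs without network access.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_respond(history: List[Tuple[str, str]], user_text: str) -> str:
    turn_number = len(history) // 2 + 1
    return f"(turn {turn_number}) You said: {user_text}"


if __name__ == "__main__":
    assistant = VoiceAssistant(fake_transcribe, fake_respond)
    print(assistant.handle_turn(b"what's the weather"))
    print(assistant.handle_turn(b"and tomorrow?"))
```

Passing the transcriber and responder in as plain callables keeps the turn logic independent of any particular provider, which also makes it easy to test with stubs like the ones shown.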

Enhanced Speech Synthesis for Lifelike Interactions

The event highlighted advancements in text-to-speech (TTS) technology, resulting in more natural and expressive voice assistant interactions. This is a key factor in creating user-friendly and engaging voice experiences.

- More realistic intonation and prosody: The improved TTS technology varies pitch, rhythm, and emphasis more naturally, so synthesized speech sounds more human and less robotic.

- Support for multiple languages and accents: Developers can now easily build voice assistants capable of interacting with users in multiple languages and accents, expanding the reach and accessibility of their applications.

- Customization options for voice personality and tone: OpenAI's new tools allow for greater customization of the voice assistant's personality and tone, enabling developers to create unique and engaging voice experiences tailored to their specific application.
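One way to keep voice customization manageable is to collect the settings in a single object and validate them before any speech is synthesized. The sketch below is illustrative only: `VoiceProfile`, its fields (`voice`, `language`, `speed`, `style_hint`), the allowed speed range, and the shape of the resulting request dictionary are all assumptions made for this example, not the parameters of a specific OpenAI endpoint. Check the current API reference for the real names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VoiceProfile:
    """Hypothetical bundle of voice settings; field names are assumptions."""
    voice: str = "default"
    language: str = "en"
    speed: float = 1.0
    style_hint: str = ""  # e.g. "calm and friendly"

    def to_request(self, text: str) -> dict:
        """Build a request payload for a TTS backend (the shape is illustrative)."""
        if not text.strip():
            raise ValueError("text must not be empty")
        # The 0.5 to 2.0 range is an arbitrary limit chosen for this sketch.
        if not 0.5 <= self.speed <= 2.0:
            raise ValueError("speed must be between 0.5 and 2.0")
        request = {
            "input": text,
            "voice": self.voice,
            "language": self.language,
            "speed": self.speed,
        }
        if self.style_hint:
            request["style_hint"] = self.style_hint
        return request


if __name__ == "__main__":
    support_voice = VoiceProfile(voice="warm", language="es", speed=1.1,
                                 style_hint="calm and friendly")
    print(support_voice.to_request("Hola, ¿en qué puedo ayudarte?"))
```

Making the profile immutable (`frozen=True`) means one profile can be shared safely across requests, and a second profile for another language or tone is just another instance.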

Powerful New Tools for Voice Assistant Development

Beyond API improvements, OpenAI introduced several powerful new tools specifically designed to accelerate voice assistant development. These tools significantly lower the barrier to entry for developers of all skill levels.

Pre-trained Models and Templates

OpenAI unveiled pre-trained models and customizable templates to drastically accelerate the development process. This reduces the need for extensive coding from scratch, empowering developers to focus on innovation rather than repetitive tasks.

- Faster prototyping and iteration: Pre-trained models allow developers to quickly prototype and iterate on their voice assistant designs, accelerating the development cycle and enabling rapid experimentation.

- Reduced development costs and time-to-market: By leveraging pre-trained models and templates, developers can significantly reduce their development costs and time-to-market, making their applications more competitive.

- Access to cutting-edge AI models without in-depth AI expertise: Even developers without deep AI expertise can now access and utilize cutting-edge AI models, broadening the pool of individuals capable of developing sophisticated voice assistants.

Improved Debugging and Monitoring Tools

New debugging and monitoring tools were presented to streamline the testing and optimization of voice assistants. These tools provide valuable insights into the performance of voice assistants, allowing for quicker problem solving.

- Real-time feedback on performance metrics: Live key performance indicators (KPIs), such as response latency or error rate, let developers spot and address issues as they arise.

- Simplified identification and resolution of errors: The improved debugging tools simplify the process of identifying and resolving errors, speeding up the development process.

- Improved user experience through better error handling: Better error handling leads to a more seamless and positive user experience, which is crucial for the success of any voice assistant.
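The ideas in this list, live metrics plus graceful error handling, can also be approximated in application code. The following is a minimal sketch, not a description of OpenAI's tooling: `TurnMetrics`, `monitored`, and `flaky_handler` are hypothetical names introduced here. The wrapper times every turn, counts failures, and returns a spoken fallback instead of letting an exception reach the user.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class TurnMetrics:
    """Hypothetical in-memory metrics store for this sketch."""
    latencies_ms: List[float] = field(default_factory=list)
    errors: int = 0

    @property
    def error_rate(self) -> float:
        total = len(self.latencies_ms)  # one latency entry is recorded per turn
        return self.errors / total if total else 0.0


def monitored(handler: Callable[[str], str], metrics: TurnMetrics,
              fallback: str = "Sorry, something went wrong. Please try again."
              ) -> Callable[[str], str]:
    """Wrap a turn handler so each call is timed and failures yield a fallback."""
    def wrapper(user_text: str) -> str:
        start = time.perf_counter()
        try:
            return handler(user_text)
        except Exception:
            # Count the failure and answer gracefully instead of crashing.
            metrics.errors += 1
            return fallback
        finally:
            metrics.latencies_ms.append((time.perf_counter() - start) * 1000)
    return wrapper


def flaky_handler(user_text: str) -> str:
    """Stand-in handler that fails on empty input."""
    if not user_text:
        raise ValueError("empty input")
    return f"Echo: {user_text}"


if __name__ == "__main__":
    metrics = TurnMetrics()
    handle = monitored(flaky_handler, metrics)
    print(handle("hello"))
    print(handle(""))
    print(f"error rate: {metrics.error_rate:.2f}")
```

In production you would likely export these numbers to a monitoring system rather than keep them in memory, and catch narrower exception types than `Exception`.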

Expanding the Ecosystem for Voice Assistant Development

OpenAI is actively fostering a vibrant community around voice assistant development. This collaborative environment is vital for continued innovation and knowledge sharing.

Enhanced Community Support and Documentation

OpenAI emphasized strengthening its community resources, including improved documentation and expanded support forums for voice assistant developers. This is essential for ensuring the success and growth of the entire ecosystem.

- Easier access to knowledge and expertise: Improved documentation and readily available resources make it easier for developers to find answers to their questions and access valuable expertise.

- Facilitated collaboration among developers: OpenAI is promoting collaboration among developers through online forums and communities, fostering knowledge sharing and accelerating innovation.

- Faster problem-solving: With stronger community support, developers can resolve issues more quickly, which benefits the entire voice assistant development community.

Conclusion

OpenAI's 2024 developer event marks a meaningful step forward for voice assistant development, offering streamlined APIs, powerful new tools, and strengthened community support. The advancements in NLP, speech synthesis, and development resources promise to make sophisticated voice assistants accessible to a wider range of developers. These improvements are likely to accelerate innovation and lead to a new generation of more intuitive, user-friendly voice-activated applications. Ready to bring easier voice assistant development to your own projects? Explore OpenAI's new tools and resources and start building your own voice experiences.

Featured Posts

-

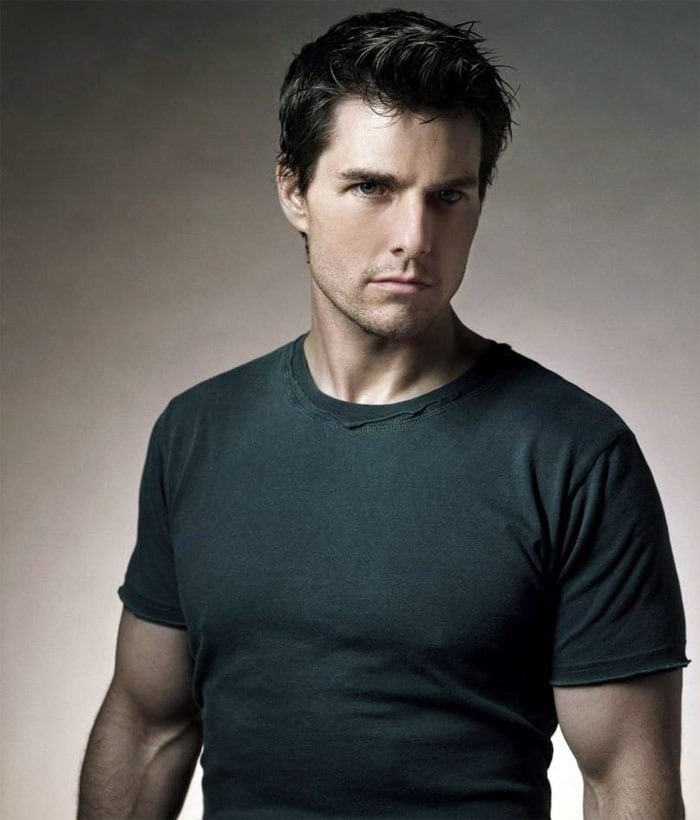

Tom Cruise Still Owes Tom Hanks 1 Will He Ever Pay Up

May 11, 2025

Tom Cruise Still Owes Tom Hanks 1 Will He Ever Pay Up

May 11, 2025 -

Exportaciones Ganaderas Uruguayas El Inusual Regalo A China Que Podria Cambiar Todo

May 11, 2025

Exportaciones Ganaderas Uruguayas El Inusual Regalo A China Que Podria Cambiar Todo

May 11, 2025 -

District Final Archbishop Bergan Triumphs Over Norfolk Catholic

May 11, 2025

District Final Archbishop Bergan Triumphs Over Norfolk Catholic

May 11, 2025 -

Nba Sixth Man Of The Year Payton Pritchard Makes History For The Celtics

May 11, 2025

Nba Sixth Man Of The Year Payton Pritchard Makes History For The Celtics

May 11, 2025 -

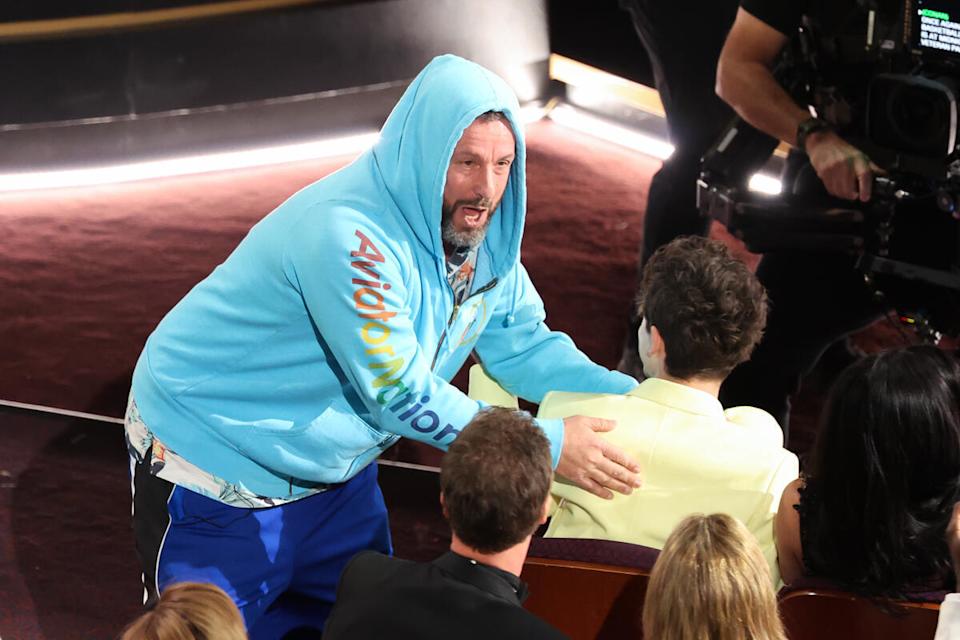

Oscars 2025 Adam Sandlers Unexpected Cameo Outfit Joke And Chalamet Interaction Explained

May 11, 2025

Oscars 2025 Adam Sandlers Unexpected Cameo Outfit Joke And Chalamet Interaction Explained

May 11, 2025