Responsible AI: Addressing the Limitations of AI Learning


Data Bias and its Impact on AI Learning

Data bias is a pervasive problem in AI, significantly affecting the fairness and accuracy of AI systems. Here, bias means systematic skew in the data used to train a model. This skew often reflects existing societal prejudices, and the model can then reproduce or even amplify it. These biases can stem from several sources and lead to unfair or discriminatory outcomes. For example, facial recognition systems trained on datasets lacking diverse representation often perform poorly on individuals with darker skin tones, and biased loan-approval algorithms might disproportionately deny credit to certain demographic groups.

- Insufficient representation of diverse groups in datasets: Many datasets used to train AI models underrepresent certain demographics, leading to algorithms that perform poorly or unfairly for those underrepresented groups. This lack of diversity directly contributes to biased outcomes.

- Historical biases embedded in data sources: Data often reflects historical societal biases, perpetuating inequalities. For instance, datasets reflecting historical hiring practices might contain biases that lead to discriminatory AI recruitment tools.

- Algorithmic amplification of existing biases: Even when data appears balanced, a model can amplify subtle patterns in it. For example, it might rely on proxy features, such as a postal code, that correlate with a protected attribute like race. Small skews can then become significant discriminatory effects, which is why algorithm design and testing require careful attention.
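A practical first check for the first point above, insufficient representation, is to compare each group's share of the training data with its share of the population the system is meant to serve. The sketch below assumes those reference shares are already known (for example, from census data). The function names, the 5-percentage-point tolerance, and the toy data are hypothetical choices made for illustration.

```python
from collections import Counter

def representation_gaps(groups, reference_shares):
    """Dataset share minus reference (population) share for each group.

    groups: one group label per training example.
    reference_shares: hypothetical mapping of group label -> expected share of
        the population the system will serve (e.g. taken from census data).
    """
    counts = Counter(groups)
    total = sum(counts.values())
    return {
        group: counts.get(group, 0) / total - expected
        for group, expected in reference_shares.items()
    }

def underrepresented(gaps, tolerance=0.05):
    """Groups whose dataset share falls short of the reference by more than tolerance."""
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)

# Toy example: 100 labelled examples drawn from three groups.
labels = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference = {"A": 0.60, "B": 0.25, "C": 0.15}
gaps = representation_gaps(labels, reference)
print({group: round(gap, 2) for group, gap in gaps.items()})
print(underrepresented(gaps))  # ['B', 'C']
```

Groups B and C each fall about 10 percentage points short of their expected share. This check only covers representation. It cannot detect historical bias baked into the labels themselves.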

Solutions to mitigate data bias include:

- Data augmentation: Artificially increasing the representation of underrepresented groups in datasets through techniques like data synthesis.

- Bias detection algorithms: Developing algorithms to identify and quantify bias within datasets and models, for example by comparing approval rates or error rates across demographic groups.

- Diverse and representative datasets: Actively curating datasets that accurately reflect the diversity of the population the AI system will interact with.
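To make bias detection and data augmentation more concrete, the following minimal sketch computes per-group approval rates and the ratio of the lowest rate to the highest. A common heuristic, the "four-fifths rule" from US employment-selection guidelines, treats a ratio below 0.8 as a warning sign. The sketch then balances a dataset by random oversampling, which duplicates examples from smaller groups. This is a simpler technique than the data synthesis mentioned above and can cause overfitting to the duplicated rows. All function names and data are hypothetical.

```python
import random
from collections import Counter, defaultdict

def selection_rates(decisions, groups):
    """Share of positive decisions (1 = approved) within each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(decisions, groups):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

def oversample(rows, group_of, seed=0):
    """Randomly duplicate rows from smaller groups until all groups match the largest."""
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for row in rows:
        by_group[group_of(row)].append(row)
    target = max(len(members) for members in by_group.values())
    balanced = []
    for members in by_group.values():
        balanced.extend(members)
        # Draw (with replacement) just enough extra rows to reach the target size.
        balanced.extend(rng.choices(members, k=target - len(members)))
    return balanced

# Toy loan decisions: group A approved 6 of 10 times, group B 3 of 10 times.
decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
groups = ["A"] * 10 + ["B"] * 10
ratio = disparate_impact_ratio(decisions, groups)
print(f"disparate impact ratio: {ratio:.2f}")  # 0.50, below the 0.8 heuristic

# Toy training rows tagged with their group: 8 from A, 2 from B.
rows = [("A", i) for i in range(8)] + [("B", i) for i in range(2)]
balanced = oversample(rows, group_of=lambda row: row[0])
print(Counter(row[0] for row in balanced))  # Counter({'A': 8, 'B': 8})
```

A single ratio is a screening signal, not proof of discrimination. In practice, teams usually look at several metrics together, such as error rates per group.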

The Problem of Explainability in AI (Explainable AI or XAI)

Many AI models, particularly deep learning systems, operate as "black boxes," making it difficult to understand how they arrive at their decisions. This lack of transparency poses significant challenges for trust, accountability, and debugging. Understanding the reasoning behind an AI's prediction is crucial, especially in high-stakes applications like healthcare and finance.

- Lack of transparency hinders trust and accountability: Without understanding how an AI system works, it is difficult to trust its outputs, especially when those outputs have significant consequences.

- Difficult to identify and correct errors or biases in opaque models: If the decision-making process is opaque, identifying and correcting errors or biases becomes extremely challenging.

- Regulatory requirements for explainability in high-stakes applications: Increasingly, regulators are demanding explainability in AI systems used in sensitive areas, requiring developers to provide insight into how their models work.

Solutions to improve explainability include:

- Developing more transparent models: Designing AI models with inherent transparency, allowing for easier understanding of their decision-making processes.

- Incorporating explainable AI techniques (XAI): Utilizing techniques that provide insights into the factors influencing an AI's decisions.

- Providing model summaries: Generating human-readable summaries of the model's behavior and key features.
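One widely used, model-agnostic explainability technique is permutation feature importance. It shuffles one input feature at a time and measures how much the model's accuracy drops. A large drop means the model relies on that feature. The sketch below assumes a classifier exposed as a plain Python callable. The function names and the toy "black box" are hypothetical. Because the method only queries the model's predictions, it works on opaque models as well as transparent ones.

```python
import random

def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the true label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Average drop in accuracy when each feature column is shuffled.

    model: any callable mapping a list of feature values to a label; it is
        treated as a black box, so its internals are never inspected.
    X: list of feature lists; y: list of true labels.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Toy "black box" that, unknown to the user, only looks at feature 0.
def black_box(row):
    return int(row[0] > 0.5)

data_rng = random.Random(1)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [black_box(row) for row in X]
print(permutation_importance(black_box, X, y))
```

Feature 1 scores exactly 0.0 because shuffling it never changes a prediction. Feature 0 scores roughly 0.5, which correctly reveals what the model depends on. For real projects, scikit-learn provides a tested implementation as `sklearn.inspection.permutation_importance`.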

Addressing the Risks of AI-Driven Automation and Job Displacement

AI-driven automation has the potential to transform industries, but it also carries the risk of significant job displacement. This necessitates careful consideration of the economic and social consequences of widespread automation. While automation can increase efficiency and productivity, it's crucial to mitigate the negative impacts on workers.

- Need for retraining and upskilling programs: Investing in programs that help workers acquire the skills needed for jobs in the evolving economy.

- Exploration of alternative economic models to address job displacement: Considering models like universal basic income or job guarantees to address potential widespread unemployment.

- Ethical considerations surrounding the use of automation: Weighing decisions to automate against their effects on workers, particularly worker rights and how fairly automation's gains and costs are distributed.

Solutions to mitigate the risks of job displacement include:

- Investing in education and workforce development: Providing opportunities for workers to acquire new skills and adapt to changing job markets.

- Supporting entrepreneurship and innovation: Fostering the creation of new businesses and industries that can absorb displaced workers.

- Promoting responsible automation strategies: Developing strategies that prioritize human well-being and minimize job losses.

Ensuring Data Privacy and Security in AI Systems

AI systems rely on vast amounts of data, raising significant concerns about privacy and security. Data breaches, misuse of personal information, and privacy violations are serious risks that must be addressed. Protecting user data is paramount for building responsible AI systems.

- Importance of robust data security protocols and encryption: Implementing strong security measures, such as access controls and encryption of data at rest and in transit, to protect data from unauthorized access and breaches.

- Adherence to data privacy regulations (e.g., GDPR, CCPA): Complying with relevant data privacy regulations to ensure responsible data handling.

- Implementing anonymization and data minimization techniques: Collecting only the data a task actually requires and removing or masking details that identify individuals, so that privacy is protected while the data remains useful for analysis.
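The following minimal sketch combines the minimization and anonymization ideas above. It drops fields the analysis does not need. It replaces the direct identifier with a keyed hash (HMAC-SHA256) and coarsens quasi-identifiers such as age and ZIP code. Finally, it checks k-anonymity, which requires that every combination of quasi-identifier values be shared by at least k records. The field names, allowed-field list, and key are hypothetical. Keyed hashing is pseudonymization rather than full anonymization: anyone holding the key can re-link records, and under GDPR pseudonymized data is still treated as personal data.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical schema: only these fields are needed for the analysis.
ALLOWED_FIELDS = {"user_id", "age", "zip_code", "outcome"}

def minimize(record):
    """Data minimization: keep only the fields the analysis needs."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}

def pseudonymize(record, secret_key):
    """Replace the identifier with a keyed hash and coarsen quasi-identifiers."""
    out = dict(record)
    out["user_id"] = hmac.new(
        secret_key, str(record["user_id"]).encode(), hashlib.sha256
    ).hexdigest()
    decade = record["age"] // 10 * 10
    out["age"] = f"{decade}-{decade + 9}"               # exact age -> 10-year band
    out["zip_code"] = str(record["zip_code"])[:3] + "**"  # keep only the ZIP prefix
    return out

def is_k_anonymous(records, quasi_identifiers, k):
    """True if every combination of quasi-identifier values occurs at least k times."""
    counts = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return all(count >= k for count in counts.values())

raw_records = [
    {"user_id": 101, "name": "Ana", "email": "ana@example.com",
     "age": 34, "zip_code": "94110", "outcome": 1},
    {"user_id": 102, "name": "Ben", "email": "ben@example.com",
     "age": 37, "zip_code": "94117", "outcome": 0},
]
key = b"load-this-from-a-secrets-manager"  # placeholder; never hard-code real keys
clean = [pseudonymize(minimize(r), key) for r in raw_records]
print(clean[0]["age"], clean[0]["zip_code"], "name" in clean[0])  # 30-39 941** False
print(is_k_anonymous(clean, ["age", "zip_code"], k=2))  # True
```

A keyed hash is used instead of a plain hash because identifiers such as user IDs are easy to enumerate. Without the secret key, an attacker cannot rebuild the mapping by hashing every possible ID.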

Solutions to ensure data privacy and security include:

- Strengthening data governance frameworks: Developing robust frameworks for managing and protecting data throughout its lifecycle.

- Investing in cybersecurity measures: Implementing advanced security technologies and practices to protect data from cyber threats.

- Promoting responsible data handling practices: Educating individuals and organizations on responsible data handling and privacy protection.

Conclusion

The development and deployment of AI systems require a careful and responsible approach. Addressing the limitations of AI learning, including data bias, explainability challenges, job displacement risks, and data privacy concerns, is not merely a technical issue but a societal imperative. By proactively mitigating these risks and building transparency, accountability, and ethical considerations into every stage of the AI lifecycle, we can unlock the transformative potential of AI while minimizing its harms. Let's work together to build a future where responsible AI drives progress for the benefit of all.

Featured Posts

-

Is Miley Cyrus Cutting Ties With Billy Ray Cyrus A Look At The Family Conflict

May 31, 2025

Is Miley Cyrus Cutting Ties With Billy Ray Cyrus A Look At The Family Conflict

May 31, 2025 -

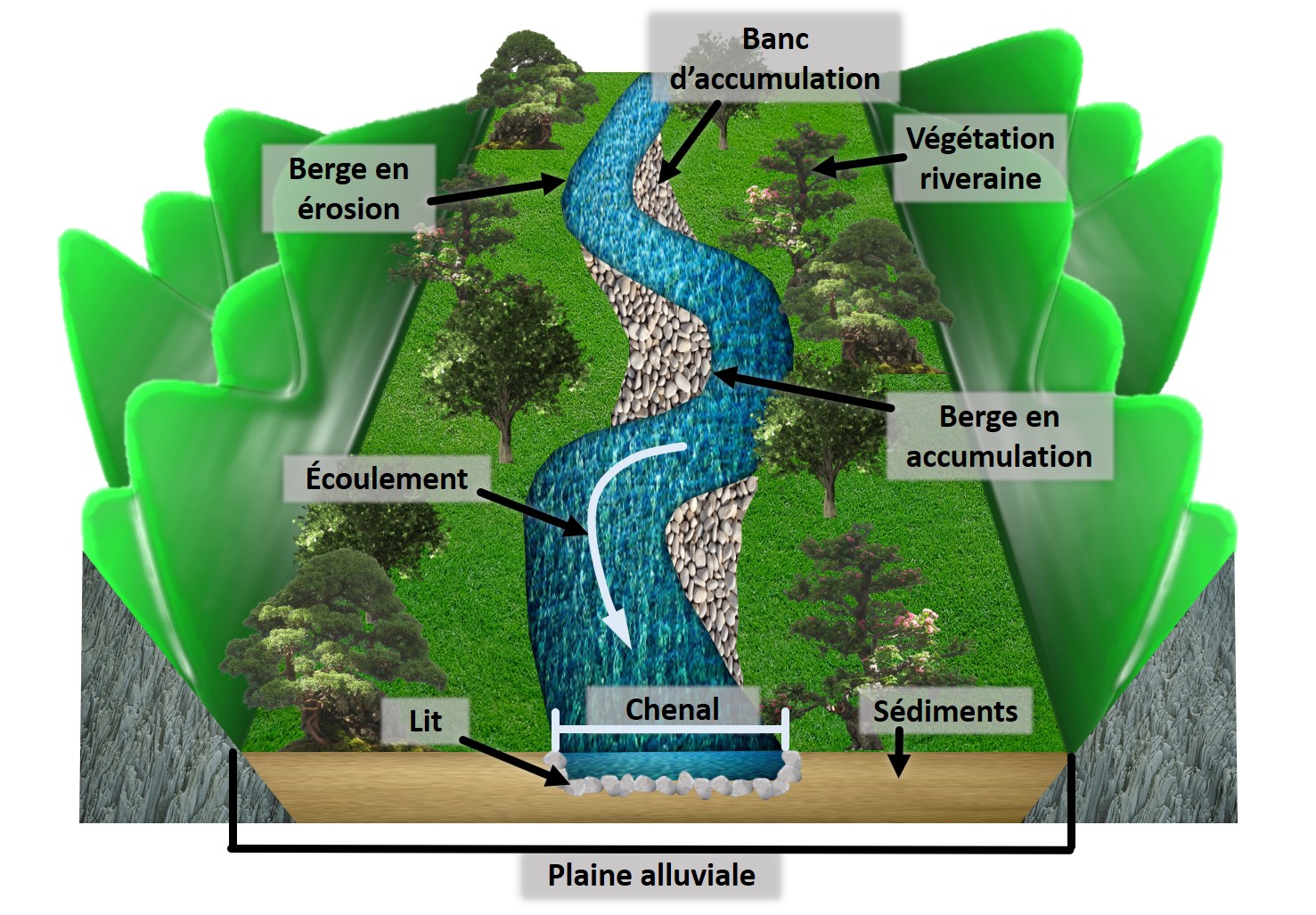

Etude De L Ingenierie Castor Dans Deux Cours D Eau De La Drome

May 31, 2025

Etude De L Ingenierie Castor Dans Deux Cours D Eau De La Drome

May 31, 2025 -

Everything You Need To Know About The Jn 1 Covid 19 Variant

May 31, 2025

Everything You Need To Know About The Jn 1 Covid 19 Variant

May 31, 2025 -

Your Guide To Buying Glastonbury Resale Tickets Timing Cost And Tips

May 31, 2025

Your Guide To Buying Glastonbury Resale Tickets Timing Cost And Tips

May 31, 2025 -

Dragons Den Investment Fact Vs Fiction

May 31, 2025

Dragons Den Investment Fact Vs Fiction

May 31, 2025