The Limits Of AI Learning: Practical Implications For Responsible AI Use

Data Bias and its Impact on AI Performance

AI models learn from data. If that data is biased, the resulting system tends to reflect those biases and can amplify them. This is a critical limit of AI learning: biased training data leads to biased outputs, which can perpetuate or worsen existing societal inequalities.

For example, facial recognition systems have shown markedly higher error rates for people of color, and misidentifications have contributed to wrongful arrests. Similarly, AI-powered loan approval systems may discriminate against certain demographic groups, while AI-driven hiring processes can perpetuate gender or racial biases.

- Insufficient or unrepresentative datasets: If the data used to train an AI model doesn't accurately represent the real-world population, the model will be unable to generalize effectively and will likely produce biased results.

- Amplification of existing societal biases: AI systems can pick up the biases present in their training data and reinforce them at scale, leading to discriminatory outcomes.

- Consequences of biased AI decisions: Biased AI decisions can lead to unfair treatment, discrimination, and a perpetuation of existing inequalities. This affects access to opportunities and resources, impacting individuals and communities disproportionately.

- Mitigation strategies: Addressing data bias requires proactive measures such as collecting or augmenting data so that underrepresented groups are better covered, using bias detection methods to find skew in datasets and model outputs, and verifying that the datasets used for training are diverse and representative.
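One simple form of bias detection is to compare how often each group receives a favorable decision. The sketch below is a minimal illustration, not a complete fairness audit: the group labels "A" and "B", the toy decisions, and the function names are all hypothetical, and the gap it computes (often called the demographic parity difference) is only one of several possible fairness measures.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of favorable decisions per group.

    `records` is an iterable of (group, decision) pairs,
    where decision is 1 (favorable) or 0 (unfavorable).
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_gap(records):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())

# Hypothetical toy data: (group, loan approved?)
decisions = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = selection_rates(decisions)
gap = demographic_parity_gap(decisions)
print(rates)  # group A is approved 3 times out of 4, group B 1 time out of 4
print(gap)
```

A gap near zero means the groups receive favorable decisions at similar rates. A large gap does not prove discrimination on its own, but it is a signal that the data or the model deserves a closer look.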

The Problem of Explainability in AI (Black Box Problem)

Many AI systems, particularly deep learning models, operate as "black boxes." This means it's difficult or impossible to understand how they arrive at their conclusions. This lack of transparency poses significant challenges for trust, accountability, and debugging. It represents a core limitation of AI learning that needs to be addressed.

The inability to understand an AI's decision-making process has serious implications. It makes it challenging to identify and correct errors, hindering improvements in model accuracy and reliability. Furthermore, this opacity can impact regulatory compliance and legal liability, making it difficult to determine responsibility when an AI system makes a mistake.

- Challenges in interpreting complex AI models: Understanding the internal workings of complex AI models, especially deep neural networks, is often a significant hurdle.

- Difficulty in identifying and correcting errors: Without understanding the reasoning behind an AI's decision, identifying and correcting errors is a laborious, time-consuming process.

- Impact on regulatory compliance and legal liability: The lack of explainability can make it difficult to meet regulatory requirements and determine liability in cases of AI-related harm.

- Approaches to improve explainability: Researchers are actively developing techniques for Explainable AI (XAI), such as feature importance analysis, to shed light on the decision-making processes of AI systems.
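Feature importance analysis can be illustrated with permutation importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The following is a minimal sketch using only the standard library. The `black_box` function is a hypothetical stand-in for an opaque model, and the data is synthetic.

```python
import random

def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild the dataset with only column j shuffled.
            X_perm = [row[:j] + [value] + row[j + 1:]
                      for row, value in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

# Hypothetical "black box": we pretend not to know it ignores feature 1.
def black_box(row):
    return int(row[0] > 0.5)

data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(200)]
y = [black_box(row) for row in X]

scores = permutation_importance(black_box, X, y)
for index, score in enumerate(scores):
    print(f"feature {index}: importance {score:.3f}")
```

Here shuffling feature 0 hurts accuracy noticeably, while shuffling feature 1 changes nothing, which reveals what the model actually relies on without opening it up. Real XAI tooling is more sophisticated, but the underlying idea is the same.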

Generalization and the Limits of Transfer Learning

Generalization – the ability of an AI model to perform well on unseen data – is crucial for its practical application. However, training AI models that generalize well across different contexts and domains remains a significant challenge. This is another key limitation of AI learning.

Overfitting, where a model performs well on training data but poorly on new data, is a common problem. Transfer learning, the process of adapting a model trained on one task to another, can be helpful but has its limitations. It doesn't always work effectively, especially when the source and target domains are significantly different.

- Overfitting to training data: Models that overfit memorize the noise and quirks of the training data rather than the underlying pattern, so they fail to generalize to new, unseen data.

- Difficulty in adapting models to new scenarios: AI models often struggle to adapt to new contexts or situations that differ significantly from their training data.

- Limitations of transfer learning: While transfer learning can be effective, it's not a guaranteed solution and its success depends heavily on the similarity between the source and target domains.

- Strategies for improving generalization: Techniques like data augmentation, regularization (methods that penalize model complexity to reduce overfitting), and the development of robust model architectures can help improve generalization capabilities.
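Overfitting can be made concrete by comparing accuracy on training data with accuracy on held-out data. The sketch below uses a tiny nearest-neighbor classifier on synthetic data with deliberately noisy labels. Everything here is an illustrative assumption: the data generator, the 20% noise level, and the choice of `k`. Raising `k` smooths the model's decisions, which plays a role loosely similar to regularization, though it is not the same technique.

```python
import random

def knn_predict(train, point, k):
    """Majority vote among the k nearest training points (1-D feature)."""
    nearest = sorted(train, key=lambda item: abs(item[0] - point))[:k]
    votes = sum(label for _, label in nearest)
    return int(votes * 2 > k)

def accuracy(train, data, k):
    """Fraction of points in `data` that the k-NN model labels correctly."""
    return sum(knn_predict(train, x, k) == y for x, y in data) / len(data)

def make_data(n, rng, noise=0.2):
    """True rule: label is 1 when x > 0.5; a `noise` fraction is flipped."""
    data = []
    for _ in range(n):
        x = rng.random()
        label = int(x > 0.5)
        if rng.random() < noise:
            label = 1 - label
        data.append((x, label))
    return data

rng = random.Random(0)
train = make_data(200, rng)
test = make_data(200, rng)

for k in (1, 15):
    train_acc = accuracy(train, train, k)
    test_acc = accuracy(train, test, k)
    print(f"k={k}: train={train_acc:.2f} test={test_acc:.2f} "
          f"gap={train_acc - test_acc:.2f}")
```

With `k=1` the model memorizes every training point, noise included, so it scores perfectly on training data but worse on held-out data. The size of that gap is a practical warning sign of overfitting.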

The Limitations of Current AI in Handling Nuance and Context

Current AI systems often struggle with nuanced language, context, and common sense reasoning. They frequently misinterpret sarcasm, irony, and figurative language, leading to inaccurate or nonsensical results. This lack of contextual understanding highlights a significant limitation of current AI learning.

For instance, an AI chatbot might fail to understand the intended meaning of a sarcastic remark, leading to an inappropriate or unhelpful response. Similarly, AI systems can struggle with tasks requiring common sense reasoning or an understanding of implicit information.

- Difficulties with sarcasm, irony, and figurative language: Understanding the subtleties of human language, including figures of speech, remains a major challenge for AI.

- Challenges in handling ambiguity and uncertainty: AI systems often struggle with ambiguous situations or uncertain information, leading to incorrect or incomplete interpretations.

- Need for better integration of common sense knowledge into AI systems: Integrating common sense knowledge into AI systems is a crucial step towards improving their ability to handle nuanced situations and understand context.

Conclusion: Responsible AI Development and Deployment

The limits of AI learning – data bias, lack of explainability, generalization challenges, and difficulties with nuance and context – are significant factors to consider when developing and deploying AI systems. Understanding these limitations is not merely an academic exercise; it's crucial for responsible AI development and deployment. Deploying AI systems without acknowledging and addressing these limitations has ethical implications, potentially leading to unfair or discriminatory outcomes.

To ensure the ethical and effective use of AI, we must prioritize transparency, fairness, and accountability. This includes focusing on diverse and representative datasets, developing more explainable AI models, and striving to create AI systems that can handle nuance and context more effectively. We must continue to push the boundaries of AI research, with a focus on addressing the limits of AI learning. Let's work together to create a future where AI benefits everyone.

Featured Posts

-

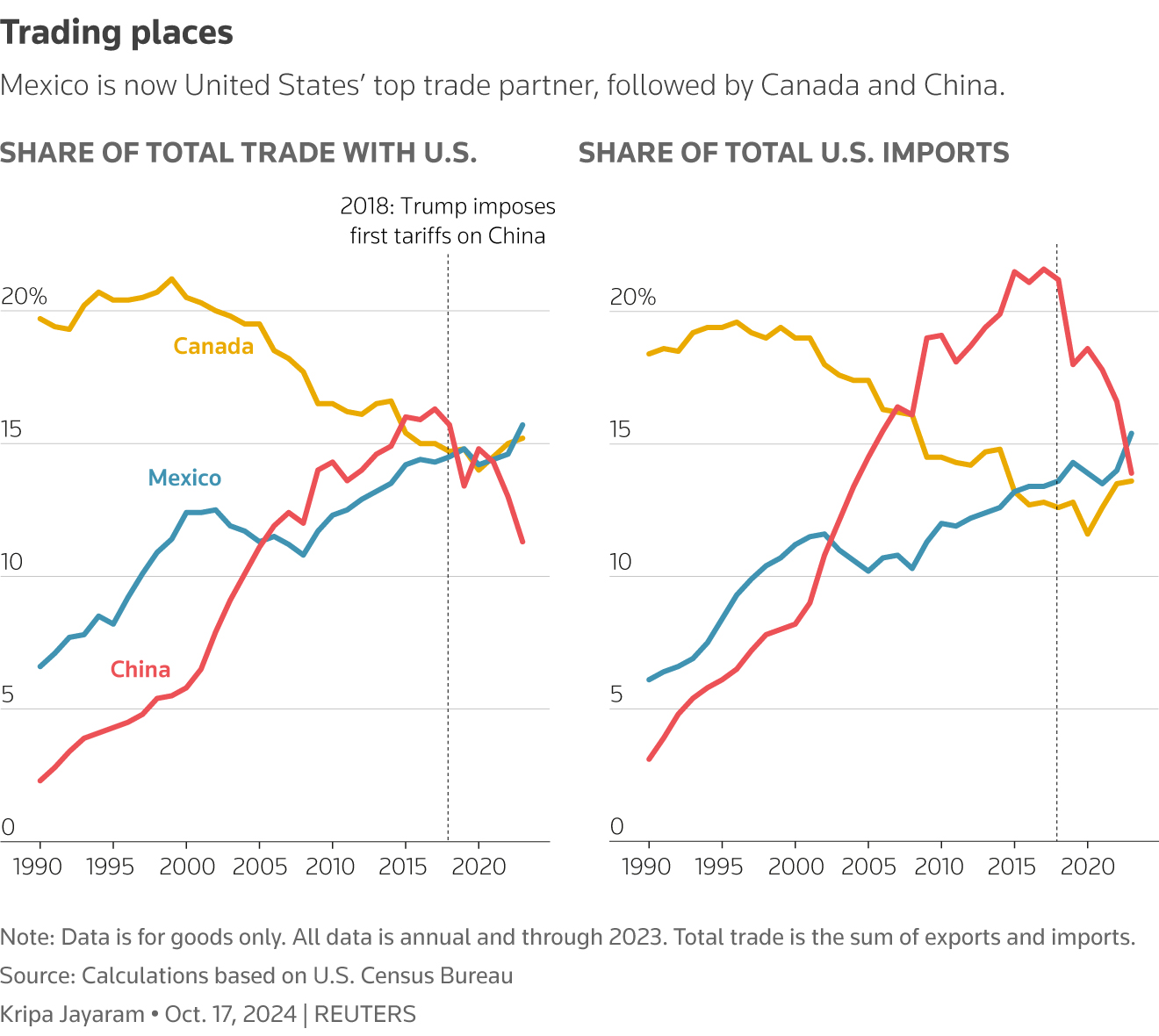

Posthaste Deciphering The Global Implications Of The Recent Tariff Decision For Canada

May 31, 2025

Posthaste Deciphering The Global Implications Of The Recent Tariff Decision For Canada

May 31, 2025 -

Table Tennis Worlds Wang Sun Secure Third Straight Mixed Doubles Gold

May 31, 2025

Table Tennis Worlds Wang Sun Secure Third Straight Mixed Doubles Gold

May 31, 2025 -

Arcachon Le Tip Top One Un Embleme Du Bassin Apres 22 Ans

May 31, 2025

Arcachon Le Tip Top One Un Embleme Du Bassin Apres 22 Ans

May 31, 2025 -

Canadian Wildfires Minnesota Air Quality Plummets

May 31, 2025

Canadian Wildfires Minnesota Air Quality Plummets

May 31, 2025 -

Showers Expected In Northeast Ohio On Election Day

May 31, 2025

Showers Expected In Northeast Ohio On Election Day

May 31, 2025