The Reality Of AI Learning: Navigating The Ethical Challenges

Bias and Discrimination in AI Learning

AI systems learn from data. If that data reflects existing societal biases, the resulting system is likely to reproduce those biases and can even amplify them. This is one of the central ethical challenges in AI learning.

Algorithmic Bias

Algorithmic bias refers to systematic and repeatable errors in a computer system that create unfair outcomes, such as discrimination or prejudice, due to biases in the data or the algorithms themselves. This bias can manifest in various ways:

- Loan applications: AI systems used to assess loan applications might unfairly deny loans to applicants from certain demographic groups due to biased historical data.

- Facial recognition: Several facial recognition systems have been shown to produce higher error rates for individuals with darker skin tones.

- Hiring processes: AI-powered recruitment tools might inadvertently discriminate against candidates based on gender, race, or other protected characteristics.

The problem often stems from the data used to train these algorithms. If the training data does not represent the diversity of the population, the resulting AI system will likely reflect, and may amplify, the biases present in that data. Reducing bias therefore starts with diverse, representative datasets, and fairness has to be measured rather than assumed.
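One way to make unfair outcomes visible is to compare how often each group receives a favorable decision. The sketch below is a minimal illustration, not a complete fairness audit: the function names, the group labels "A" and "B", and the loan decisions are all hypothetical, and the two metrics shown (demographic parity gap and disparate impact ratio) are only two of several competing fairness definitions.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of favorable decisions (1 = approved) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(rates):
    """Largest difference in selection rate between any two groups."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical loan decisions: 1 = approved, 0 = denied.
decisions = [1, 1, 1, 0, 1, 0, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
print(rates)                          # approval rate per group
print(demographic_parity_gap(rates))  # 0 would mean equal approval rates
print(disparate_impact_ratio(rates))  # 1.0 would mean equal approval rates
```

In this made-up data, group A is approved four times out of five and group B once out of five, so the ratio is far below 1.0. A ratio under 0.8 is sometimes used as a rough warning threshold (the "four-fifths rule" from US employment guidance), but a single number like this should prompt investigation, not serve as proof of discrimination.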

Addressing Bias in AI Development

Mitigating bias in AI requires a multi-pronged approach encompassing various strategies throughout the AI development lifecycle:

- Fairness-aware algorithms: Developing algorithms specifically designed to minimize bias and ensure fair outcomes.

- Data augmentation: Adding or synthesizing examples for underrepresented groups so the dataset is better balanced and bias is reduced.

- Ongoing monitoring: Continuously monitoring AI systems for bias and adjusting them as needed.

Human oversight remains essential. Ethical guidelines and rigorous testing procedures help ensure that AI systems are developed and deployed responsibly. Because data and usage change over time, addressing AI bias is a continuous process rather than a one-time fix.
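When collecting more data for an underrepresented group is not possible, a related and simpler technique is reweighting: each training example gets a weight inversely proportional to its group's frequency, so every group carries the same total weight. The sketch below assumes a hypothetical `balanced_sample_weights` helper and made-up group labels. It illustrates the balancing idea only, and it is not a substitute for genuinely representative data.

```python
from collections import Counter


def balanced_sample_weights(groups):
    """Weight each example inversely to its group's frequency.

    Every group ends up with the same total weight, so a small group
    is not drowned out by a large one during training.
    """
    counts = Counter(groups)
    n_groups = len(counts)
    n_total = len(groups)
    return [n_total / (n_groups * counts[g]) for g in groups]


# Hypothetical dataset: group "B" is heavily underrepresented.
groups = ["A"] * 8 + ["B"] * 2
weights = balanced_sample_weights(groups)

print(weights[0])   # weight of each "A" example
print(weights[-1])  # weight of each "B" example
```

Many training libraries accept per-example weights of this kind, which makes reweighting easy to try before moving to more involved fairness-aware algorithms.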

Privacy Concerns and Data Security in AI Learning

The power of AI learning is inextricably linked to the vast amounts of data used to train these systems. This data often includes highly sensitive personal information, raising significant privacy and security concerns.

Data Collection and Usage

The collection and use of personal data for AI training raise several ethical questions:

- Informed consent: Do individuals truly understand how their data will be used, and do they have meaningful control over it?

- Data breaches: What measures are in place to protect this sensitive data from unauthorized access or misuse?

- Surveillance: Could the use of AI for data analysis lead to excessive surveillance and erosion of privacy?

Transparency in data practices is essential. Strong data protection regulations, such as the EU's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), are crucial for safeguarding user privacy and ensuring responsible data handling in the age of AI.

Protecting User Privacy in AI Applications

Several techniques can be employed to safeguard user privacy while leveraging the benefits of AI:

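- Data anonymization: Removing or masking identifying information from datasets before use, keeping in mind that combinations of the remaining attributes can sometimes re-identify individuals.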

- Differential privacy: Adding carefully calibrated noise to query results or to the training process, so that the output reveals little about any single individual while preserving overall data utility.

- Federated learning: Training AI models on decentralized data sources without directly transferring the data to a central server.
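To make the idea of "carefully calibrated noise" concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. The function names, the `ages` list, and the choice of `epsilon` are assumptions made for illustration. A production system would also need to track the total privacy budget across queries, which this sketch does not do.

```python
import random


def laplace_noise(scale, rng=random):
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential samples with mean
    `scale` follows a Laplace distribution centred on zero.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=random):
    """Answer "how many values match?" with epsilon-differential privacy.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical records: ages of six individuals.
ages = [23, 35, 41, 52, 67, 29]
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5)
print(noisy)  # true count is 3; the printed value varies from run to run
```

A smaller `epsilon` means more noise and stronger privacy, while a larger `epsilon` gives more accurate answers with weaker guarantees. Choosing that trade-off is a policy decision as much as a technical one.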

Balancing the need for data to train effective AI systems with the fundamental right to privacy is a crucial ethical challenge that demands innovative solutions and a commitment to privacy-preserving AI.
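Federated learning can be illustrated with a toy version of federated averaging. In the sketch below, each client trains on its own data and sends back only a model weight, and the server combines those weights in proportion to each client's dataset size. The one-parameter model `y = weight * x`, the client datasets, the learning rate, and the function names are all assumptions chosen to keep the example small. Real deployments add secure aggregation, client sampling, and other safeguards, because model updates can themselves leak information.

```python
def local_update(weight, data, lr=0.01, epochs=5):
    """Run a few gradient-descent steps on one client's private data.

    The model is y = weight * x with mean squared-error loss.
    """
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight


def federated_average(global_weight, clients, rounds=50):
    """Federated averaging: raw data stays on the clients.

    Each round, every client refines the current global weight locally,
    and the server averages the results weighted by dataset size.
    """
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        updates = [(local_update(global_weight, data), len(data))
                   for data in clients]
        global_weight = sum(w * n for w, n in updates) / total
    return global_weight


# Hypothetical clients, each holding (x, y) pairs generated by y = 2x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(1.5, 3.0), (2.5, 5.0), (0.5, 1.0)],
]

weight = federated_average(0.0, clients)
print(round(weight, 4))  # converges toward the underlying slope of 2
```

The server in this sketch never sees any `(x, y)` pair, only the locally trained weights, which is the property that makes the approach attractive for sensitive data.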

Accountability and Transparency in AI Learning

Many AI systems are so complex that they are often described as "black boxes": it is difficult to understand how they arrive at their decisions. This lack of transparency raises significant concerns about accountability.

Explainability and Interpretability

Understanding how an AI system reaches a conclusion is vital for trust and accountability. The challenge lies in interpreting complex AI models:

- "Black box" problem: Many advanced AI models, like deep neural networks, are opaque, making it difficult to understand their internal workings.

Explainable AI (XAI) techniques aim to address this challenge by making AI models more interpretable and transparent. This is crucial for building trust and ensuring that AI systems are used responsibly.
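One simple, model-agnostic XAI technique is permutation importance: shuffle one input feature at a time and measure how much the model's accuracy drops. The sketch below treats the model as a black box and uses only its predictions. The toy `model`, the random data, and the function names are hypothetical, and the technique gives a global view of feature influence, not an explanation of any individual decision.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model predicts the given label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_features, repeats=20, seed=0):
    """Average drop in accuracy when one feature column is shuffled.

    A large drop suggests the model relies on that feature;
    a drop near zero suggests it does not.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


# Hypothetical black-box model: uses feature 0 and ignores feature 1.
def model(row):
    return 1 if row[0] > 0.5 else 0


data_rng = random.Random(1)
rows = [(data_rng.random(), data_rng.random()) for _ in range(200)]
labels = [model(r) for r in rows]

scores = permutation_importance(model, rows, labels, n_features=2)
print(scores)  # feature 0 shows a clear drop; feature 1 shows none
```

Because the toy model ignores feature 1, shuffling that column never changes a prediction, so its importance is exactly zero. With real models the signal is noisier, and correlated features can share or hide importance.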

Establishing Responsibility for AI Actions

When an AI system makes an error or causes harm, the question of accountability becomes complex:

- Legal and ethical implications: Who is responsible – the developers, the users, or the AI itself?

Clear guidelines and regulations for AI liability are necessary to address this challenge. Establishing responsibility for AI actions requires careful consideration of AI governance and regulation.

Job Displacement and Economic Inequality due to AI Learning

The automation potential of AI raises concerns about job displacement and the exacerbation of economic inequality.

Automation and the Workforce

AI-driven automation could significantly affect employment across many sectors. Two points stand out:

- Jobs at risk: Many jobs involving routine tasks are susceptible to automation.

- Reskilling and upskilling initiatives: Investing in programs that help workers adapt to the changing job market is crucial.

Mitigating job displacement therefore requires proactive measures: alongside reskilling and upskilling, policies are needed that support workers affected by automation.

Addressing Economic Inequality

Ensuring that the benefits of AI are shared broadly across society is paramount:

- Policies aimed at reducing income inequality: Implementing policies that redistribute wealth and create more equitable economic opportunities.

- Social safety nets: Strengthening social safety nets to provide support for those affected by job displacement.

Investing in education and training is crucial for preparing the workforce for a changing labor market and for promoting inclusive growth.

Conclusion

The ethical challenges associated with AI learning are multifaceted and demand careful consideration. Bias, privacy concerns, accountability issues, and the potential for economic inequality are all significant hurdles that must be addressed for the responsible development and deployment of AI. Ethical guidelines, regulations, and ongoing monitoring are crucial to ensure that AI systems are developed and used in a way that benefits all of humanity. Continue exploring the complexities of AI learning and contribute to the ongoing conversation about its ethical future.

Featured Posts

-

Federal Investigation Into Nova Scotia Power Customer Data Breach

May 31, 2025

Federal Investigation Into Nova Scotia Power Customer Data Breach

May 31, 2025 -

Dragons Den The Illusion Of Reality

May 31, 2025

Dragons Den The Illusion Of Reality

May 31, 2025 -

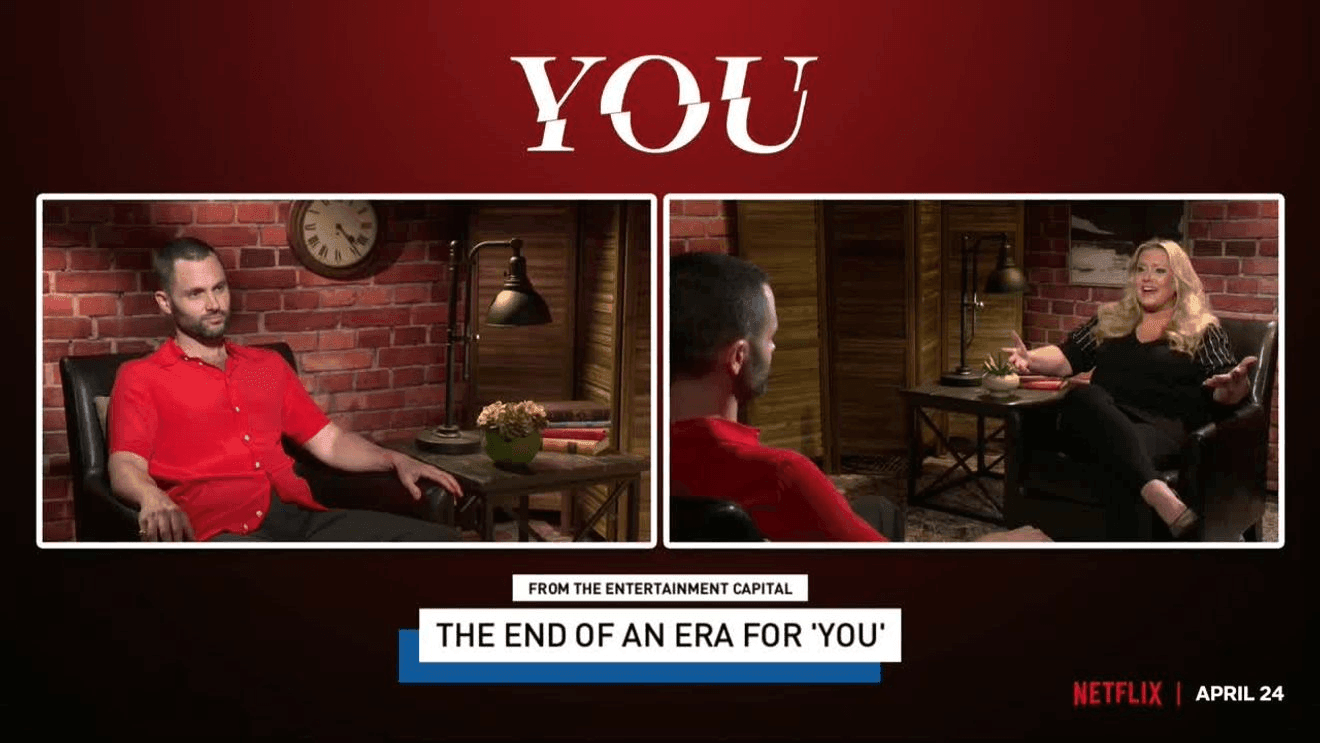

You Netflix Was The Final Season Worth The Wait

May 31, 2025

You Netflix Was The Final Season Worth The Wait

May 31, 2025 -

U S Court Ruling Strikes Down Trump Tariffs Impact On Businesses And Canada

May 31, 2025

U S Court Ruling Strikes Down Trump Tariffs Impact On Businesses And Canada

May 31, 2025 -

Carnaval D Ouistreham Le Programme Des Festivites Estivales

May 31, 2025

Carnaval D Ouistreham Le Programme Des Festivites Estivales

May 31, 2025