Understanding AI's Learning Process: A Path To Responsible AI


Types of AI Learning

AI systems learn in various ways, each with its own strengths and weaknesses. Understanding these different approaches is fundamental to building effective and ethical AI.

Supervised Learning

In supervised learning, an AI model learns from labeled data. This means the data used for training includes both the input and the desired output. The algorithm identifies patterns in the data to map inputs to outputs. Think of it like a teacher providing examples and answers to a student.

- Requires a large, meticulously labeled dataset: The quality and quantity of this data directly impact the model's accuracy. Insufficient or poorly labeled data leads to inaccurate predictions.

- High accuracy (potentially): When trained on high-quality data, supervised learning models can achieve high accuracy. However, that accuracy depends heavily on the quality of the training data, and it should be measured on held-out examples the model did not see during training.

- Examples: Medical diagnosis (image analysis to detect diseases), spam filtering (classifying emails as spam or not spam), fraud detection (identifying suspicious transactions). These applications leverage the ability of supervised learning to accurately categorize and predict based on labeled examples.
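To make the input-to-output mapping concrete, here is a minimal sketch of supervised learning in plain Python. It uses a nearest-centroid classifier, chosen only because it fits in a few lines; real spam filters use far richer features and models. The two features (link count and exclamation-mark count), the function names, and all data points are hypothetical.

```python
from math import dist


def fit_centroids(X, y):
    """Learn one centroid (the mean feature vector) per label from labeled data."""
    sums, counts = {}, {}
    for features, label in zip(X, y):
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def predict(centroids, features):
    """Predict the label whose centroid is nearest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


def accuracy(centroids, X, y):
    """Fraction of examples whose predicted label matches the true label."""
    correct = sum(predict(centroids, f) == label for f, label in zip(X, y))
    return correct / len(y)


# Hypothetical emails as [links, exclamation marks], each with a known label.
X_train = [[0, 0], [1, 0], [0, 1], [8, 5], [9, 7], [7, 6]]
y_train = ["ham", "ham", "ham", "spam", "spam", "spam"]
model = fit_centroids(X_train, y_train)

# Evaluate on held-out examples the model never saw during training.
X_test = [[1, 1], [10, 6]]
y_test = ["ham", "spam"]
print(accuracy(model, X_test, y_test))  # prints 1.0
```

The "teacher" here is the `y_train` list: without those labels the classifier would have nothing to map inputs onto. Scoring on held-out examples, rather than on the training examples, is what indicates whether the learned mapping generalizes.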

Unsupervised Learning

Unlike supervised learning, unsupervised learning uses unlabeled data. The AI system identifies patterns, structures, and relationships in the data without any pre-defined output. This is more akin to exploratory data analysis where the AI seeks to uncover hidden insights.

- Discovers hidden structures and relationships: This makes it invaluable for tasks where the desired output isn't readily defined.

- Useful for exploratory data analysis: The groupings and outliers it surfaces can suggest hypotheses worth investigating further.

- Examples: Customer segmentation (grouping customers based on their purchasing behavior), anomaly detection (identifying unusual events or data points), recommendation systems (suggesting products or services based on user preferences). These examples demonstrate how unsupervised learning can reveal valuable information from raw data.
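Customer segmentation is often done with clustering. The sketch below is plain k-means (Lloyd's algorithm) in standard-library Python, assuming two hypothetical features per customer (annual spend in thousands of dollars and visits per month). Seeding the centroids with the first k points is a simplification that keeps the example deterministic; practical implementations typically use random or k-means++ initialization.

```python
from math import dist


def kmeans(points, k, max_iter=100):
    """Lloyd's k-means: alternate assignment and centroid-update steps."""
    # Simplification: seed centroids with the first k points (deterministic).
    centroids = [list(p) for p in points[:k]]
    labels = None
    for _ in range(max_iter):
        # Assignment step: each point joins the cluster of its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the algorithm has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # leave a centroid in place if its cluster is empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Hypothetical customers as [annual spend in $1000s, visits per month]; no labels.
customers = [[2, 1], [40, 8], [3, 2], [42, 9], [1, 1], [38, 7]]
labels, centroids = kmeans(customers, k=2)
print(labels)  # prints [0, 1, 0, 1, 0, 1]
print(centroids)
```

Unlike the supervised case, no labels are supplied: the algorithm discovers on its own that the customers fall into a low-spend group and a high-spend group. Interpreting what those clusters mean is still up to the analyst.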

Reinforcement Learning

Reinforcement learning involves an AI agent interacting with an environment and learning through trial and error. The agent receives rewards for desirable actions and penalties for undesirable ones, and over many iterations it learns a strategy (a policy) that maximizes its cumulative reward.

- Agent interacts with an environment: This interaction is key to the learning process. The agent learns by experiencing the consequences of its actions.

- Learns through feedback and optimization: The reward signal guides the agent toward strategies that maximize its long-term reward, not just the immediate one.

- Examples: Self-driving cars (learning to navigate roads and traffic), game playing (mastering complex games like chess or Go), resource management (optimizing resource allocation in complex systems). Reinforcement learning enables AI to master complex tasks through continuous interaction and adaptation.
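The trial-and-error loop can be illustrated with tabular Q-learning, one classic reinforcement learning algorithm. The environment is a hypothetical five-cell corridor: the agent starts at the left end, earns a reward of +1 for reaching the right end, and pays a penalty of 0.01 for every other move. The learning rate, discount factor, exploration rate, and episode count are illustrative choices, not tuned values.

```python
import random

N_STATES = 5        # hypothetical corridor: cells 0..4, goal at cell 4
MOVES = (-1, +1)    # action 0 moves left, action 1 moves right


def step(state, action):
    """Toy environment: +1 for reaching the goal, -0.01 for every other move."""
    next_state = min(max(state + MOVES[action], 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, -0.01, False


def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with an epsilon-greedy exploration strategy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon, otherwise exploit current estimates.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = max(range(2), key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # Move the estimate toward reward + discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Best learned action in each non-goal cell (1 means 'move right')."""
    return [max(range(2), key=lambda a: q[s][a]) for s in range(N_STATES - 1)]


q = q_learning()
print(greedy_policy(q))  # prints [1, 1, 1, 1]
```

After training, the greedy policy moves right in every non-goal cell, the shortest route to the reward. The agent is never told which direction is correct; it infers this purely from the rewards and penalties it experiences.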

Data Bias and its Impact on AI Learning

The data used to train AI models significantly impacts their behavior. Bias in this data can lead to unfair or discriminatory outcomes, highlighting the critical role of data quality and ethical considerations in AI development.

Sources of Bias

Bias can creep into training data from numerous sources, leading to skewed and potentially harmful results. Understanding these sources is critical for building fairer AI systems.

- Historical biases reflected in data: Data often reflects existing societal biases, which models trained on that data can perpetuate and even amplify.

- Sampling bias and representation issues: If the training data doesn't adequately represent all groups, the AI may perform poorly or unfairly for underrepresented populations.

- Impact on fairness and equity: Biased AI can lead to unfair or discriminatory outcomes in areas like loan applications, hiring processes, and even criminal justice.
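One simple check for sampling bias is to compare each group's share of the training data with its share of the population the model will serve. The sketch below assumes each training example carries a group attribute and that a reference share is known for each group; the function name, the data, and the 0.8 cutoff for flagging under-representation are all hypothetical and illustrative.

```python
from collections import Counter


def representation_report(groups, reference_shares, min_ratio=0.8):
    """Compare each group's share of the training data with a reference share.

    Returns {group: (observed_share, ratio_to_reference, under_represented)}.
    min_ratio is an arbitrary, illustrative cutoff for flagging a group.
    """
    counts = Counter(groups)
    total = len(groups)
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        ratio = observed / expected
        report[group] = (observed, ratio, ratio < min_ratio)
    return report


# Hypothetical data: group "B" is 40% of the population but 20% of training rows.
training_groups = ["A"] * 80 + ["B"] * 20
population_shares = {"A": 0.6, "B": 0.4}
report = representation_report(training_groups, population_shares)
for group, (share, ratio, flagged) in report.items():
    print(f"{group}: {share:.0%} of data, {ratio:.2f}x reference, flagged={flagged}")
```

A check like this only catches representation gaps in attributes you record; it says nothing about historical bias baked into the labels themselves.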

Mitigating Bias

Addressing bias requires a multifaceted approach involving careful data handling and algorithmic adjustments.

- Careful data curation and preprocessing: This includes identifying and correcting biases in the data before training the model.

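- Algorithmic fairness techniques: Applying methods designed to reduce bias in AI decision-making, such as reweighting training examples, adding fairness constraints during training, or adjusting decision thresholds after training.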

- Regular auditing and monitoring: Continuously evaluating AI systems for bias and adjusting them as needed. This ongoing monitoring is crucial for maintaining fairness and preventing the perpetuation of biases.
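Two of these ideas can be sketched in a few lines. `reweighing_weights` implements reweighing, a preprocessing technique due to Kamiran and Calders that weights each training example by P(group) × P(label) / P(group, label), so that group membership and label look statistically independent in the weighted data. `demographic_parity_gap` is one common audit metric: the difference between the highest and lowest rate of positive predictions across groups. Demographic parity is only one of several fairness definitions, and the function names, groups, and labels here are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


def selection_rates(groups, predictions):
    """Share of positive predictions (e.g. approved loans) per group."""
    totals, positives = Counter(groups), Counter()
    for g, p in zip(groups, predictions):
        positives[g] += p
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(groups, predictions):
    """Largest minus smallest per-group selection rate (0 means parity)."""
    rates = selection_rates(groups, predictions)
    return max(rates.values()) - min(rates.values())


groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]  # hypothetical historical outcomes (1 = approved)

print(selection_rates(groups, labels))         # prints {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(groups, labels))  # prints 0.5
weights = reweighing_weights(groups, labels)
print([round(weighted_positive_rate(groups, labels, weights, g), 3) for g in "AB"])
```

In this toy data the historical approval rate is 0.75 for group A and 0.25 for group B, so a model that simply copied history would show a parity gap of 0.5. After reweighing, the weighted approval rate is 0.5 in both groups, which is the signal the model would then be trained on. Running the gap metric on a deployed model's predictions at regular intervals is one way to put the auditing step into practice.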

Ensuring Transparency and Explainability in AI

Many AI models, especially deep learning models, are often described as "black boxes," meaning their decision-making processes are opaque and difficult to understand. This lack of transparency raises concerns about trust, accountability, and ethical implications.

The Black Box Problem

The complexity of some AI models makes it challenging to understand how they arrive at their conclusions.

- Difficulty interpreting decision-making processes: This opacity makes it hard to identify and correct errors or biases.

- Lack of transparency can hinder trust and accountability: It’s difficult to hold AI systems accountable if their decision-making processes are unclear.

- Importance of explainable AI (XAI): The field of XAI focuses on developing techniques to make AI models more interpretable and transparent.

Techniques for Explainable AI

Several methods aim to improve the transparency and explainability of AI models.

- Feature importance analysis: Identifying which input features most strongly influence the model's output.

- Rule extraction techniques: Extracting understandable rules from the model that explain its decision-making process.

- Visualizations and interactive explanations: Creating visualizations and interactive tools to help users understand how the AI model works.
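Feature importance analysis can be sketched with permutation importance: shuffle one feature's values and measure how much the model's accuracy drops. A feature the model relies on produces a large drop; a feature it ignores produces none. The model below is a hypothetical hand-written rule that looks only at feature 0, so the expected result is known in advance. This is a from-scratch illustration; scikit-learn offers a fuller version as `sklearn.inspection.permutation_importance`.

```python
import random


def model_accuracy(model, X, y):
    """Fraction of rows where the model's prediction equals the true label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean accuracy drop when each feature column is shuffled independently."""
    rng = random.Random(seed)
    baseline = model_accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row, replacing only feature j with its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - model_accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def rule_model(row):
    """Hypothetical model: predicts 1 when feature 0 exceeds 0.5, ignores feature 1."""
    return 1 if row[0] > 0.5 else 0


X = [[0.1, 0.9], [0.2, 0.1], [0.3, 0.5], [0.4, 0.7],
     [0.6, 0.2], [0.7, 0.8], [0.8, 0.4], [0.9, 0.6]]
y = [0, 0, 0, 0, 1, 1, 1, 1]
print(permutation_importance(rule_model, X, y))
```

Feature 1 scores exactly zero because shuffling it never changes a prediction, while shuffling feature 0 breaks the rule and lowers accuracy. Because the method only needs predictions, it works the same way on a black-box model whose internals cannot be inspected.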

Conclusion

Understanding the AI learning process is paramount for creating responsible and ethical AI systems. By understanding the different learning methods, addressing data bias, and striving for transparency and explainability, we can harness the power of AI while mitigating its risks. Building responsible AI requires continuous learning and adaptation, with ethical considerations addressed throughout the entire AI development lifecycle.

Featured Posts

-

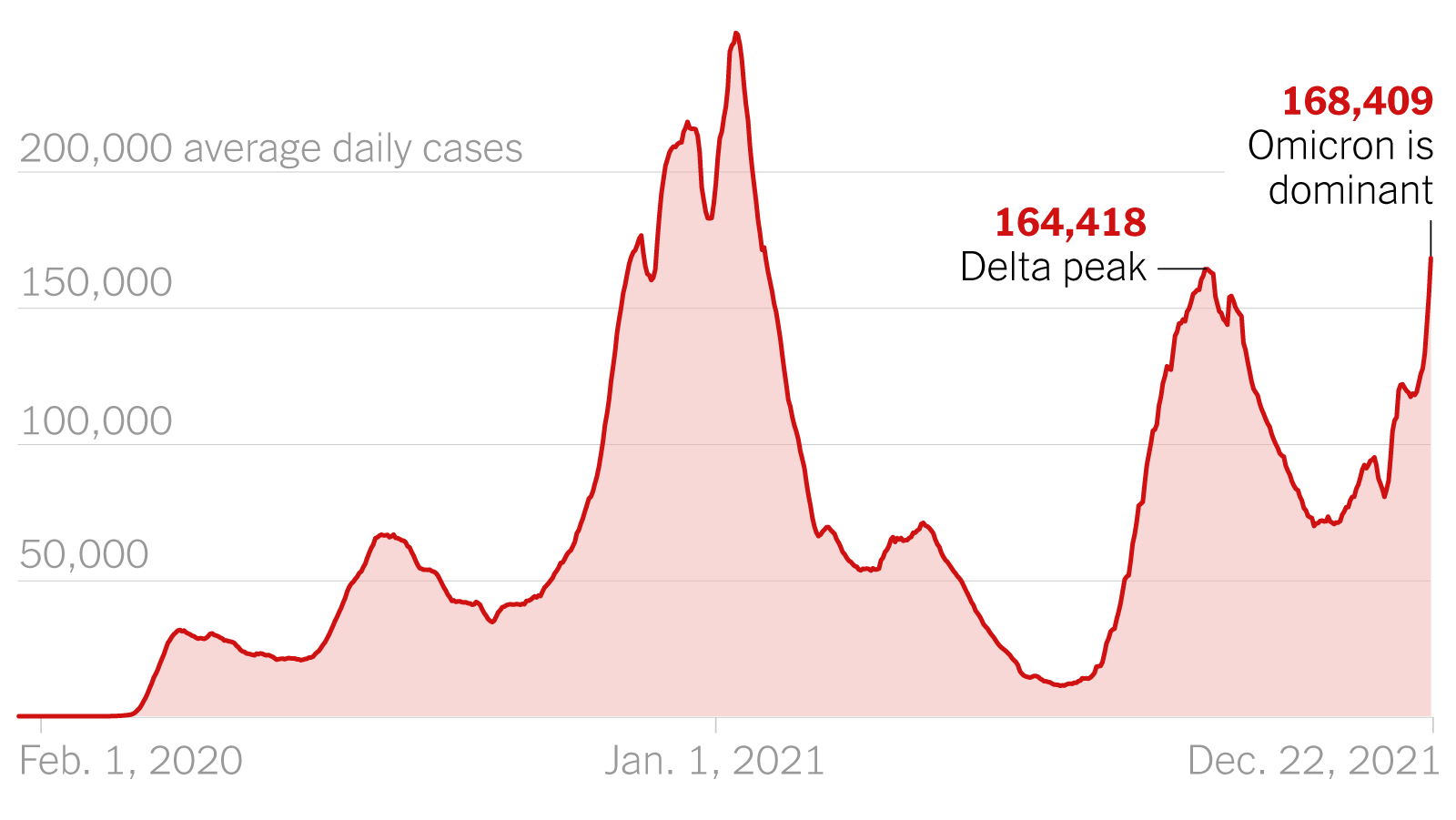

Analysis The Link Between A New Covid 19 Variant And Increased Infections

May 31, 2025

Analysis The Link Between A New Covid 19 Variant And Increased Infections

May 31, 2025 -

Isabelle Autissier L Urgence D Une Union Face Aux Defis Environnementaux

May 31, 2025

Isabelle Autissier L Urgence D Une Union Face Aux Defis Environnementaux

May 31, 2025 -

Mel Kiper Jr On The Browns No 2 Pick Who Will They Draft

May 31, 2025

Mel Kiper Jr On The Browns No 2 Pick Who Will They Draft

May 31, 2025 -

Jaime Munguia Issues Statement On Recent Drug Test Findings

May 31, 2025

Jaime Munguia Issues Statement On Recent Drug Test Findings

May 31, 2025 -

Veterinary Watchdogs Separating Hype From Reality

May 31, 2025

Veterinary Watchdogs Separating Hype From Reality

May 31, 2025