Why AI Doesn't "Learn" And How This Impacts Responsible AI Practices

The Illusion of Learning: How AI Systems Operate

AI systems don't learn in the human sense; they don't possess consciousness or understanding. Instead, they excel at identifying patterns within vast datasets. This pattern recognition is achieved through statistical modeling and machine learning algorithms, including deep learning and reinforcement learning. In machine learning, "learning" means adjusting a model's internal parameters so that its outputs better fit the training data (or, in reinforcement learning, so that it collects more reward). The resulting model captures correlations in that data and extrapolates from them to make predictions or decisions. This process is fundamentally different from human learning.

Let's contrast human learning with AI's pattern recognition capabilities:

- Humans learn through understanding, reasoning, and context. We connect new information to existing knowledge, form concepts, and draw inferences based on a wealth of experiences and perspectives.

- AI identifies correlations and extrapolates from existing data. It lacks the capacity for true understanding and often struggles with novel situations or contexts not represented in its training data.

- Humans adapt to novel situations; AI requires retraining for new data. To handle unforeseen circumstances, humans can adjust their approach and apply existing knowledge creatively. AI, on the other hand, typically needs to be retrained with new data to adapt.
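To make the contrast concrete, here is a minimal Python sketch, assuming a toy straight-line model stands in for a real machine learning system. The model is fitted by ordinary least squares to synthetic data whose true relationship is y = x², sampled only for x between 0 and 1. Inside that range the fitted correlation is roughly right; outside it, the model keeps extending the same line because it has no notion of the underlying rule.

```python
# Toy illustration: a model that only captures correlations in its
# training range can extrapolate badly outside it. All data is synthetic.

def fit_line(xs, ys):
    """Ordinary least squares fit of y = a * x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    a = cov / var
    b = mean_y - a * mean_x
    return a, b

# Training data: the true rule is y = x**2, but we only see x in [0, 1].
train_x = [i / 10 for i in range(11)]
train_y = [x ** 2 for x in train_x]

a, b = fit_line(train_x, train_y)

def predict(x):
    """Apply the fitted line; it knows nothing beyond the training range."""
    return a * x + b

for x in (0.5, 1.0, 3.0):
    print(f"x={x}: predicted={predict(x):.2f}, actual={x ** 2:.2f}")
```

Running it prints predictions of 0.35, 0.85 and 2.85 against true values of 0.25, 1.00 and 9.00: a reasonable fit within the training range, but far off at x = 3. Correcting this requires more representative data and retraining, as the last point above notes.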

Data Bias and its Impact on AI "Learning"

A significant challenge in AI development is the pervasive issue of data bias. Because a model reproduces the patterns in its training data, biased data leads to biased outputs that can perpetuate and even amplify existing societal inequalities. This algorithmic bias arises from several sources and undermines the fairness and trustworthiness of AI systems. Addressing it is the focus of work on data bias mitigation and fairness in AI.

Examples of biased AI systems and their consequences include:

- Facial recognition systems demonstrating higher error rates for individuals with darker skin tones.

- Loan application algorithms discriminating against certain demographic groups.

- AI-powered hiring tools exhibiting gender or racial bias.

Addressing data bias is complex:

- Sources of data bias: historical data reflecting past prejudices, flawed sampling methods, and errors in human annotation of data.

- Techniques for detecting data bias: statistical analysis to identify disparities in data representation and the use of fairness metrics to assess the potential for bias in AI outputs.

- Strategies for mitigating bias: data augmentation to balance datasets, algorithmic adjustments to address biases in model training, and careful selection and preprocessing of data.
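The detection and mitigation steps above can be sketched in a few lines of Python. This is a minimal illustration on synthetic, hypothetical loan decisions for two groups labelled "A" and "B"; the data and function names are assumptions, not taken from any real system. It checks representation (each group's share of the data), computes two common fairness metrics (the demographic parity difference and the disparate impact ratio, which is often compared against the "four-fifths" threshold of 0.8), and then applies reweighing (Kamiran and Calders), a preprocessing technique that gives each (group, outcome) combination a weight so that group and outcome are independent in the weighted data. Reweighing is swapped in here as one concrete alternative to the data augmentation mentioned above because it is short to show.

```python
from collections import Counter

# Synthetic, hypothetical loan decisions as (group, approved) pairs; 1 = approved.
records = (
    [("A", 1)] * 60 + [("A", 0)] * 40
    + [("B", 1)] * 15 + [("B", 0)] * 35
)

# Detection step 1: representation, i.e. each group's share of the dataset.
group_counts = Counter(g for g, _ in records)
shares = {g: c / len(records) for g, c in group_counts.items()}

def selection_rates(rows):
    """Fraction of positive outcomes (approvals) within each group."""
    totals = Counter(g for g, _ in rows)
    positives = Counter(g for g, y in rows if y == 1)
    return {g: positives[g] / totals[g] for g in totals}

# Detection step 2: fairness metrics on the outcomes.
rates = selection_rates(records)
parity_gap = max(rates.values()) - min(rates.values())    # demographic parity difference
impact_ratio = min(rates.values()) / max(rates.values())  # disparate impact ratio
flagged = impact_ratio < 0.8                              # "four-fifths" rule of thumb

def reweighing_weights(rows):
    """Kamiran-Calders reweighing: w(g, y) = P(g) * P(y) / P(g, y).

    Pairs that are rarer than independence of group and outcome would
    predict get weights above 1; more common pairs get weights below 1.
    """
    n = len(rows)
    p_group = Counter(g for g, _ in rows)
    p_label = Counter(y for _, y in rows)
    p_joint = Counter(rows)
    return {
        (g, y): (p_group[g] / n) * (p_label[y] / n) / (p_joint[(g, y)] / n)
        for (g, y) in p_joint
    }

def weighted_selection_rates(rows, weights):
    """Selection rate per group when each row counts with its weight."""
    approved, total = Counter(), Counter()
    for g, y in rows:
        w = weights[(g, y)]
        total[g] += w
        if y == 1:
            approved[g] += w
    return {g: approved[g] / total[g] for g in total}

# Mitigation: after reweighing, both groups share the same weighted approval rate.
weights = reweighing_weights(records)
weighted = weighted_selection_rates(records, weights)
print(shares, rates, round(impact_ratio, 2), flagged)
print({g: round(r, 2) for g, r in weighted.items()})
```

On this data group A is approved 60% of the time and group B 30%, giving a disparate impact ratio of 0.5, well below 0.8. After reweighing, both groups have a weighted approval rate of 0.5. The weights would then be passed to a training routine that accepts per-sample weights; balancing the training data this way does not by itself guarantee a fair model, so the same metrics still need to be computed on the model's outputs.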

The Importance of Human Oversight in Responsible AI

Human intervention is paramount throughout the entire AI lifecycle – from design and development to deployment and monitoring. This "human-in-the-loop" approach is vital for ensuring responsible AI practices. Transparency and accountability are key aspects of ethical AI development, requiring explainable AI (XAI) techniques to make AI decision-making more understandable. Furthermore, ethical considerations such as privacy, security, and the potential for misuse must be addressed.
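One common way to put a human in the loop is to let the model act only when it is confident and route everything else to a person. The sketch below assumes the model returns a confidence score between 0 and 1 that is reasonably calibrated; the `ReviewRouter` class, the 0.9 threshold and the log format are hypothetical choices for illustration. Logging both the model's label and the final label supports accountability, since disagreements between model and reviewer can be audited later.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float  # model score in [0, 1]; assumed to be reasonably calibrated

@dataclass
class ReviewRouter:
    """Hypothetical human-in-the-loop gate for model decisions."""
    threshold: float = 0.9  # illustrative cut-off; set from validation data and risk tolerance
    review_queue: List[Prediction] = field(default_factory=list)
    # Each entry: (item_id, model_label, final_label, decided_by)
    audit_log: List[Tuple[str, str, str, str]] = field(default_factory=list)

    def route(self, pred: Prediction) -> str:
        """Auto-accept confident predictions; queue the rest for a human reviewer."""
        if pred.confidence >= self.threshold:
            self.audit_log.append((pred.item_id, pred.label, pred.label, "auto"))
            return pred.label
        self.review_queue.append(pred)
        return "pending_review"

    def resolve(self, item_id: str, human_label: str) -> None:
        """Record a reviewer's final decision, keeping the model's label for auditing."""
        for i, pred in enumerate(self.review_queue):
            if pred.item_id == item_id:
                self.review_queue.pop(i)
                self.audit_log.append((item_id, pred.label, human_label, "human"))
                return
        raise KeyError(item_id)

router = ReviewRouter()
print(router.route(Prediction("loan-1", "approve", 0.97)))  # approve
print(router.route(Prediction("loan-2", "deny", 0.62)))     # pending_review
router.resolve("loan-2", "approve")  # the reviewer overrides the model
print(router.audit_log)
```

The threshold trades reviewer workload against risk: lowering it sends fewer cases to people, raising it sends more.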

Implementing responsible AI requires:

- Establishing clear ethical guidelines for AI development and deployment.

- Implementing rigorous testing and validation procedures to identify and mitigate bias and other potential issues.

- Creating mechanisms for ongoing monitoring and auditing of AI systems to ensure they continue to operate ethically and effectively.

- Developing methods for explaining AI decisions (XAI) to increase transparency and build trust.
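Ongoing monitoring often starts with checking whether live inputs or scores still resemble what the model saw during training. A minimal sketch, assuming numeric values, is the Population Stability Index (PSI), which compares the binned distribution of a baseline sample with a live one: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the baseline and live shares of bin i. The bin count, the small floor for empty bins and the 0.1 / 0.25 alert levels are widely used rules of thumb rather than a standard, and the function names are hypothetical.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a live sample.

    Bins are equal-width over the baseline's range; out-of-range values are
    clamped into the end bins. eps floors empty bins to avoid log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

def drift_status(score):
    """Map a PSI value to an alert level (rule-of-thumb thresholds)."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate shift"
    return "significant shift: investigate and consider retraining"

baseline = [i / 100 for i in range(100)]  # synthetic training-time scores
shifted = [v + 0.3 for v in baseline]     # synthetic live scores after a shift

print(drift_status(psi(baseline, baseline)))  # stable
print(drift_status(psi(baseline, shifted)))   # significant shift: investigate and consider retraining
```

The same check can be run separately for each demographic group, or applied to a fairness metric computed per time window, so that a system that behaved acceptably at launch is flagged if it drifts.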

The Future of Responsible AI and "Learning"

The field of AI is evolving rapidly, with increasing focus on safety and trustworthiness; much current research is framed around robust, safe, and trustworthy AI. Advances in XAI and new methods for mitigating bias are central to that work.

Future directions in responsible AI include:

- Developing more robust and verifiable AI algorithms that are less susceptible to manipulation or unexpected behavior.

- Increased investment in AI safety and security research to prevent malicious use and mitigate unintended consequences.

- Integrating human values and ethical principles into the design and development of AI systems from the outset.

Conclusion: Rethinking "Learning" in the Context of Responsible AI Practices

AI does not learn like humans; its "learning" is a sophisticated form of pattern recognition from data. Data bias significantly affects AI outcomes and can lead to unfair or discriminatory results. Human oversight is therefore essential. By understanding these limitations and building oversight into every stage of the AI lifecycle, we can harness the power of AI for good while mitigating potential harms.

Featured Posts

-

Investigation Could Price Caps And Comparison Sites Improve Vet Costs

May 31, 2025

Investigation Could Price Caps And Comparison Sites Improve Vet Costs

May 31, 2025 -

Estevan Announces Complete Street Sweeping Schedule

May 31, 2025

Estevan Announces Complete Street Sweeping Schedule

May 31, 2025 -

Banksy Artwork Makes Debut In Dubai

May 31, 2025

Banksy Artwork Makes Debut In Dubai

May 31, 2025 -

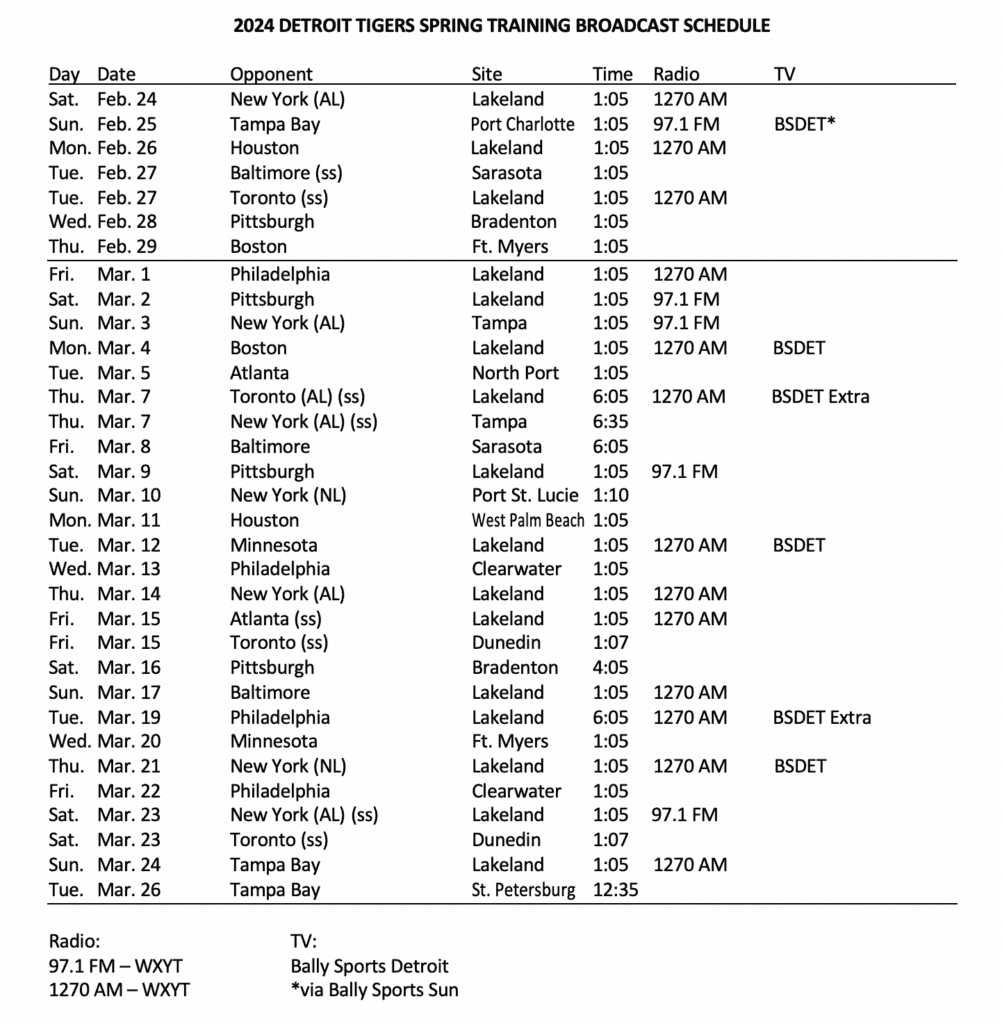

Jack White Joins Detroit Tigers Broadcast Hall Of Fame Discussion And Baseball Talk

May 31, 2025

Jack White Joins Detroit Tigers Broadcast Hall Of Fame Discussion And Baseball Talk

May 31, 2025 -

New Residents Welcome Two Weeks Free Accommodation In Germany

May 31, 2025

New Residents Welcome Two Weeks Free Accommodation In Germany

May 31, 2025