Why AI Doesn't Truly Learn: A Guide To Ethical AI Development And Deployment

The Illusion of Learning: How AI Works

A key distinction exists between human learning and the way AI, specifically Machine Learning (ML) and Deep Learning (DL), operates. Humans learn through understanding context, meaning, and nuanced relationships. AI, on the other hand, relies on statistical pattern recognition. It identifies correlations in vast datasets but lacks the genuine comprehension that fuels human learning. This difference is crucial to understanding AI's limitations.

- AI learns from data, not understanding: AI systems process enormous amounts of data to identify patterns, but this process lacks the inherent understanding of meaning and context present in human learning. They are essentially sophisticated pattern-matching machines.

- AI identifies patterns, not concepts: While AI can excel at identifying patterns in data, it struggles to grasp the underlying concepts those patterns represent. This limitation restricts its ability to generalize knowledge across different contexts.

- AI generalizes poorly beyond its training data: A model trained to identify cats in photographs might struggle to recognize a cat in a video or a cartoon rendering. This limited generalizability is a significant gap compared to human learning, where knowledge can often be applied flexibly to new situations.

- AI is heavily reliant on the quality and quantity of training data: The performance of AI systems is directly tied to the data used to train them. Biased or incomplete data will lead to biased or inaccurate results. "Garbage in, garbage out" is a particularly apt maxim in AI.

- Overfitting: A significant challenge in AI is overfitting. This occurs when a model fits the training data too closely, including its noise and quirks, so that it performs exceptionally on that specific data but poorly on new, unseen data. This limits its real-world applicability and highlights the limitations of its "learning" process.
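The overfitting point can be made concrete with a small sketch. Everything in it is an assumption made for illustration: the toy data, the deliberately flipped labels, and the two stand-in "models" (a memorizer that copies the nearest training example, and a single learned cut-off). It is not a recipe for real model evaluation, only a way to see the gap between training and test accuracy.

```python
# Toy illustration of overfitting using only the standard library.
# The data and both "models" are hypothetical, chosen for illustration.

def true_label(x):
    return 1 if x >= 50 else 0

# Training set: even x values, with every fifth label deliberately flipped (noise).
train = []
for i, x in enumerate(range(0, 100, 2)):
    y = true_label(x)
    if i % 5 == 0:
        y = 1 - y
    train.append((x, y))

# Test set: odd x values the models never saw, with clean labels.
test = [(x, true_label(x)) for x in range(1, 100, 2)]

def memorizer(x):
    """1-nearest-neighbour: copies the label of the closest training point."""
    nearest = min(train, key=lambda p: abs(p[0] - x))
    return nearest[1]

def fit_threshold(data):
    """Pick the cut-off t that minimises training errors for 'predict 1 if x >= t'."""
    def errors(t):
        return sum((1 if x >= t else 0) != y for x, y in data)
    return min((x for x, _ in data), key=errors)

threshold = fit_threshold(train)

def simple_model(x):
    return 1 if x >= threshold else 0

def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)

print("memorizer train/test:", accuracy(memorizer, train), accuracy(memorizer, test))
print("threshold train/test:", accuracy(simple_model, train), accuracy(simple_model, test))
```

The memorizer reproduces every training label, noise included, yet does worse on the unseen points than the much simpler cut-off, which is the pattern the overfitting bullet describes.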

The Ethical Implications of AI's Limitations

The limitations of AI create significant ethical challenges. The lack of genuine understanding and the reliance on data introduce risks of bias, lack of transparency, and potential misuse.

- Biased training data leads to biased AI outputs, perpetuating societal inequalities: AI systems trained on biased data tend to reproduce that bias in their outputs, reflecting and sometimes amplifying existing societal inequalities. For instance, facial recognition systems trained primarily on images of white faces have demonstrated significantly lower accuracy rates for people of color.

- The "black box" nature of many AI systems makes it difficult to understand their decision-making process, hindering accountability: Many complex AI systems, particularly deep learning models, function as "black boxes." Their decision-making processes are opaque, making it challenging to understand why a particular outcome was reached. This lack of transparency raises serious concerns about accountability and trust.

- The potential for AI to be used for malicious purposes (e.g., autonomous weapons, surveillance) raises significant ethical concerns: The power of AI can be misused. The development of autonomous weapons systems and the potential for widespread surveillance raise serious ethical and societal concerns. Responsible development and deployment are crucial to mitigate these risks.

- Explainable AI (XAI): The development of Explainable AI (XAI) aims to address the transparency issue. XAI focuses on creating AI systems whose decision-making processes are more readily understandable, enhancing accountability and trust.
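One simple way to surface the kind of bias described in the first point above is to report accuracy separately for each group rather than as a single overall figure. The sketch below assumes a hypothetical list of evaluation records with made-up group names and labels; real audits would use more data and usually more than one metric.

```python
from collections import defaultdict

# Hypothetical evaluation records: (group, true_label, predicted_label).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 1, 0),
]

def accuracy_by_group(rows):
    """Return the fraction of correct predictions for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, pred in rows:
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}

def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())

per_group = accuracy_by_group(records)
gap = accuracy_gap(per_group)
print(per_group, gap)
```

An overall accuracy of 75% on these records would hide the fact that one group is served perfectly and the other only half the time, which is why the per-group breakdown matters.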

Building Responsible and Ethical AI Systems

Developing and deploying ethical AI requires a proactive approach that prioritizes transparency, accountability, and fairness.

- Ensuring diverse and representative datasets minimizes bias: Careful curation of training data is essential to minimize bias. Datasets should be diverse and representative of the populations they will impact.

- Implementing explainable AI (XAI) techniques to understand AI's decision-making process: XAI techniques can help make AI systems more transparent and accountable, allowing us to understand the reasoning behind their decisions.

- Establishing clear guidelines and regulations for AI development and deployment: Governments and organizations need to establish clear guidelines and regulations to govern the development and deployment of AI, ensuring ethical considerations are prioritized.

- Continuous monitoring and evaluation of AI systems to detect and mitigate unintended consequences: AI systems should be continuously monitored and evaluated to identify and address any unintended consequences or biases that may emerge.

- The importance of human oversight in AI systems: Human oversight is crucial to ensure that AI systems are used responsibly and ethically. Humans should retain ultimate control over AI decisions, especially in high-stakes situations.
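As one illustration of the XAI techniques mentioned above, permutation importance asks how much a model's accuracy drops when a single input column is shuffled. The sketch below is a minimal version under stated assumptions: the rows, the feature names `income` and `shoe_size`, and the stand-in `model` function are all hypothetical, and a real project would more likely rely on an established library implementation.

```python
import random

# Hypothetical rows: (income_in_thousands, shoe_size) with an approve/deny label.
rows = [(20, 38), (25, 44), (30, 41), (35, 39), (40, 45),
        (60, 40), (65, 43), (70, 38), (75, 42), (80, 44)]
labels = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
feature_names = ["income", "shoe_size"]

def model(row):
    """Stand-in for a trained model: it only looks at income."""
    income, _shoe_size = row
    return 1 if income >= 50 else 0

def accuracy(data, targets):
    return sum(model(r) == t for r, t in zip(data, targets)) / len(targets)

def permutation_importance(data, targets, column, repeats=20, seed=0):
    """Average drop in accuracy when one column is shuffled across rows."""
    rng = random.Random(seed)
    baseline = accuracy(data, targets)
    drops = []
    for _ in range(repeats):
        shuffled = [r[column] for r in data]
        rng.shuffle(shuffled)
        permuted = [r[:column] + (v,) + r[column + 1:]
                    for r, v in zip(data, shuffled)]
        drops.append(baseline - accuracy(permuted, targets))
    return sum(drops) / repeats

importance = {name: permutation_importance(rows, labels, i)
              for i, name in enumerate(feature_names)}
print(importance)
```

Shuffling a feature the model ignores leaves accuracy unchanged, while shuffling one it depends on degrades it, giving a rough, model-agnostic view of what drives the decisions.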

The Role of Human-in-the-Loop Systems

Human-in-the-loop systems are crucial for responsible AI. These systems incorporate human oversight and feedback into the AI's decision-making process, improving reliability and addressing ethical concerns. Collaborative AI, where humans and AI work together, leverages the strengths of both, improving outcomes and mitigating risks.
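A common way to put a human in the loop is to let the system act on its own only when it is confident and the stakes are low, and to escalate everything else to a reviewer. The sketch below assumes a hypothetical `Prediction` record, a made-up confidence threshold of 0.9, and a `high_stakes` flag supplied by the caller; appropriate thresholds and escalation rules depend on the application.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model's own score in [0, 1]; hypothetical field

def route(pred, threshold=0.9, high_stakes=False):
    """Return 'auto' to accept the model output, or 'human_review' to escalate."""
    if high_stakes or pred.confidence < threshold:
        return "human_review"
    return "auto"

queue = [
    Prediction("c1", "approve", 0.97),
    Prediction("c2", "deny", 0.62),
    Prediction("c3", "approve", 0.95),
]

# Case c3 is treated as high-stakes here purely for illustration.
decisions = {p.case_id: route(p, high_stakes=(p.case_id == "c3")) for p in queue}
print(decisions)
```

Only the confident, low-stakes case is handled automatically; the uncertain case and the high-stakes case both go to a person, regardless of how sure the model claims to be.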

Conclusion

Current AI systems, while impressive, do not "learn" the way humans do. Their reliance on statistical patterns, susceptibility to bias, and lack of inherent understanding call for ethical scrutiny throughout the entire AI lifecycle, from data collection and algorithm design to deployment and ongoing monitoring. The challenges of bias, transparency, and potential misuse make responsible development urgent. Understanding these limitations is the first step: by prioritizing ethical AI development and deployment, we can harness the power of AI while mitigating its risks and ensuring it benefits all of humanity. Let's work together to promote ethical AI development and deployment.

Featured Posts

-

Houstons Rat Problem A Drug Addiction Crisis

May 31, 2025

Houstons Rat Problem A Drug Addiction Crisis

May 31, 2025 -

Increased Rent In Los Angeles After Recent Fires A Growing Concern

May 31, 2025

Increased Rent In Los Angeles After Recent Fires A Growing Concern

May 31, 2025 -

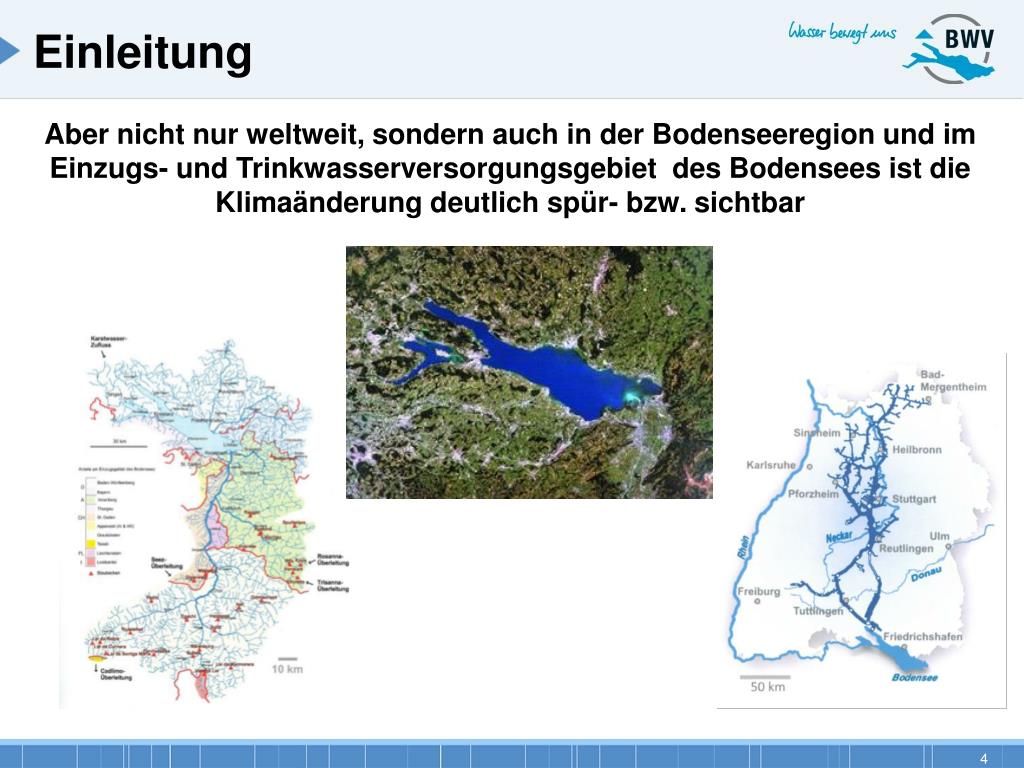

Verschwindet Der Bodensee Die Folgen Des Klimawandels Fuer Die Bodenseeregion

May 31, 2025

Verschwindet Der Bodensee Die Folgen Des Klimawandels Fuer Die Bodenseeregion

May 31, 2025 -

Exploring Bernard Keriks Family Hala Matli And His Children

May 31, 2025

Exploring Bernard Keriks Family Hala Matli And His Children

May 31, 2025 -

New Covid 19 Variant Driving Up Infections According To Who

May 31, 2025

New Covid 19 Variant Driving Up Infections According To Who

May 31, 2025