Why AI Doesn't Truly Learn And How To Use It Responsibly

The Illusion of AI Learning

AI excels at pattern recognition and prediction, often creating the illusion of true understanding. However, its processes are fundamentally different from human learning. Instead of genuine comprehension, AI relies on sophisticated algorithms that analyze vast datasets to identify statistical relationships and extrapolate patterns.

- Machine learning algorithms primarily use statistical analysis to find correlations in data.

- Deep learning models employ artificial neural networks with multiple layers to process complex data, identifying intricate patterns.

- This pattern matching allows AI to predict outcomes based on past data, mimicking learning but without the underlying comprehension.

For instance, an AI trained to identify cats in images might excel at this task, even with variations in lighting and angle. However, this "learning" is limited to the kinds of examples it was trained on. Present it with an image unlike those examples, such as a cat camouflaged in leaves, and its accuracy can drop sharply. This highlights a critical limitation of current AI models: they generalize poorly beyond their training data and struggle with truly ambiguous situations. The AI is extrapolating from known patterns, not understanding the concept of "cat." This difference between pattern recognition and understanding is key to grasping the limits of AI learning.
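The cat example can be made concrete with a toy classifier. The sketch below is only an illustration under stated assumptions: the two features (`fur_texture_score`, `contrast_with_background`) and every number are invented, and a 1-nearest-neighbour rule stands in for a real image model.

```python
from math import dist

# Hypothetical 2-D features: (fur_texture_score, contrast_with_background).
# All values are invented for illustration; a real image model uses far richer inputs.
TRAINING = [
    ((0.90, 0.80), "cat"),
    ((0.80, 0.90), "cat"),
    ((0.85, 0.70), "cat"),
    ((0.20, 0.30), "not cat"),
    ((0.10, 0.20), "not cat"),
    ((0.30, 0.10), "not cat"),
]


def predict(features):
    """Return the label of the closest training example (1-nearest neighbour)."""
    _, label = min(TRAINING, key=lambda example: dist(example[0], features))
    return label


typical_cat = (0.88, 0.75)      # resembles the cats seen during training
camouflaged_cat = (0.35, 0.15)  # still a cat, but texture and contrast are hidden

print(predict(typical_cat))      # cat
print(predict(camouflaged_cat))  # not cat
```

The classifier never represents what a cat is. It only measures distance to past examples, so an input that falls outside the training pattern is matched to whatever happens to be nearest.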

How AI "Learns": Data Dependency and Bias

AI's "learning" is profoundly dependent on the data used to train it. The quality, quantity, and, crucially, the bias of this training data directly affect the AI's performance and potential for harm. Algorithmic bias often stems from biases already present in the data, leading to unfair or discriminatory outcomes.

- Training data acts as the foundation upon which AI models are built. Biased data leads to biased algorithms.

- For example, a facial recognition system trained predominantly on images of light-skinned individuals may perform poorly when identifying individuals with darker skin tones.

- This bias has significant consequences in various applications: hiring processes, loan applications, and even criminal justice systems, where biased AI could perpetuate existing inequalities.

The ethical implications of data bias are profound. They demand rigorous scrutiny of training data and concrete mitigation strategies, and addressing them is essential to building fair AI systems.
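How under-representation turns into unequal performance can be shown with a small, deterministic sketch. Everything here is an assumption made for illustration: the single numeric feature, the groups "A" and "B", the data values, and the simple threshold model. It is not a description of any real facial recognition system.

```python
# Each sample is (feature, true_label, group). All values are invented.
TRAIN = [
    (0.1, False, "A"), (0.2, False, "A"), (0.3, False, "A"), (0.4, False, "A"),
    (0.6, True, "A"), (0.7, True, "A"), (0.8, True, "A"), (0.9, True, "A"),
    (0.15, False, "B"),  # group B is barely represented in training
]
TEST = [
    (0.25, False, "A"), (0.35, False, "A"), (0.65, True, "A"), (0.75, True, "A"),
    (0.05, False, "B"), (0.15, False, "B"), (0.35, True, "B"), (0.45, True, "B"),
]


def fit_threshold(samples):
    """Pick the cut-off that misclassifies the fewest training samples."""
    candidates = sorted({x for x, _, _ in samples})

    def errors(threshold):
        return sum((x >= threshold) != label for x, label, _ in samples)

    return min(candidates, key=errors)


def accuracy_by_group(samples, threshold):
    """Return {group: fraction of samples classified correctly}."""
    totals, correct = {}, {}
    for x, label, group in samples:
        totals[group] = totals.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + ((x >= threshold) == label)
    return {group: correct[group] / totals[group] for group in totals}


threshold = fit_threshold(TRAIN)
result = accuracy_by_group(TEST, threshold)
print(threshold)  # 0.6
print(result)     # {'A': 1.0, 'B': 0.5}
```

The cut-off is tuned almost entirely to group A, whose positive examples sit above 0.6. Group B's positive examples sit lower on the same scale, so the model misses all of them while still looking accurate on the training set.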

The Absence of Contextual Understanding and Common Sense

A significant difference between human and AI learning lies in contextual understanding and common sense reasoning. Humans effortlessly incorporate context and prior knowledge into their learning, enabling them to navigate complex situations and apply knowledge flexibly. AI currently lacks this crucial ability.

- Human learning involves building complex mental models incorporating context, experience, and emotion.

- AI learning, on the other hand, is primarily pattern-based, lacking the capacity for nuanced contextual understanding or common-sense reasoning.

Consider an AI tasked with understanding a sentence like, "The bat flew out of the cave." A human immediately understands from context that this is a flying mammal, not a baseball bat. An AI system resolves the ambiguity only to the extent that its training data contained similar word patterns, so an unfamiliar phrasing can lead to an incorrect interpretation. This lack of contextual understanding and symbolic reasoning highlights a major limitation of current AI, leaving a significant gap between today's AI and artificial general intelligence (AGI).
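A deliberately simple cue-overlap heuristic illustrates what pattern-based disambiguation looks like. This is a sketch, not how any particular production system works: the `SENSE_CUES` table and its word lists are hypothetical, and the function just counts shared words.

```python
# Hypothetical cue words observed alongside each sense of "bat".
SENSE_CUES = {
    "animal": {"flew", "cave", "wings", "nocturnal"},
    "sports equipment": {"swung", "baseball", "hit", "pitch"},
}


def disambiguate(sentence, senses=SENSE_CUES):
    """Return the sense sharing the most cue words with the sentence, or None."""
    words = set(sentence.lower().replace(".", "").split())
    scores = {sense: len(words & cues) for sense, cues in senses.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


print(disambiguate("The bat flew out of the cave."))                # animal
print(disambiguate("The bat slipped from his hands at the plate."))  # None
```

The first sentence works because "flew" and "cave" happen to be in the cue list. The second is obvious to a human who knows what happens at home plate, but the heuristic has no stored pattern for it and nothing to fall back on.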

Responsible AI Use: Mitigation Strategies

To mitigate the risks associated with biased or limited AI systems, responsible AI development and deployment are critical. This involves implementing strategies to enhance fairness, transparency, and accountability.

- Careful data curation and bias detection: Employing techniques to identify and mitigate biases in training data is crucial.

- Transparency and explainability of AI algorithms (XAI): Understanding how an AI reaches its conclusions is vital for identifying and addressing potential biases.

- Human oversight and intervention: Maintaining human control over AI systems, allowing for intervention when necessary, is essential.

- Continuous monitoring and evaluation of AI performance: Regularly assessing AI performance and adapting models based on feedback can help to identify and correct biases or errors. AI auditing plays a significant role here.

By adhering to these practices, we can strive for greater fairness and safety in AI applications. AI governance structures and regulations are increasingly important as AI becomes more integrated into our lives.
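One way the monitoring and bias-detection steps listed above could be put into practice is to compare how often each group receives a favourable decision, a measure sometimes called a demographic parity gap. The sketch below is a minimal illustration: the decision log, the group names, and `ALERT_THRESHOLD` are all hypothetical, and a real audit would look at more than one metric.

```python
from collections import defaultdict

ALERT_THRESHOLD = 0.2  # hypothetical tolerance; a real policy would set its own


def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    counts = defaultdict(lambda: [0, 0])  # group -> [approved, total]
    for group, approved in decisions:
        counts[group][0] += int(approved)
        counts[group][1] += 1
    return {group: approved / total for group, (approved, total) in counts.items()}


def parity_gap(rates):
    """Difference between the highest and lowest group approval rates."""
    return max(rates.values()) - min(rates.values())


# Invented decision log for two groups.
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(decisions)
gap = parity_gap(rates)
needs_review = gap > ALERT_THRESHOLD

print(rates)         # {'A': 0.75, 'B': 0.25}
print(gap)           # 0.5
print(needs_review)  # True
```

A large gap does not prove the system is unfair on its own, but it is a cheap signal that can trigger the human review described above.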

Conclusion: Understanding the Limits of AI Learning

AI's "learning" is fundamentally different from human learning: it relies heavily on data and lacks true understanding and common sense. While AI offers incredible potential, its limitations demand a responsible approach to development and deployment. Ethical AI principles such as fairness, transparency, and accountability should guide every stage of the AI lifecycle. By understanding the limits of AI learning and embracing responsible AI practices, we can harness the power of artificial intelligence while mitigating its risks. Let's proactively shape the future of AI so that it serves humanity ethically and beneficially. Learn more about responsible AI development and advocate for ethical considerations in this rapidly evolving field.

Featured Posts

-

Spring 2024 Uncanny Resemblance To 1968 And Its Implications For Summer Drought

May 31, 2025

Spring 2024 Uncanny Resemblance To 1968 And Its Implications For Summer Drought

May 31, 2025 -

Munguia Vs Surace Ii Munguia Secures Points Decision In Riyadh

May 31, 2025

Munguia Vs Surace Ii Munguia Secures Points Decision In Riyadh

May 31, 2025 -

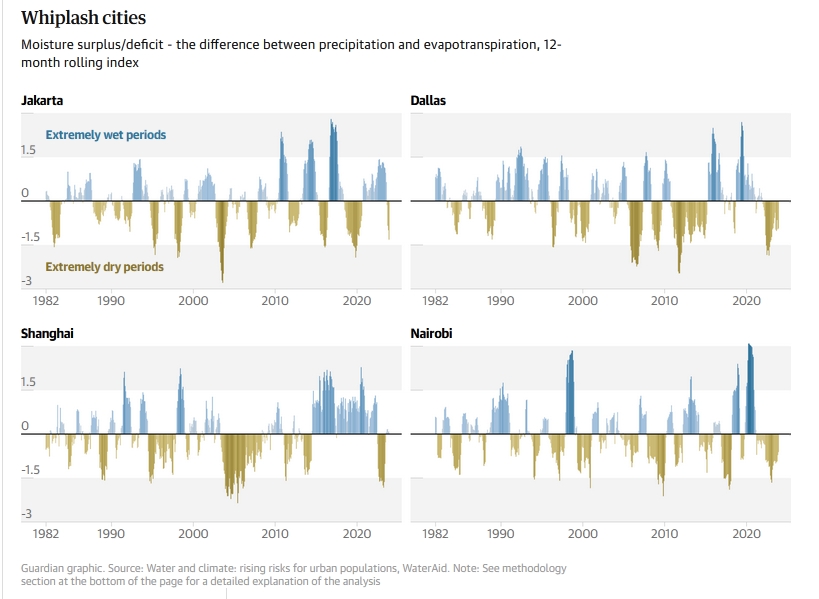

Dangerous Climate Whiplash A Growing Threat To Cities Around The World

May 31, 2025

Dangerous Climate Whiplash A Growing Threat To Cities Around The World

May 31, 2025 -

Former Nypd Commissioner Bernard Kerik Hospitalized Full Recovery Expected

May 31, 2025

Former Nypd Commissioner Bernard Kerik Hospitalized Full Recovery Expected

May 31, 2025 -

How To Lose Your Mother Molly Jongs Memoir Explained Briefly

May 31, 2025

How To Lose Your Mother Molly Jongs Memoir Explained Briefly

May 31, 2025