AI Therapy: Surveillance In A Police State?

The Allure and Accessibility of AI Therapy

AI therapy appeals largely because it could transform access to mental healthcare.

Cost-Effectiveness and Accessibility

Traditional therapy can be prohibitively expensive and geographically limited. AI therapy offers a potential solution:

- Lower Costs: AI-powered platforms often cost significantly less than in-person therapy sessions, making mental healthcare more affordable.

- 24/7 Availability: Unlike human therapists, AI therapy platforms are available around the clock, offering support whenever needed. This is particularly beneficial for individuals experiencing crises or those in remote areas with limited access to healthcare professionals.

- Anonymity: For individuals who are uncomfortable disclosing personal information in traditional settings, the anonymity offered by some AI platforms can be a significant advantage.

Together, these features open affordable mental health support to many people who previously lacked access to care, including individuals with mobility issues and those in rural communities underserved by traditional healthcare. As a result, AI-powered mental health tools could become a vital resource in addressing the global mental health crisis.

Personalized Treatment Plans

AI's ability to analyze large datasets could enable highly personalized treatment plans.

- Personalized Feedback: AI systems can provide tailored feedback and adjust treatment strategies based on individual responses and progress.

- Adaptive Algorithms: Adaptive algorithms can learn from each interaction, continually refining their approach to optimize treatment outcomes.

- Data-Driven Insights: AI can analyze patient data to identify patterns and predict potential issues, allowing for proactive interventions.

- Improved Treatment Outcomes: By applying data analytics to patient care, AI could improve treatment efficacy and reduce relapse rates. The promise of precision mental health, built on AI-driven diagnostics, is a major driver of adoption.

The Surveillance Risks of AI Therapy

While promising, the integration of AI into mental healthcare raises significant concerns about surveillance and potential misuse.

Data Privacy and Security

Sensitive mental health data is highly vulnerable to breaches and misuse.

- Potential for Data Leaks: AI platforms, like any digital system, are susceptible to hacking and data breaches, exposing confidential patient information.

- Lack of Robust Security Measures: Robust security is critical, but its implementation often lags behind the technology itself, increasing the risk of unauthorized access.

- Unauthorized Access: Weak security protocols can lead to unauthorized access to personal data, potentially resulting in identity theft or other forms of harm.

- Use of Data for Purposes Other Than Treatment: Data collected through AI therapy platforms could be used for purposes unrelated to clinical care, violating patient trust and confidentiality. Compliance with regulations such as HIPAA in the United States and the GDPR in the European Union is essential to addressing these concerns.

Algorithmic Bias and Discrimination

AI algorithms can perpetuate existing societal biases, leading to unfair or discriminatory outcomes.

- Biased Datasets: If the datasets used to train AI algorithms are biased, the resulting AI systems will likely reflect and amplify those biases, leading to unequal access to care.

- Lack of Diversity in AI Development Teams: A lack of diversity among AI developers can contribute to biased algorithms, as the developers may not fully understand the needs and experiences of diverse populations.

- Algorithmic Discrimination: This can manifest as biased diagnoses, inappropriate treatment recommendations, or unequal access to care based on factors such as race, gender, or socioeconomic status.

- Unequal Access to Care: AI systems designed with inherent biases can exacerbate existing health disparities, further widening inequalities in access to quality mental healthcare. Addressing this requires deliberate attention to fairness and ethics throughout AI design.
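One way an audit might begin to surface the unequal outcomes described above is to compare how often a system recommends a given intervention across different groups. The sketch below is a minimal illustration under stated assumptions: it takes a hypothetical log of (group, referred) pairs, and the function names `referral_rates` and `disparity_ratio`, as well as the sample data, are invented for this example rather than drawn from any real platform.

```python
from collections import defaultdict


def referral_rates(records):
    """Return the share of records in each group that received a referral.

    Assumes `records` is an iterable of (group, referred) pairs,
    where `referred` is a bool. This input format is hypothetical.
    """
    totals = defaultdict(int)
    referred = defaultdict(int)
    for group, was_referred in records:
        totals[group] += 1
        if was_referred:
            referred[group] += 1
    return {group: referred[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Ratio of the lowest group rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        # No group was referred at all, so there is no disparity to measure.
        return 1.0
    return min(rates.values()) / highest


# Hypothetical toy data: (group label, whether the system recommended specialist care)
sample = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = referral_rates(sample)
print(rates)                             # {'group_a': 0.75, 'group_b': 0.25}
print(round(disparity_ratio(rates), 2))  # 0.33
```

In this toy data, group_a is referred three times as often as group_b. Where to set a warning threshold is a policy judgment, and a low ratio may also reflect genuine differences in clinical need, so a result like this should prompt investigation rather than settle the question.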

Potential for State Surveillance

The potential for government misuse of AI therapy data is a significant concern.

- Data Sharing with Government Agencies: There's a risk that patient data could be shared with government agencies without proper consent or oversight.

- Potential for Profiling and Surveillance: This information could be used for profiling and surveillance purposes, potentially violating individual privacy and civil liberties.

- Erosion of Patient Confidentiality: The use of AI therapy data for purposes beyond clinical care could erode the trust essential for effective mental healthcare.

- Implications for Civil Liberties: Unchecked use of AI in mental healthcare could significantly erode civil liberties and, in the worst case, contribute to a police state in which technology is used to monitor and control citizens. The implications for digital rights cannot be overlooked.

Mitigating the Risks and Ensuring Ethical Use

Addressing the risks associated with AI therapy requires proactive measures.

Robust Data Protection Regulations

Stronger laws and regulations are crucial for protecting patient data privacy.

- Stricter Data Encryption: Implementing strong encryption standards is vital to prevent unauthorized access to sensitive information.

- Enhanced Security Protocols: Regular security audits and updates are needed to identify and address vulnerabilities.

- Clear Guidelines on Data Sharing: Clear, transparent rules should govern when and how patient data may be shared with third parties.

- Penalties for Misuse: Meaningful penalties for the misuse of patient data are needed to deter unethical behavior. Data protection laws and privacy regulations must evolve to keep pace with rapid developments in AI.

Transparency and Accountability in AI Development

Transparency and accountability are essential for responsible AI development.

- Explainable AI (XAI): AI systems should be designed to be transparent and explainable, allowing users and regulators to understand how decisions are made.

- Independent Audits of AI Systems: Regular independent audits are needed to ensure that AI systems are functioning ethically and effectively.

- Ethical Guidelines for AI Developers: Clear ethical guidelines should be established for AI developers to ensure responsible development and deployment.

- Public Oversight: Public oversight mechanisms are crucial to ensure that AI systems are used responsibly and ethically, so that responsible innovation takes priority over speed.

Patient Empowerment and Informed Consent

Patient empowerment and informed consent are fundamental to ethical AI therapy.

- Clear Communication About Data Usage: Patients must be clearly informed about how their data will be used and protected.

- Right to Data Access and Deletion: Patients should have the right to access and delete their data at any time.

- Patient Autonomy: Patients must retain control over their data and treatment decisions.

- Data Ownership: Who owns patient data should be clearly defined in a way that preserves patient autonomy. Patient rights and informed consent are essential to ethical implementation.

Conclusion

AI therapy offers tremendous potential for improving access to mental healthcare and personalizing treatment. However, the potential for surveillance and misuse of sensitive data demands a cautious and ethically responsible approach. We must prioritize robust data protection regulations, transparency in AI development, and patient empowerment to prevent a dystopian scenario in which AI-powered mental healthcare becomes a tool for oppression rather than healing.

We urge readers to engage in informed discussion, advocate for policies that protect patient privacy, and contact their representatives about the responsible development and deployment of AI therapy applications. The future of AI-powered mental healthcare hinges on our collective commitment to safeguarding individual liberties and ensuring that technological advances are ethical.

Featured Posts

-

Shohei Ohtani Home Run Celebration Highlights Teamwork And Camaraderie

May 15, 2025

Shohei Ohtani Home Run Celebration Highlights Teamwork And Camaraderie

May 15, 2025 -

2023 Warner Robins Murder Case Jury Delivers Verdict

May 15, 2025

2023 Warner Robins Murder Case Jury Delivers Verdict

May 15, 2025 -

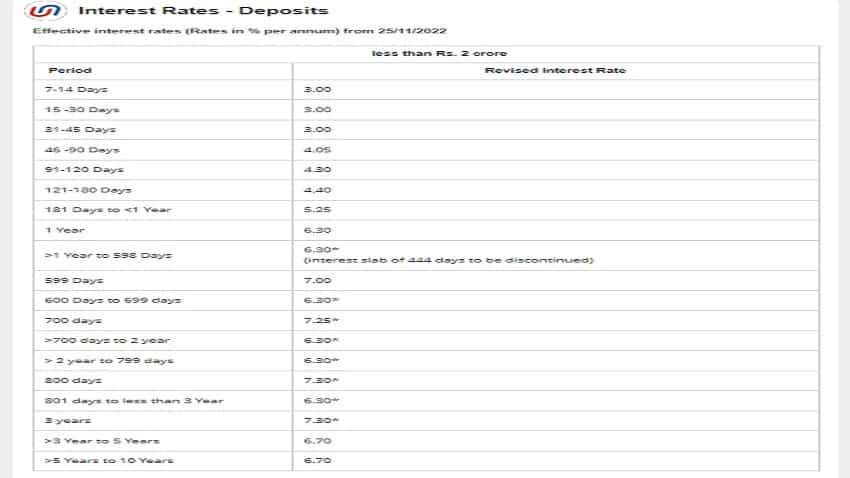

Indian Bank Fixed Deposit

May 15, 2025

Indian Bank Fixed Deposit

May 15, 2025 -

Padres 2025 Regular Season Broadcast Schedule Where To Watch And Listen

May 15, 2025

Padres 2025 Regular Season Broadcast Schedule Where To Watch And Listen

May 15, 2025 -

Navigating Crypto Exchange Regulations In India Your 2025 Compliance Checklist

May 15, 2025

Navigating Crypto Exchange Regulations In India Your 2025 Compliance Checklist

May 15, 2025

Latest Posts

-

First Up Bangladesh Yunus In China Rubios Caribbean Trip And More Top News

May 15, 2025

First Up Bangladesh Yunus In China Rubios Caribbean Trip And More Top News

May 15, 2025 -

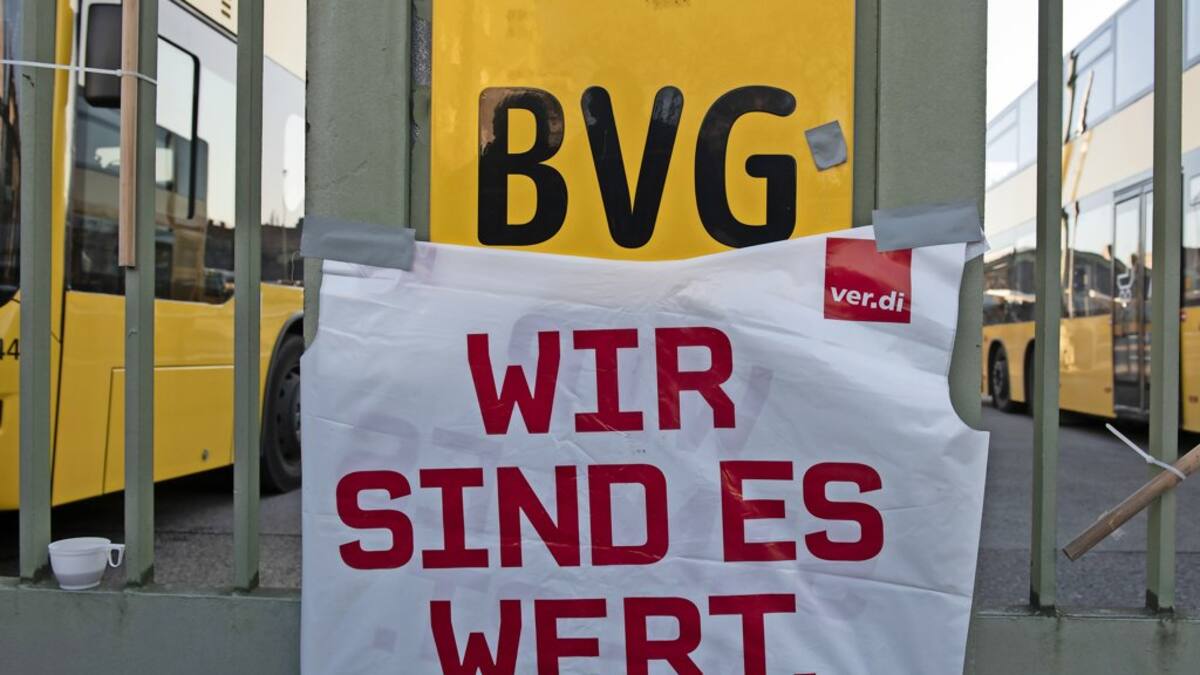

Endgueltige Einigung Im Bvg Tarifstreit Keine Streiks Mehr

May 15, 2025

Endgueltige Einigung Im Bvg Tarifstreit Keine Streiks Mehr

May 15, 2025 -

Berlin Kind Von Betrunkenen Mit Antisemitischen Parolen Angegriffen

May 15, 2025

Berlin Kind Von Betrunkenen Mit Antisemitischen Parolen Angegriffen

May 15, 2025 -

S Bahn And Bvg Update Strike Resolved Service Recovery Underway

May 15, 2025

S Bahn And Bvg Update Strike Resolved Service Recovery Underway

May 15, 2025 -

Berlin Public Transport Bvg Strike Concludes S Bahn Delays Continue

May 15, 2025

Berlin Public Transport Bvg Strike Concludes S Bahn Delays Continue

May 15, 2025