Investigation: Chicago Sun-Times Publishes False AI-Generated Information


Dissecting the Chicago Sun-Times' AI Reporting Fiasco

The Chicago Sun-Times, a respected newspaper with a long history, became embroiled in controversy when it published an article containing significant factual inaccuracies. Accounts of the incident differ in their details, but the core issue is consistent: an AI tool was allegedly used to create the article, and the resulting piece contained demonstrably false information. Corrections and public criticism followed, and the newspaper's reputation took a significant blow. The specifics of the inaccurate report, including its publication date and the nature of the factual errors, still require further investigation and analysis. (Note: Specific details and links to the original article and subsequent reporting will be inserted here once the specific incident is identified and confirmed.)

- Type of AI tool allegedly used: (To be inserted once confirmed)

- Specific inaccuracies present in the report: (To be inserted once confirmed)

- The impact of the false information on readers/public perception: The publication of false information eroded public trust in the newspaper's credibility and journalistic integrity. This underscores the potential damage AI-generated misinformation can inflict on a news organization's reputation.

- The newspaper's response to the error: (To be inserted once confirmed – this should include details of any public statements, corrections, or internal reviews.)

Examining the Systemic Issues Behind the AI Reporting Error

The Chicago Sun-Times AI reporting fiasco wasn't simply a matter of a malfunctioning algorithm. A confluence of factors likely contributed to the publication of false information.

Human Error: Insufficient fact-checking is a primary suspect. Even with the assistance of AI, human oversight and verification remain critical. Pressure to publish quickly, coupled with limited training in or understanding of AI's limitations, may have contributed to the lapse. Human editors may also have misinterpreted the AI-generated output.

AI Limitations: Current AI technologies, even the most sophisticated, are not infallible. AI tools used in journalism may lack the nuanced understanding of context and fact-checking capabilities that human journalists possess. Poorly constructed prompts or inadequate training data can lead to inaccurate or biased output.

Ethical Concerns: The incident raises significant ethical questions surrounding the use of AI in journalism. Transparency about the use of AI tools is paramount. News organizations have a moral obligation to be upfront with their audience about how AI is being integrated into their reporting processes. Accountability for inaccuracies arising from AI-generated content is another critical ethical consideration.

- Potential flaws in the AI's algorithm or training data: (Analysis of potential algorithm biases or data gaps needs to be added here based on confirmed details of the incident).

- Lack of human intervention/verification processes: The incident highlights a critical need for robust human editorial oversight to verify information generated by AI tools.

- The role of editorial oversight in preventing such errors: Strong editorial processes, including multiple levels of review and fact-checking, are essential to mitigate the risk of publishing false information.

- The need for better AI literacy among journalists: Journalists need training on how to effectively and ethically use AI tools, understanding their limitations and potential for error.

Preventing Future AI Reporting Errors: Best Practices and Recommendations

The Chicago Sun-Times incident serves as a cautionary tale, emphasizing the need for robust safeguards and best practices when integrating AI into journalistic workflows.

Best Practices: Thorough fact-checking, even for AI-generated content, remains non-negotiable. Human oversight at every stage of the process is critical. Transparency with readers about the use of AI in reporting is essential to maintaining trust. Establishing clear ethical guidelines for AI use in journalism is crucial.

Industry Response: (This section should detail any changes in industry policies, guidelines, or best practices that have emerged in response to similar incidents).

Future of AI Journalism: The long-term implications of AI in journalism are vast. AI offers potential gains in efficiency and scale, but news organizations must adopt it responsibly. The focus should be on developing AI tools with built-in safeguards against misinformation and on educating journalists to use these tools ethically and effectively.

- Implementation of robust fact-checking mechanisms: Integrating sophisticated fact-checking tools and processes into the workflow is vital.

- Improved training for journalists on using AI tools ethically and effectively: News organizations should invest in comprehensive training programs for their journalists on the ethical use of AI.

- Development of AI tools with built-in safeguards against misinformation: Future AI tools should be designed with built-in features to detect and flag potential inaccuracies or biases.

- Increased transparency in the use of AI in news production: News organizations should be transparent with their audiences about their use of AI in the newsgathering and reporting process.

The Chicago Sun-Times Incident and the Future of Responsible AI in News

The Chicago Sun-Times incident underscores the critical need for responsible AI implementation in journalism. The error highlights the dangers of relying solely on AI-generated content without robust fact-checking and human oversight, and it demonstrates that even reputable news organizations are vulnerable to spreading misinformation when appropriate safeguards are not in place. Three contributing factors (human error, AI limitations, and ethical shortcomings) likely all played a role. The lessons learned must lead to improved practices and a greater emphasis on transparency and accountability.

Let's ensure responsible AI use in journalism. Demand transparency and accountability from news organizations regarding their AI practices. Contact your local news outlets and voice your concerns about the use of AI in reporting. The future of credible news depends on it.

Featured Posts

-

Van Bankrekening Naar Tikkie Essentiele Nederlandse Bankzaken

May 22, 2025

Van Bankrekening Naar Tikkie Essentiele Nederlandse Bankzaken

May 22, 2025 -

New Thriller Echo Valley Images Featuring Sydney Sweeney And Julianne Moore Released

May 22, 2025

New Thriller Echo Valley Images Featuring Sydney Sweeney And Julianne Moore Released

May 22, 2025 -

Van Rekening Naar Tikkie Veilig En Efficient Betalen In Nederland

May 22, 2025

Van Rekening Naar Tikkie Veilig En Efficient Betalen In Nederland

May 22, 2025 -

Cau Ma Da Day Nhanh Tien Do Ket Noi Giao Thong Giua Dong Nai Va Binh Phuoc

May 22, 2025

Cau Ma Da Day Nhanh Tien Do Ket Noi Giao Thong Giua Dong Nai Va Binh Phuoc

May 22, 2025 -

Sydney Sweeney Glumi U Filmu Prema Redditu Prici

May 22, 2025

Sydney Sweeney Glumi U Filmu Prema Redditu Prici

May 22, 2025

Latest Posts

-

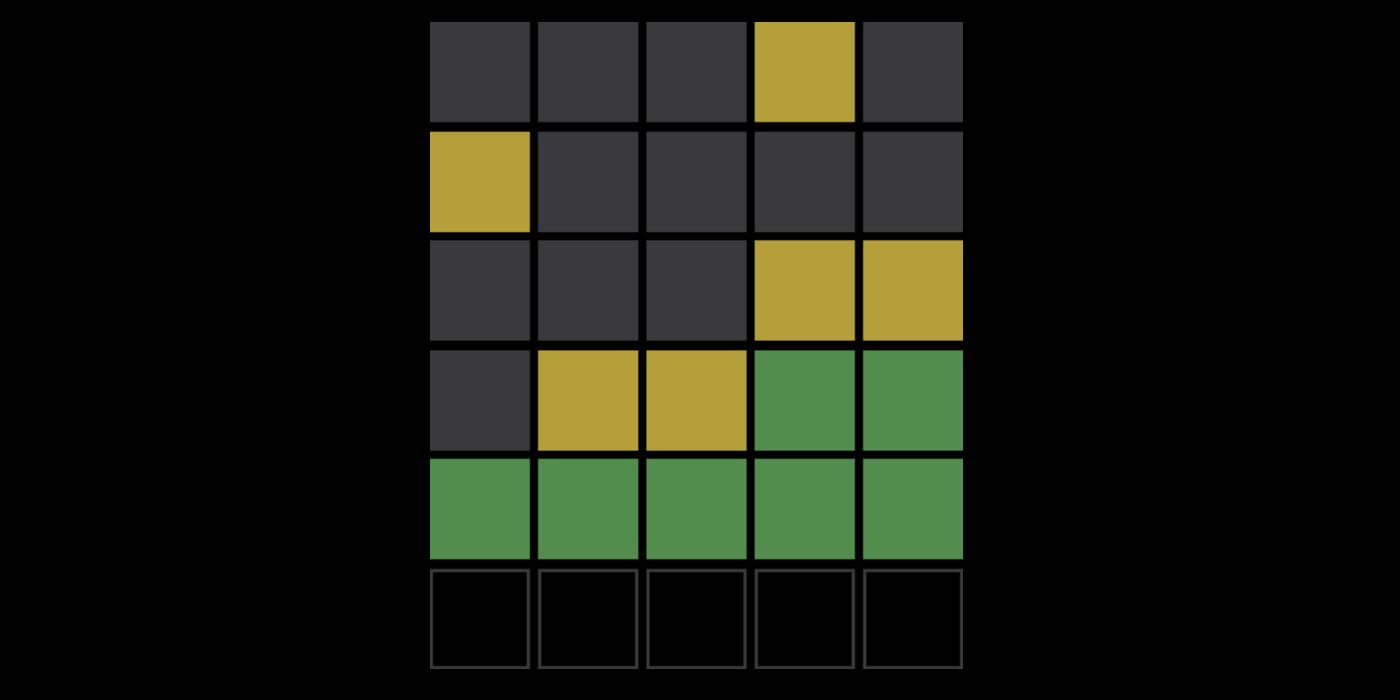

Wordle 1393 Hints And Solution April 12th

May 22, 2025

Wordle 1393 Hints And Solution April 12th

May 22, 2025 -

Wordle 1389 Hints And Answer April 8 Nyt

May 22, 2025

Wordle 1389 Hints And Answer April 8 Nyt

May 22, 2025 -

Year Over Year Gas Price Comparison Virginia Shows 50 Cent Decrease

May 22, 2025

Year Over Year Gas Price Comparison Virginia Shows 50 Cent Decrease

May 22, 2025 -

Gas Prices Surge Nearly 20 Cent Increase Per Gallon

May 22, 2025

Gas Prices Surge Nearly 20 Cent Increase Per Gallon

May 22, 2025 -

Wordle March 2nd 1352 Hints Clues And Answer

May 22, 2025

Wordle March 2nd 1352 Hints Clues And Answer

May 22, 2025