Misconceptions About AI Learning: Towards A Responsible Approach

Myth 1: AI is Sentient and Conscious

Understanding AI's Limitations:

AI systems, even the most advanced, operate based on algorithms and data. They lack consciousness, self-awareness, and subjective experiences. Believing otherwise reflects a fundamental misunderstanding of how AI, including sophisticated machine learning and deep learning models, actually functions. These systems are powerful tools for processing information and making predictions, but they don't "think" or "feel" in the human sense.

- AI mimics human intelligence through complex calculations, not genuine understanding. Think of a sophisticated chess-playing AI: it can beat grandmasters, but it doesn't understand the strategic nuances of the game in the way a human player does.

- Current AI lacks the capacity for genuine emotions, empathy, or independent thought. While AI can process and respond to emotional cues in text or images, it doesn't experience emotions itself.

- Anthropomorphizing AI can lead to unrealistic expectations and ethical concerns. Attributing human-like qualities to AI can create a false sense of trust or dependence, leading to potential risks.

Further Detail: It's important to distinguish between narrow or weak AI (designed for specific tasks, like facial recognition or spam filtering) and general or strong AI (hypothetical AI with human-level intelligence). Currently, all existing AI systems fall into the category of narrow AI. The Turing Test, a proposed benchmark for machine intelligence, assesses whether a machine can exhibit behavior equivalent to, or indistinguishable from, that of a human. Passing it would not by itself indicate true consciousness or understanding.

Myth 2: AI Learning is Completely Objective and Unbiased

The Role of Data Bias in AI Systems:

AI systems learn from the data they are trained on. If this data reflects existing societal biases, the AI can perpetuate and even amplify those biases. This is a critical issue in responsible AI development. The "garbage in, garbage out" principle applies forcefully here.

- Biased datasets can lead to discriminatory outcomes in areas like loan applications, facial recognition, and criminal justice. For example, a facial recognition system trained primarily on images of white faces might be less accurate at identifying people of color.

- Addressing bias requires careful data curation, algorithm design, and ongoing monitoring. This involves actively seeking diverse and representative datasets, designing algorithms that are less susceptible to bias, and constantly monitoring the system's performance for signs of unfairness.

- Techniques like fairness-aware algorithms and data augmentation can mitigate bias. Fairness-aware algorithms are designed to explicitly consider fairness metrics during training, while data augmentation techniques can help balance datasets by creating synthetic data points.
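One way to make "monitoring for signs of unfairness" concrete is to compare a model's behavior across groups. The sketch below is a minimal illustration, not a complete fairness audit: the function names `group_metrics` and `selection_rate_gap`, the toy labels, and the group names `"a"` and `"b"` are all hypothetical. It computes per-group accuracy and the gap in positive-prediction rates between groups, a quantity often called the demographic parity difference.

```python
from collections import defaultdict


def group_metrics(y_true, y_pred, groups):
    """Return accuracy and positive-prediction (selection) rate per group."""
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "selected": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(truth == pred)
        c["selected"] += int(pred == 1)
    return {
        g: {
            "accuracy": c["correct"] / c["n"],
            "selection_rate": c["selected"] / c["n"],
        }
        for g, c in counts.items()
    }


def selection_rate_gap(metrics):
    """Largest difference in selection rate between any two groups."""
    rates = [m["selection_rate"] for m in metrics.values()]
    return max(rates) - min(rates)


# Hypothetical toy data: 1 = favorable outcome (for example, loan approved).
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

metrics = group_metrics(y_true, y_pred, groups)
gap = selection_rate_gap(metrics)
for name, m in metrics.items():
    print(name, m)
print("selection rate gap:", gap)
```

A large gap does not prove discrimination on its own, since groups can differ in their true outcomes, but it is a signal that the data and model deserve closer review.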

Further Detail: Examples of AI bias are numerous and concerning. Loan applications denied unfairly due to biased algorithms, discriminatory hiring practices reinforced by AI-powered recruitment tools, and inaccurate crime prediction models disproportionately affecting certain communities are all real-world consequences of neglecting data bias in AI development. Detecting and mitigating bias requires a multi-faceted approach involving data scientists, ethicists, and policymakers.

Myth 3: AI Learning is a "Set it and Forget it" Process

The Importance of Continuous Monitoring and Evaluation:

AI models require ongoing monitoring and refinement. A model that performed well at launch can lose accuracy as new data arrives and circumstances change. This is often overlooked, leading to ineffective and potentially harmful AI systems.

- Regular updates and retraining are essential to maintain accuracy and effectiveness. As new data becomes available, AI models need to be updated to reflect these changes and maintain their performance.

- Monitoring for bias, errors, and unexpected behavior is crucial for responsible AI deployment. Continuous monitoring helps detect and address issues before they escalate and cause harm.

- Feedback loops and human oversight are vital to keep AI systems aligned with ethical principles and to ensure they are used responsibly.
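Human oversight is often implemented by sending uncertain predictions to a person instead of acting on them automatically. The following is a minimal sketch under stated assumptions: the `Decision` class, the `route` function, the 0.9 threshold, and the sample predictions are hypothetical, and a real system would choose its threshold from validation data.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float
    needs_review: bool


def route(label, confidence, threshold=0.9):
    """Accept confident predictions; flag the rest for a human reviewer."""
    return Decision(label, confidence, needs_review=confidence < threshold)


# Hypothetical model outputs as (predicted label, confidence) pairs.
predictions = [("approve", 0.97), ("deny", 0.62), ("approve", 0.90)]

decisions = [route(label, conf) for label, conf in predictions]
review_queue = [d for d in decisions if d.needs_review]

for d in review_queue:
    print(f"Needs human review: {d.label} (confidence {d.confidence:.2f})")
```

Corrections made by reviewers can then be logged and fed into the next retraining cycle, which closes the feedback loop.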

Further Detail: "Model drift" describes the degradation of an AI model's performance over time as the data or environment changes. Ongoing evaluation and retraining are necessary to detect and correct drift and to maintain the accuracy and reliability of AI systems. Human-in-the-loop systems, where humans take part in the decision-making process, help ensure accountability and keep ethical considerations in view.
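Drift in an input feature can be checked by comparing its distribution at training time with its distribution in production. The sketch below uses the Population Stability Index (PSI), one of several common drift statistics. The function name `psi`, the bin count, the small floor used to avoid taking the logarithm of zero, and the synthetic data are assumptions made for illustration.

```python
import math


def psi(reference, current, bins=10):
    """Population Stability Index between two samples of one numeric feature.

    Bins are equal-width over the reference range; values outside that
    range are counted in the first or last bin.
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


# Synthetic example: the production data is shifted upward by 0.5.
reference = [i / 100 for i in range(100)]
shifted = [v + 0.5 for v in reference]

print("no drift:", psi(reference, reference))
print("shifted: ", psi(reference, shifted))
```

A commonly cited rule of thumb treats a PSI below 0.1 as little change and above 0.25 as a shift worth investigating, but these cutoffs are conventions and should be validated for each application.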

Myth 4: AI Learning Will Necessarily Lead to Mass Unemployment

AI as a Tool for Augmentation, Not Replacement:

While AI may automate certain tasks, it is more likely to augment human capabilities than entirely replace human workers. This is a crucial distinction to make. AI is a tool; its impact on employment depends on how we choose to utilize it.

- AI can handle repetitive tasks, freeing up humans for more creative and strategic work. This can lead to increased productivity and efficiency, allowing humans to focus on tasks that require higher-level skills and critical thinking.

- New job roles are likely to emerge in areas like AI development, maintenance, and ethical oversight as the use of AI grows.

- Reskilling and upskilling initiatives are crucial to adapt to the changing job market. Investing in education and training programs will help workers adapt to the evolving needs of the job market.

Further Detail: The transformative potential of AI necessitates proactive measures to mitigate potential job displacement. Focusing on human-AI collaboration, where humans and AI work together to achieve common goals, can unlock greater productivity and innovation. Investing in education and retraining programs that equip workers with the skills needed to thrive in an AI-driven economy is essential for a smooth transition.

Conclusion:

This article challenged common misconceptions surrounding AI learning, highlighting the importance of responsible AI development. Understanding the limitations of current AI technology, the role of data bias, the need for continuous monitoring, and the potential for human-AI collaboration is a crucial step towards harnessing the benefits of AI while mitigating its risks. Responsible AI learning requires a multidisciplinary approach.

Call to Action: Let's move towards a future where AI learning is developed and deployed responsibly. By understanding and addressing these misconceptions, we can foster a more ethical and beneficial integration of AI into our society. Learn more about responsible AI practices and contribute to the ongoing conversation around AI ethics and AI learning.

Featured Posts

-

Fentanyl And Princes Death A Report From March 26th

May 31, 2025

Fentanyl And Princes Death A Report From March 26th

May 31, 2025 -

When Do Glastonbury 2025 Resale Tickets Go On Sale Prices And More

May 31, 2025

When Do Glastonbury 2025 Resale Tickets Go On Sale Prices And More

May 31, 2025 -

Privacy Czar Probes Data Breach At Nova Scotia Power

May 31, 2025

Privacy Czar Probes Data Breach At Nova Scotia Power

May 31, 2025 -

Watch The Giro D Italia Live Stream Online Free Best Options

May 31, 2025

Watch The Giro D Italia Live Stream Online Free Best Options

May 31, 2025 -

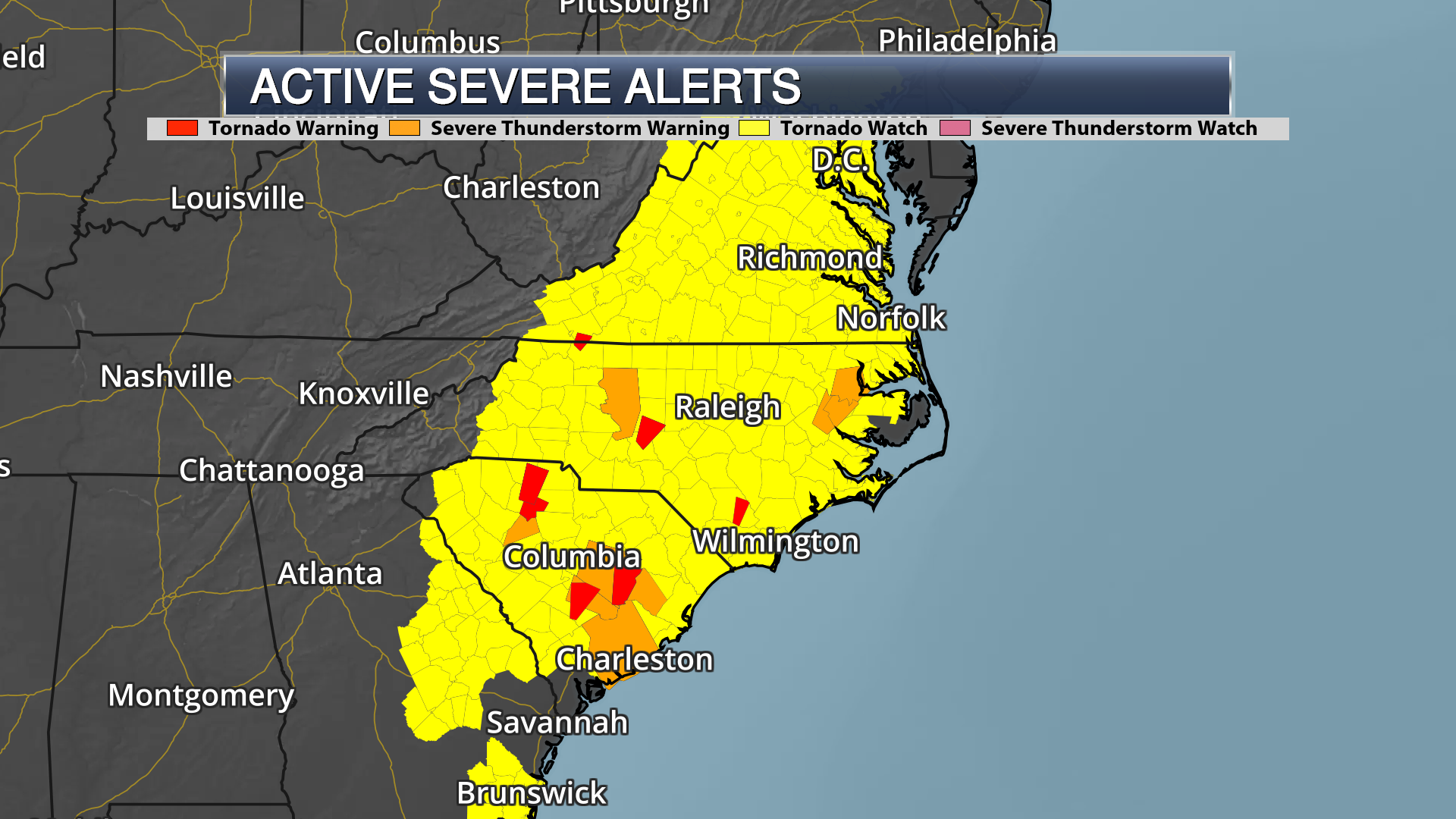

Severe Weather In The Carolinas How To Differentiate Active And Expired Storm Alerts

May 31, 2025

Severe Weather In The Carolinas How To Differentiate Active And Expired Storm Alerts

May 31, 2025