Navigating The Future Of AI After A Key Legislative Win

Understanding the New AI Regulatory Landscape

The AI Accountability Act introduces a significant shift in how AI is developed, deployed, and used. Understanding its implications is crucial for navigating the future of AI.

Key Provisions of the Legislation

The legislation contains several crucial clauses designed to promote responsible AI development. These provisions aim to balance innovation with safety and ethical considerations.

- Data Privacy: Stricter regulations on data collection, storage, and usage, particularly for sensitive personal information used in AI training. This includes enhanced consent mechanisms and limitations on data retention. Businesses will need to review their data handling practices and may need to implement new data anonymization techniques.

- Algorithmic Transparency: Requirements for developers to provide explanations for how their AI systems arrive at specific decisions, particularly in high-stakes applications like loan approvals or medical diagnoses. This promotes explainable AI (XAI) and enables scrutiny of algorithmic processes.

- Liability Frameworks: Clearer guidelines on liability in cases where AI systems cause harm or make incorrect decisions. This clarifies who is responsible (the developer, the user, or both) and establishes a framework for redress. This will encourage businesses to invest in robust testing and quality assurance processes.

The implications of these provisions are far-reaching, impacting the entire AI lifecycle, from data acquisition to system deployment and ongoing monitoring.
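To make the data-handling point concrete, here is a minimal sketch of one possible preprocessing step: dropping fields that are not needed and replacing direct identifiers with keyed hashes before records reach a training pipeline. The function names, field names, and key handling are hypothetical and not taken from the Act. Note that this is pseudonymization, which is weaker than full anonymization, and whether it would satisfy any specific legal requirement is an open assumption.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record: dict, id_fields: set, drop_fields: set, secret_key: bytes) -> dict:
    """Return a copy of `record` that is safer to pass to a training pipeline.

    Fields in `drop_fields` are removed entirely; fields in `id_fields`
    are replaced by their keyed hash; everything else is kept unchanged.
    """
    cleaned = {}
    for field, value in record.items():
        if field in drop_fields:
            continue  # data minimization: do not retain what is not needed
        if field in id_fields:
            cleaned[field] = pseudonymize(str(value), secret_key)
        else:
            cleaned[field] = value
    return cleaned


if __name__ == "__main__":
    # Hypothetical record and key, for illustration only.
    key = b"replace-with-a-secret-from-a-key-vault"
    raw = {"email": "jane@example.com", "ssn": "000-00-0000", "age": 42}
    print(scrub_record(raw, id_fields={"email"}, drop_fields={"ssn"}, secret_key=key))
```

Using a keyed hash rather than a plain hash means the mapping cannot be rebuilt by someone who only knows the list of possible identifiers, provided the key is kept secret.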

Impact on Different AI Sectors

The AI Accountability Act will affect various sectors differently, requiring tailored approaches to compliance.

- Healthcare: Hospitals and medical institutions will need to adapt their AI-powered diagnostic tools and treatment protocols to meet transparency and liability requirements. This may involve more rigorous testing and validation procedures.

- Finance: Financial institutions will face stricter regulations around algorithmic trading, credit scoring, and fraud detection, requiring enhanced transparency and audit trails. This necessitates significant investment in compliance technology and processes.

- Transportation: Autonomous vehicle developers will need to demonstrate the safety and reliability of their systems, complying with new liability frameworks and transparency requirements. This likely means incorporating more robust testing and safety features.

Each sector faces unique challenges and opportunities in adapting to this new regulatory environment. Proactive compliance will be key to maintaining competitiveness and avoiding legal repercussions.

Navigating Ethical Considerations in AI Development

The AI Accountability Act directly addresses several critical ethical concerns surrounding AI.

Addressing Algorithmic Bias and Fairness

The legislation incorporates measures to mitigate algorithmic bias, aiming to promote fairness and equity in AI systems.

- Audits: Mandatory regular audits of AI systems to identify and address potential biases in data and algorithms. This ensures ongoing monitoring and remediation of any discriminatory outcomes.

- Diversity in Development Teams: Encouraging diversity within AI development teams to reduce unconscious biases and foster more inclusive design processes. This promotes a wider range of perspectives in AI development.

- Bias Detection and Mitigation Techniques: The legislation promotes research and development of innovative methods for detecting and mitigating bias within algorithms, requiring developers to incorporate these methods into their design and development processes.

These measures aim to create AI systems that are fair, equitable, and free from discriminatory outcomes.
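As an illustration of what a bias audit might compute, the sketch below compares the rate of favorable decisions across groups. The metrics shown (demographic parity gap and disparate impact ratio) are common fairness measures chosen here as an assumption; the article does not say which metrics the Act requires, and the function names and sample data are hypothetical.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Fraction of favorable decisions (1) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(rates):
    """Difference between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    # Hypothetical audit sample: 1 = approved, 0 = denied.
    decisions = [1, 0, 1, 1, 0, 0, 1, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    rates = selection_rates(decisions, groups)
    print(rates)
    print(demographic_parity_gap(rates))
    print(disparate_impact_ratio(rates))
```

A recurring audit could run a check like this on recent decisions and flag the system for review when the gap or ratio crosses a threshold agreed with compliance staff. A single metric is only a starting point, since different fairness definitions can conflict with one another.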

Ensuring AI Transparency and Accountability

The act emphasizes the need for transparency and accountability in AI systems, requiring mechanisms for understanding how decisions are made.

- Explainable AI (XAI): The legislation encourages the development and deployment of explainable AI techniques, allowing users and regulators to understand the reasoning behind AI-driven decisions.

- Data Provenance: Requirements for tracking the origin and handling of data used to train AI systems. This ensures data integrity and enables audits to trace the source of any potential bias or error.

- Auditable Decision Trails: Mechanisms for recording and reviewing the decision-making processes of AI systems, allowing for thorough investigation in case of errors or misuse.

Transparency and accountability are essential for building public trust and ensuring the responsible use of AI.
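One way an auditable decision trail could be implemented is an append-only log in which each entry stores a hash of the previous one, so later tampering becomes detectable. The sketch below is an assumption about how such a mechanism might look, not a format prescribed by the legislation; the class name `DecisionLog` and its fields are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class DecisionLog:
    """Append-only, hash-chained record of model decisions."""

    GENESIS = "0" * 64  # placeholder hash for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body):
        # Canonical JSON so the same content always hashes the same way.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, model_version, inputs, output, timestamp=None):
        """Append one decision; `inputs` and `output` must be JSON-serializable."""
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "prev_hash": prev_hash,
        }
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry

    def verify(self):
        """Return True if no entry has been altered, removed, or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(body):
                return False
            prev_hash = entry["hash"]
        return True
```

Recording the model version alongside inputs and outputs lets an investigator tie a disputed decision back to the exact system that produced it. In practice the log would also need durable storage and access controls, which this sketch leaves out.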

Future Trends and Opportunities in the Post-Legislative Era

The AI Accountability Act is not just a set of restrictions; it also presents opportunities for innovation and collaboration.

Innovation and Investment in Compliant AI

The legislation is likely to drive innovation in areas that prioritize ethical and responsible AI development.

- Federated Learning: This technique allows AI models to be trained on decentralized data, reducing privacy risks and enabling collaboration while maintaining data security.

- Differential Privacy: Methods that add calibrated noise to data or query results to protect individual privacy while still allowing for meaningful analysis, helping organizations meet stringent data protection requirements.

- Robust Testing and Validation Procedures: Investment in rigorous testing and validation methods to ensure the safety, reliability, and fairness of AI systems. This may also lead to advances in AI safety research.
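To show what training on decentralized data can look like, here is a minimal sketch of the aggregation step used in federated averaging: each participant trains locally and shares only model parameters, which a coordinator combines in proportion to how many examples each participant used. The function name and the flat-list representation of parameters are simplifying assumptions for illustration.

```python
def federated_average(client_updates):
    """Combine locally trained parameters without collecting raw data.

    `client_updates` is a list of (parameters, num_examples) pairs, where
    `parameters` is a list of floats of the same length for every client.
    Returns the example-weighted average of the parameters.
    """
    if not client_updates:
        raise ValueError("at least one client update is required")
    total_examples = sum(n for _, n in client_updates)
    if total_examples <= 0:
        raise ValueError("total number of examples must be positive")

    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for parameters, num_examples in client_updates:
        if len(parameters) != dim:
            raise ValueError("all clients must send the same number of parameters")
        weight = num_examples / total_examples
        for i, value in enumerate(parameters):
            averaged[i] += weight * value
    return averaged


if __name__ == "__main__":
    # Hypothetical updates from two hospitals with different dataset sizes.
    updates = [([1.0, 2.0], 100), ([3.0, 4.0], 300)]
    print(federated_average(updates))
```

Sharing parameters instead of records reduces exposure but does not remove it entirely, since model updates can still leak information. This is one reason federated learning is often paired with other privacy techniques.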

This shift toward compliant AI will attract significant investment in ethical AI development and innovation.
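The differential privacy idea mentioned above can be sketched with the Laplace mechanism applied to a counting query. This is a minimal illustration under stated assumptions: the function names are hypothetical, the privacy parameter `epsilon` is chosen by the caller, and a production system would also need to track the cumulative privacy budget across queries.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise.

    The difference of two independent exponential samples with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon, rng=None):
    """Return a noisy count of the items that satisfy `predicate`.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1/epsilon is used.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical ages; the analyst sees only the noisy answer.
    ages = [23, 35, 41, 52, 67, 29]
    print(private_count(ages, lambda age: age >= 40, epsilon=0.5))
```

The trade-off is explicit: a lower `epsilon` protects individuals more strongly but makes each answer less accurate, so the value has to be set with both the analysis and the applicable data protection requirements in mind.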

The Role of Collaboration and International Standards

Establishing global best practices for AI governance is crucial to maximizing the benefits of AI while minimizing its risks.

- International Cooperation: Collaboration between governments, researchers, and businesses is needed to harmonize regulations and establish shared ethical guidelines for AI development.

- Data Sharing Agreements: Agreements that facilitate data sharing for AI research and development while respecting privacy and security requirements.

- Harmonization of Regulations: Collaboration to create a consistent set of AI regulations across different jurisdictions to avoid fragmentation and promote global AI innovation.

International cooperation is vital for ensuring responsible and sustainable development of AI worldwide.

Charting a Course Through the Future of AI

The AI Accountability Act reshapes how AI is developed, deployed, and governed. It calls for a proactive approach to compliance that builds transparency, accountability, and ethical review into every stage of the AI lifecycle. Navigating this regulatory landscape responsibly is crucial for fostering innovation while mitigating potential risks. Learn more about the specifics of the AI Accountability Act and how it will affect your industry by [link to relevant resource].

Featured Posts

-

Lou Gala The Decamerons Breakout Star Biography And Career

May 20, 2025

Lou Gala The Decamerons Breakout Star Biography And Career

May 20, 2025 -

March 18 2025 Nyt Mini Crossword Answers And Clues

May 20, 2025

March 18 2025 Nyt Mini Crossword Answers And Clues

May 20, 2025 -

Efl Trophy Victory Darren Ferguson Leads Peterborough To Historic Win

May 20, 2025

Efl Trophy Victory Darren Ferguson Leads Peterborough To Historic Win

May 20, 2025 -

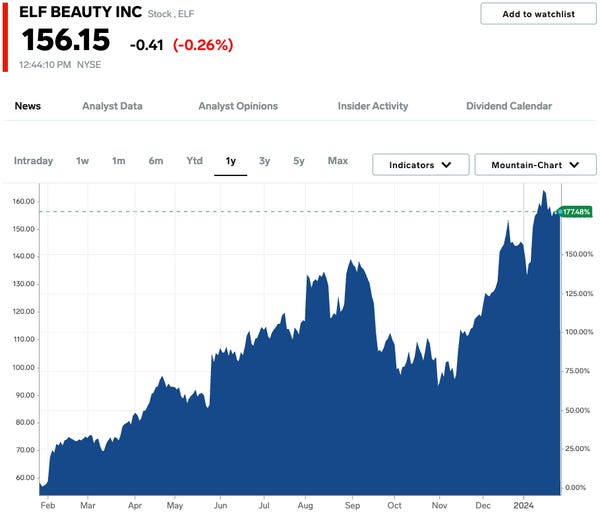

Analyzing Qbts Stock Performance Before And After Earnings

May 20, 2025

Analyzing Qbts Stock Performance Before And After Earnings

May 20, 2025 -

The Fight For Clean Energy Obstacles And Opportunities In A Booming Sector

May 20, 2025

The Fight For Clean Energy Obstacles And Opportunities In A Booming Sector

May 20, 2025

Latest Posts

-

United Kingdom Tory Wifes Imprisonment Confirmed For Anti Migrant Remarks

May 21, 2025

United Kingdom Tory Wifes Imprisonment Confirmed For Anti Migrant Remarks

May 21, 2025 -

Lawsuit Update Ex Tory Councillors Wifes Racial Hatred Tweet Case

May 21, 2025

Lawsuit Update Ex Tory Councillors Wifes Racial Hatred Tweet Case

May 21, 2025 -

Court To Decide Ex Tory Councillors Wife And The Racial Hatred Tweet

May 21, 2025

Court To Decide Ex Tory Councillors Wife And The Racial Hatred Tweet

May 21, 2025 -

Court Upholds Sentence Against Lucy Connolly For Racial Hatred

May 21, 2025

Court Upholds Sentence Against Lucy Connolly For Racial Hatred

May 21, 2025 -

Ex Tory Councillors Wifes Racial Hatred Tweet Appeal The Latest

May 21, 2025

Ex Tory Councillors Wifes Racial Hatred Tweet Appeal The Latest

May 21, 2025