Understanding AI's Learning Process: A Path To Responsible AI Development


Supervised Learning: Training AI with Labeled Data

Supervised learning forms the bedrock of many AI applications. Its core principle involves training a model on a labeled dataset—a collection of data points where each point is tagged with the correct answer or outcome. The model learns to map inputs to outputs based on this labeled data, enabling it to predict outcomes for new, unseen data. Common supervised learning algorithms include linear regression, support vector machines (SVMs), and decision trees.

- Advantages: High accuracy on specific tasks, relatively easy to understand and implement.

- Disadvantages: Requires large, meticulously labeled datasets, which can be expensive and time-consuming to create. Prone to bias if the training data reflects existing societal biases.

- Example: Image recognition systems are often trained using vast datasets of labeled images, allowing the AI to accurately identify objects like cats, dogs, or cars.
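To make the input-to-output mapping concrete, here is a minimal sketch of the simplest algorithm named above: linear regression with a single input feature, fitted using the closed-form ordinary least squares formulas. The function names and the toy dataset (generated from y = 2x + 1) are illustrative assumptions, not part of any particular library.

```python
# A minimal supervised-learning sketch (hypothetical helper names):
# fit y ≈ slope * x + intercept to labeled (x, y) pairs with ordinary least squares.

def fit_linear_regression(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) minimizing squared error on the labeled data."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two labeled (x, y) pairs of equal length")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # The OLS slope is the covariance of x and y divided by the variance of x.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("inputs must not all be identical")
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model: tuple[float, float], x: float) -> float:
    """Apply the learned parameters to a new input."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    # Hypothetical labeled data: each input x is tagged with its outcome y.
    xs = [1.0, 2.0, 3.0, 4.0]
    ys = [3.0, 5.0, 7.0, 9.0]    # generated from y = 2x + 1
    model = fit_linear_regression(xs, ys)
    print(model)                 # (2.0, 1.0)
    print(predict(model, 10.0))  # 21.0, a prediction for an unseen input
```

Real systems fit many features at once and usually rely on a library, but the idea is the same: the labels `ys` supervise the fit, and the learned parameters are then applied to inputs the model has never seen.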

The ethical implications of biased datasets in supervised learning are significant. If the training data predominantly features images of one race or gender, the resulting model might exhibit discriminatory behavior when applied to real-world scenarios. Ensuring data diversity and carefully curating datasets are critical steps in mitigating this risk.
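A simple first check on data diversity is to count how each group is represented in the labeled dataset before training. The sketch below assumes each record carries a sensitive attribute under a hypothetical key (`"group"` here) and flags any group whose share of the data falls below a chosen threshold; the 25% threshold and the records are illustrative assumptions.

```python
from collections import Counter


def representation_report(
    records: list[dict], attribute: str, min_share: float
) -> dict[str, tuple[float, bool]]:
    """Map each group to (share of the dataset, True if below min_share)."""
    counts = Counter(r[attribute] for r in records)
    total = sum(counts.values())
    return {group: (n / total, n / total < min_share) for group, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical labeled images, each tagged with a sensitive attribute "group".
    data = [{"label": "cat", "group": "A"}] * 8 + [{"label": "cat", "group": "B"}] * 2
    report = representation_report(data, "group", min_share=0.25)
    for group, (share, flagged) in report.items():
        print(group, f"{share:.0%}", "UNDERREPRESENTED" if flagged else "ok")
    # A 80% ok
    # B 20% UNDERREPRESENTED
```

Balanced counts do not guarantee a fair model, since the labels themselves can encode bias, but a skewed count is a cheap early warning.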

Unsupervised Learning: Discovering Patterns in Unlabeled Data

Unlike supervised learning, unsupervised learning tackles datasets without pre-assigned labels. The algorithm's task is to identify patterns, structures, or anomalies within the data. Common techniques include clustering (grouping similar data points), dimensionality reduction (representing data with fewer variables while preserving most of its structure), and association rule mining (discovering relationships between variables).

- Advantages: Uncovers hidden patterns and insights that might be missed by human analysts, useful for exploratory data analysis.

- Disadvantages: Results can be difficult to interpret and are harder to evaluate than in supervised learning, since there are no ground-truth labels to check them against. The discovered patterns are not always meaningful.

- Example: Customer segmentation based on purchasing behavior is a classic application of unsupervised learning. An algorithm can group customers with similar buying habits, enabling targeted marketing strategies.
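Customer segmentation of this kind is commonly done with k-means clustering. The sketch below is a plain Python version of Lloyd's k-means algorithm for two features; the customer features (annual visits, average spend per visit), the values, and the choice of the first k points as starting centroids are all assumptions made for illustration.

```python
import math

Point = tuple[float, float]


def kmeans(points: list[Point], k: int, iterations: int = 100) -> tuple[list[Point], list[int]]:
    """Lloyd's k-means: returns (centroids, cluster index for each point)."""
    if not 0 < k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    # Simplified deterministic initialization (an assumption): use the first k points.
    centroids = list(points[:k])
    assignments = [-1] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        new_assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of its assigned points.
        for c in range(k):
            members = [p for p, a in zip(points, new_assignments) if a == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = (
                    sum(p[0] for p in members) / len(members),
                    sum(p[1] for p in members) / len(members),
                )
        if new_assignments == assignments:
            break  # assignments stopped changing, so the clustering has converged
        assignments = new_assignments
    return centroids, assignments


if __name__ == "__main__":
    # Hypothetical customers: (annual visits, average spend per visit).
    customers = [(2.0, 20.0), (3.0, 25.0), (2.0, 22.0),
                 (20.0, 200.0), (22.0, 210.0), (21.0, 190.0)]
    centroids, segments = kmeans(customers, k=2)
    print(segments)  # [0, 0, 0, 1, 1, 1]
```

Because the distance is Euclidean, the spend feature dominates here; in practice features are usually standardized first, and libraries such as scikit-learn provide a `KMeans` estimator with smarter initialization.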

Even in unsupervised learning, the potential for unintended biases exists. Inherent biases in the data can lead the algorithm to discover and reinforce existing societal inequalities. Careful data analysis and validation are crucial to identify and mitigate such risks.

Reinforcement Learning: Learning Through Trial and Error

Reinforcement learning focuses on training an AI agent to interact with an environment and learn optimal strategies through trial and error. The agent receives rewards for desirable actions and penalties for undesirable ones, learning to maximize its cumulative reward over time. Common algorithms include Q-learning and SARSA.

- Advantages: Adaptable to dynamic environments, learns optimal policies through experience.

- Disadvantages: Can be computationally expensive and requires careful design of reward functions to guide learning. The learned policies can also be difficult to debug and interpret.

- Example: Agents trained to play games like Go or chess, and robots controlled in complex environments, are prime examples of reinforcement learning.
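The sketch below shows tabular Q-learning, one of the algorithms named above, on a hypothetical five-cell corridor: the agent starts in cell 0, pays a penalty of 0.01 per move, and receives a reward of 1.0 on reaching cell 4. The environment, reward values, and hyperparameters (`alpha`, `gamma`, `epsilon`) are assumptions chosen to keep the example small.

```python
import random

N_STATES = 5        # hypothetical corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Hypothetical environment: -0.01 per move, +1.0 on reaching the goal."""
    next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, -0.01, False


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> list[list[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:  # cap episode length
            # Epsilon-greedy: usually exploit the best-known action, sometimes explore.
            if rng.random() < epsilon:
                action = rng.randrange(len(ACTIONS))
            else:
                action = max(range(len(ACTIONS)), key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # Q-learning update: bootstrap from the best action in the next state,
            # with no future value once the goal is reached.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state, steps = next_state, steps + 1
    return q


if __name__ == "__main__":
    q = q_learning()
    policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
    print(policy)  # ['right', 'right', 'right', 'right']
```

The agent is never told to move right; it infers that from rewards alone, so changing the rewards changes the learned behavior. That is why reward functions need careful design.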

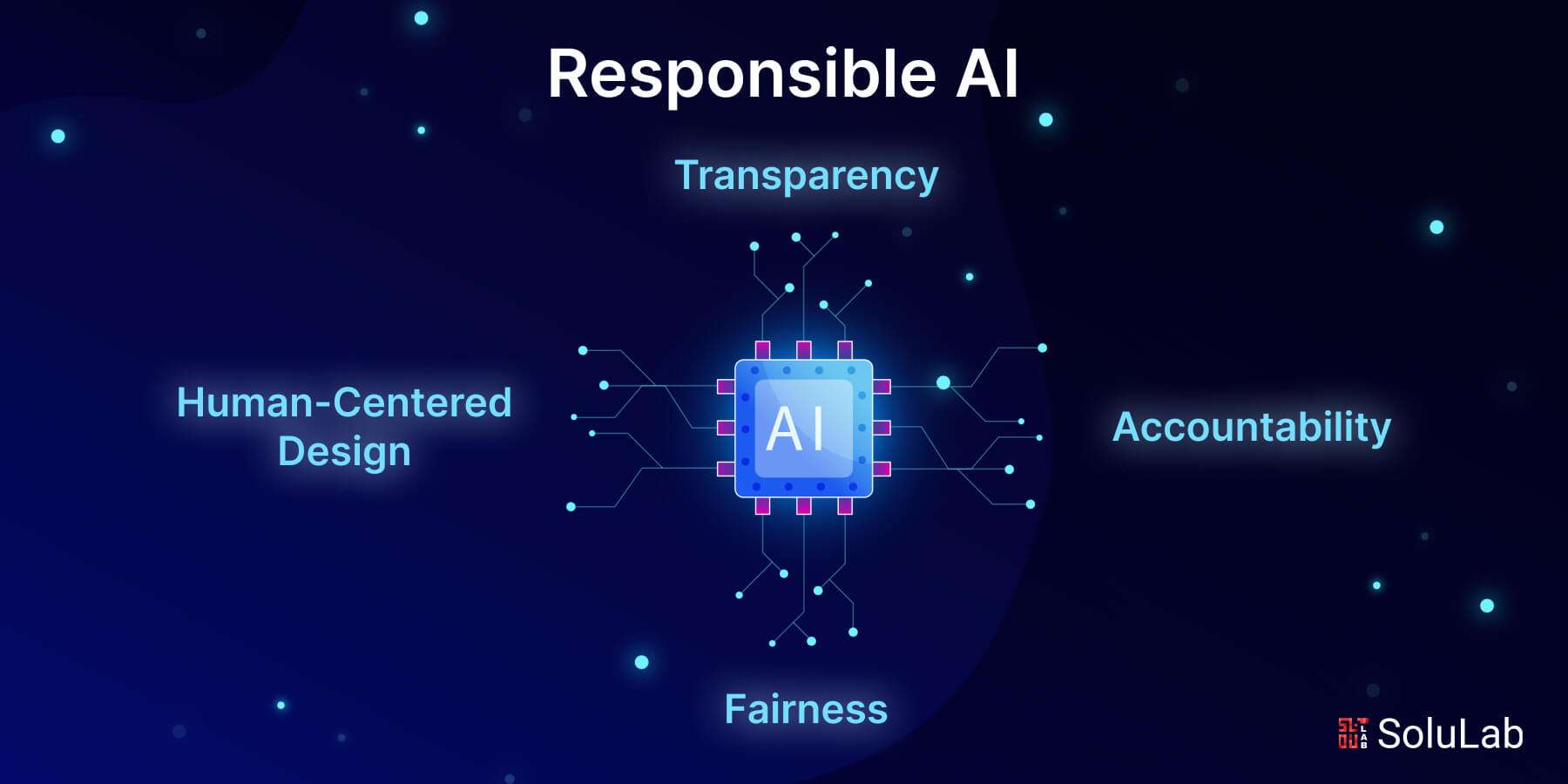

Addressing Bias in AI Learning Processes

Bias in AI training data and algorithms is a pervasive issue. This bias can stem from various sources, including historical data reflecting societal inequalities or flawed algorithms that amplify existing prejudices. Mitigating bias requires a multi-pronged approach:

- Data Augmentation: Expanding the training dataset with diverse and representative data points, for example additional examples from underrepresented groups.

- Algorithmic Fairness: Designing algorithms that explicitly account for fairness constraints.

- Careful Dataset Curation: Rigorous review and cleaning of datasets to identify and remove biased data.

- Human Oversight: Continuous monitoring and evaluation of AI systems for signs of bias.

The Importance of Transparency and Explainability in AI

Explainable AI (XAI) is paramount for building trust and accountability in AI systems. Understanding how an AI arrives at its conclusions is vital, especially in high-stakes applications like healthcare and finance. Techniques for achieving greater transparency include:

- Model Interpretability: Using models whose decision-making processes are easy to follow, such as linear models or shallow decision trees.

- Feature Importance Analysis: Identifying the factors that most strongly influence the AI's predictions.

- Visualization Techniques: Creating visual representations of the AI's internal workings.

- Clear Documentation: Providing comprehensive documentation about the AI system's training data, algorithms, and limitations.
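Feature importance analysis can be done in a model-agnostic way with permutation importance: shuffle one feature's values across the dataset and measure how much the model's accuracy drops. The sketch below assumes an already-trained model exposed as a plain function; the model (which deliberately looks only at feature 0) and the eight data points are hypothetical. scikit-learn offers a fuller implementation as `sklearn.inspection.permutation_importance`.

```python
import random
from typing import Callable, Sequence

Row = Sequence[float]


def accuracy(model: Callable[[Row], int], X: list[Row], y: list[int]) -> float:
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model: Callable[[Row], int], X: list[Row], y: list[int],
                           n_repeats: int = 20, seed: int = 0) -> list[float]:
    """Mean accuracy drop when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild each row with feature j replaced by its shuffled value.
            X_perm = [list(row[:j]) + [v] + list(row[j + 1:]) for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    # Hypothetical already-trained model: it only looks at feature 0.
    def model(row: Row) -> int:
        return 1 if row[0] > 0.5 else 0

    X = [(0.9, 0.1), (0.8, 0.7), (0.7, 0.3), (0.6, 0.9),
         (0.4, 0.2), (0.3, 0.8), (0.2, 0.5), (0.1, 0.6)]
    y = [1, 1, 1, 1, 0, 0, 0, 0]
    # Feature 1 scores 0.0 because the model ignores it; feature 0 scores higher.
    print(permutation_importance(model, X, y))
```

Because it only needs predictions, this technique works for any model, which makes it a practical complement to inherently interpretable models.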

Building a Future with Responsible AI

We've explored three primary AI learning processes: supervised, unsupervised, and reinforcement learning, each with unique strengths and weaknesses. Successfully deploying AI requires understanding these processes and actively addressing the pervasive issue of bias. Ensuring transparency and explainability is crucial for building trust and accountability.

By understanding AI's learning process, we can pave the way for a future where AI benefits all of humanity. Continue your journey into responsible AI development by exploring [link to relevant resources].

Featured Posts

-

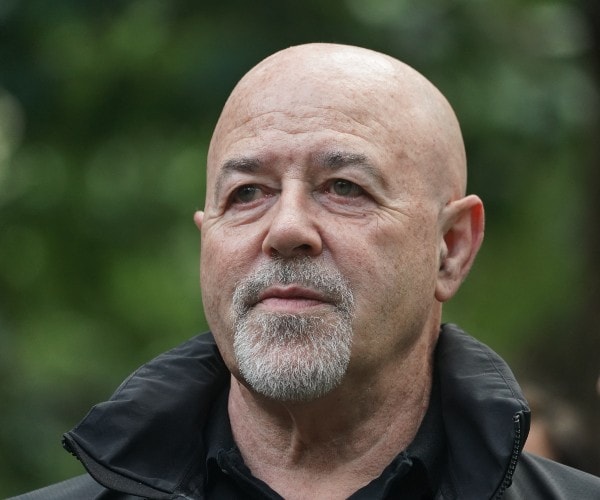

Who Is Bernard Keriks Wife Hala Matli Meet His Children Too

May 31, 2025

Who Is Bernard Keriks Wife Hala Matli Meet His Children Too

May 31, 2025 -

Gaya Berpakaian Miley Cyrus Sebuah Cerita Yang Terungkap

May 31, 2025

Gaya Berpakaian Miley Cyrus Sebuah Cerita Yang Terungkap

May 31, 2025 -

Analisis Busana Miley Cyrus Refleksi Diri Dan Ekspresi Kreatif

May 31, 2025

Analisis Busana Miley Cyrus Refleksi Diri Dan Ekspresi Kreatif

May 31, 2025 -

Ex Nypd Commissioner Kerik Hospitalized Full Recovery Expected

May 31, 2025

Ex Nypd Commissioner Kerik Hospitalized Full Recovery Expected

May 31, 2025 -

Embrace Minimalism A 30 Day Plan For A Simpler Life

May 31, 2025

Embrace Minimalism A 30 Day Plan For A Simpler Life

May 31, 2025