Do Algorithms Contribute To Mass Shooter Radicalization? Holding Tech Companies Accountable.

The Echo Chamber Effect and Algorithmic Reinforcement

Social media platforms and online search engines use sophisticated algorithms to personalize user experiences. Although these systems can seem innocuous, they can also create dangerous "echo chambers" that reinforce extremist views and isolate individuals susceptible to radicalization. Because they are designed to maximize engagement metrics above all else, they can amplify harmful content.

- Prioritizing engagement over safety: Ranking algorithms often favor content that provokes strong emotional responses, even when that content is hateful, violent, or conspiratorial. This rewards the people who create such content and spreads it further.

- Filter bubbles and limited exposure to diverse perspectives: Personalized feeds create "filter bubbles" that limit exposure to opposing viewpoints and reinforce pre-existing beliefs. Over time, this can entrench extremist ideologies and discourage critical self-reflection.

- Personalized recommendations as a path to radicalization: Recommendation algorithms can gradually nudge users toward increasingly extreme content, creating a "radicalization pipeline" that is hard to detect and counter. Because each step seems small and the pipeline is often invisible to the user, intervention is difficult. The algorithm does not need to intend this outcome; a bias toward engagement is enough to steer individuals down this path.
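To make the engagement-versus-safety trade-off described above more concrete, here is a minimal Python sketch. It is a toy illustration, not how any real platform ranks content: the `Post` fields, the `engagement` and `harm` scores, the `harm_weight` parameter, and the sample posts are all hypothetical values chosen for this example. The first function ranks purely by predicted engagement; the second subtracts a penalty proportional to a harm score, which is one simple way of placing safety above raw engagement.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    engagement: float  # hypothetical predicted engagement score, 0..1
    harm: float        # hypothetical harm score from a classifier, 0..1


def rank_by_engagement(posts):
    """Order posts purely by predicted engagement, highest first."""
    return sorted(posts, key=lambda p: p.engagement, reverse=True)


def rank_with_safety(posts, harm_weight=1.0):
    """Order posts by engagement minus a penalty proportional to harm.

    harm_weight is a hypothetical tuning knob: 0 ignores harm entirely,
    larger values push harmful posts further down the feed.
    """
    return sorted(
        posts,
        key=lambda p: p.engagement - harm_weight * p.harm,
        reverse=True,
    )


# Hypothetical sample feed; the scores are invented for illustration only.
feed = [
    Post("Local news update", engagement=0.40, harm=0.0),
    Post("Outrage-bait conspiracy video", engagement=0.90, harm=0.8),
    Post("Balanced explainer", engagement=0.55, harm=0.0),
]

print([p.title for p in rank_by_engagement(feed)])
print([p.title for p in rank_with_safety(feed)])
```

With this sample feed, engagement-only ranking puts the outrage-bait post first, while the safety-weighted ranking drops it to last. Setting `harm_weight` to 0 reproduces the engagement-only order, so the difference comes entirely from how much weight the ranking gives to harm.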

The Spread of Misinformation and Conspiracy Theories

Algorithms play a central role in the rapid spread of misinformation and conspiracy theories, which are often key components of extremist ideologies. False narratives can spread online far faster and more widely than through traditional media, which makes their influence very difficult to counteract.

- Amplification of false narratives and hateful content: When algorithms amplify false narratives and hateful content, fringe ideas can quickly reach a mainstream audience, creating fertile ground for radicalization.

- The difficulty of moderating the vast volume of online content: The sheer volume of content online makes effective moderation an immense challenge. Automated systems struggle to distinguish legitimate debate from harmful content and often fail to identify and remove extremist material quickly enough.

- The impact of deepfakes and manipulated media on radicalization: The proliferation of deepfakes and other forms of manipulated media further complicates the issue, making it increasingly difficult to discern truth from falsehood, and potentially fueling extremist narratives.

The Role of Online Communities and Forums

Algorithms also facilitate the formation and growth of online communities and forums where extremist ideologies are shared and nurtured. These online spaces can provide a sense of belonging and validation for individuals who feel alienated or marginalized, further contributing to their radicalization.

- Online spaces used to plan and coordinate violent acts: Encrypted communication platforms and online forums give extremists places to connect, plan, and coordinate violent acts while avoiding easy detection.

- The challenges of identifying and disrupting these online networks: Identifying and disrupting these online networks is extremely difficult due to the use of encryption, anonymity features, and the constantly evolving tactics of extremist groups.

- The importance of proactive content moderation strategies: Proactive content moderation strategies that go beyond simply reacting to reported content are crucial to preventing the formation and growth of these dangerous online communities. This requires investment in technology and human resources.

Holding Tech Companies Accountable: Legal and Ethical Responsibilities

The power of algorithms in shaping online discourse demands that tech companies shoulder a significant responsibility in preventing the use of their platforms for radicalization. This requires a multi-pronged approach encompassing legal, ethical, and technological considerations.

- Legal frameworks and regulation: Governments need clear legal frameworks that hold tech companies accountable both for the content hosted on their platforms and for the algorithms that shape user experiences.

- The role of transparency and accountability in algorithm design: Greater transparency in algorithm design and implementation is crucial, allowing for independent audits and assessment of potential biases. Accountability mechanisms need to be established to ensure that algorithms are designed and used responsibly.

- The need for improved content moderation policies and practices: Tech companies need to invest significantly in improving their content moderation policies and practices, employing more human moderators and developing more sophisticated AI tools to detect and remove harmful content.

- Ethical considerations for algorithm design and implementation: Ethical considerations must guide the design and implementation of algorithms, placing a higher priority on safety and well-being than on maximizing engagement.

Conclusion

The issues outlined above suggest a disturbing link between algorithms, online radicalization, and mass shootings. The echo chambers created by recommendation systems, the rapid spread of misinformation, and the growth of extremist online communities all contribute to a dangerous environment. Tech companies, as the architects of these systems, have a moral and potentially legal obligation to address the problem.

Preventing algorithmic radicalization requires a collective effort, beginning with a demand for greater accountability. Contact your representatives, support organizations combating online extremism, and take part in informed discussions about responsible algorithm design. Holding tech companies accountable for algorithmic bias is not just a technical challenge; it is a societal imperative that demands immediate and sustained action.

Featured Posts

-

First Look 2025 Kawasaki Ninja 650 Krt Edition Motorcycle

May 30, 2025

First Look 2025 Kawasaki Ninja 650 Krt Edition Motorcycle

May 30, 2025 -

Los Angeles Wildfires A Reflection Of Our Times Through Disaster Betting Markets

May 30, 2025

Los Angeles Wildfires A Reflection Of Our Times Through Disaster Betting Markets

May 30, 2025 -

Dana White Faces Pressure Ufc Vet Demands 29 Million For Jon Jones Fight

May 30, 2025

Dana White Faces Pressure Ufc Vet Demands 29 Million For Jon Jones Fight

May 30, 2025 -

Navigating Economic Uncertainty Inflation And Unemployment Challenges

May 30, 2025

Navigating Economic Uncertainty Inflation And Unemployment Challenges

May 30, 2025 -

Gorillazs 25th Anniversary House Of Kong Exhibition And London Shows Announced

May 30, 2025

Gorillazs 25th Anniversary House Of Kong Exhibition And London Shows Announced

May 30, 2025

Latest Posts

-

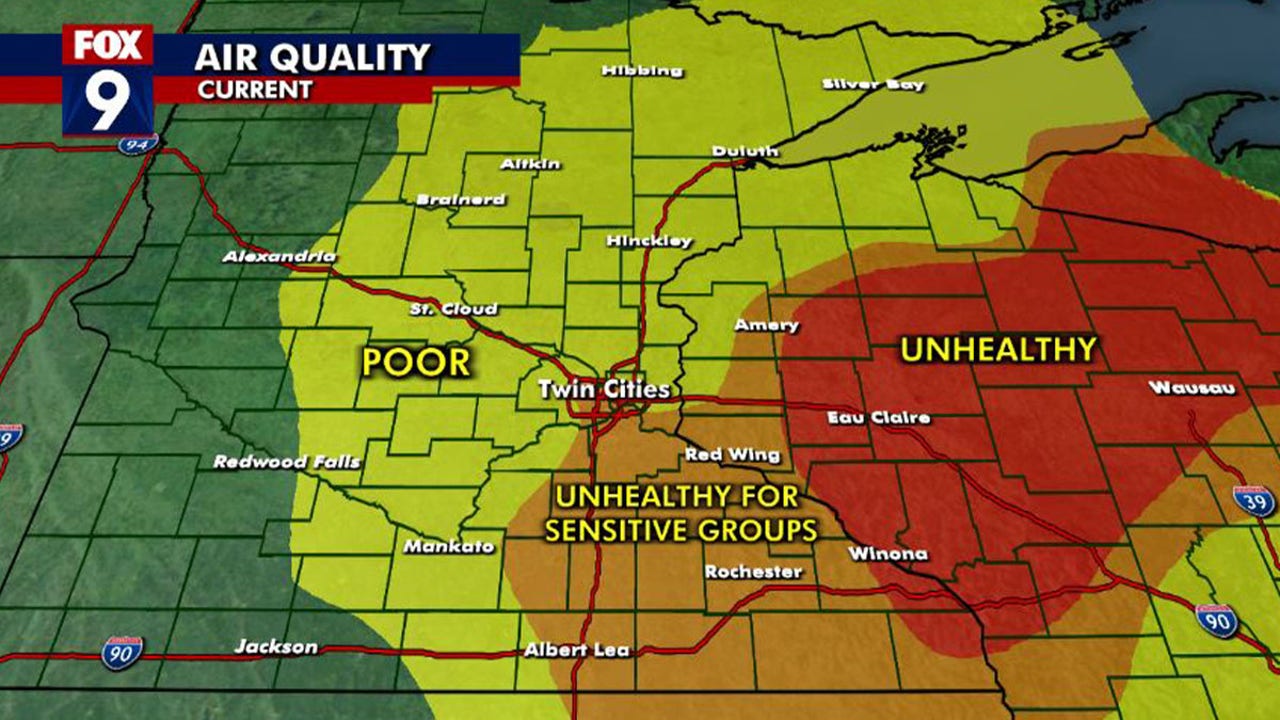

Health Impacts Of Canadian Wildfire Smoke On Minnesota

May 31, 2025

Health Impacts Of Canadian Wildfire Smoke On Minnesota

May 31, 2025 -

Dangerous Air Quality In Minnesota Due To Canadian Wildfires

May 31, 2025

Dangerous Air Quality In Minnesota Due To Canadian Wildfires

May 31, 2025 -

Canadian Wildfires Cause Dangerous Air In Minnesota

May 31, 2025

Canadian Wildfires Cause Dangerous Air In Minnesota

May 31, 2025 -

Eastern Manitoba Wildfires Rage Crews Struggle For Control

May 31, 2025

Eastern Manitoba Wildfires Rage Crews Struggle For Control

May 31, 2025 -

Homes Lost Lives Disrupted The Newfoundland Wildfire Crisis

May 31, 2025

Homes Lost Lives Disrupted The Newfoundland Wildfire Crisis

May 31, 2025