FTC Probes OpenAI's ChatGPT: Privacy And Data Concerns


ChatGPT's Data Collection Practices and Transparency

ChatGPT, a powerful large language model (LLM), was trained on massive datasets of text and code. Operating the service also involves collecting user data, including prompts, responses, and interaction patterns, some of which may be used to further train the model. However, the level of transparency OpenAI provides about these data practices is a significant concern. Its privacy policy, while it exists, is often criticized as dense and difficult for the average user to understand. This lack of clarity raises serious questions about informed consent.

- Lack of explicit consent for data usage in certain scenarios: Users might unknowingly agree to data collection practices they would not accept if they fully understood them.

- Potential for data scraping and unauthorized data collection: Critics worry that ChatGPT could collect or retain more data than is necessary or disclosed, even inadvertently.

- Ambiguity regarding data retention policies: The length of time OpenAI retains user data is not always explicitly stated, leading to uncertainty about long-term privacy implications.

- Issues with data anonymization and de-identification: Even anonymized data carries a risk of re-identification, which could expose users to privacy harms. The effectiveness of OpenAI's anonymization techniques is therefore subject to scrutiny.

Bias and Discrimination in ChatGPT's Outputs

A major concern surrounding ChatGPT and similar LLMs is the potential for bias and discrimination in their outputs. These models are trained on vast datasets, which often reflect existing societal biases. As a result, ChatGPT can inadvertently generate responses that perpetuate harmful stereotypes or discriminate against certain groups.

- Examples of biased responses generated by ChatGPT: Reports of ChatGPT producing sexist, racist, or otherwise discriminatory responses have fueled concerns about its ethical implications.

- The difficulty in mitigating bias in large language models: Removing bias from training data is a complex and ongoing challenge for AI developers.

- The potential for perpetuating societal inequalities: Biased AI outputs can reinforce existing inequalities and exacerbate societal problems.

Security Risks Associated with ChatGPT

The sheer volume of data handled by ChatGPT makes it a potentially attractive target for malicious actors. Security breaches could expose sensitive user information, leading to severe consequences.

- Potential for data leaks due to insufficient security measures: Weak or incomplete security protocols could allow user data to leak or be accessed without authorization.

- Risks of unauthorized access to user data: A breach could result in identity theft, financial fraud, or other forms of harm to users.

- The need for robust security protocols to protect user privacy: OpenAI needs to invest heavily in security infrastructure and regularly audit its systems to minimize risks.

The FTC's Investigation and Potential Outcomes

The FTC's investigation into OpenAI's ChatGPT is wide-ranging, examining the company's data collection practices, its efforts to mitigate bias, and the overall security of its systems. The potential outcomes of this investigation are far-reaching.

- Potential fines and legal repercussions: If OpenAI is found to have violated privacy laws, it could face significant financial penalties and legal challenges.

- Pressure on OpenAI to improve its data handling practices: The investigation could push OpenAI to strengthen its data security measures and increase transparency.

- Increased scrutiny of other AI companies and their data practices: The FTC's actions could set a precedent for the regulation of AI and lead to closer scrutiny of other AI companies.

Navigating the Future of AI and Data Privacy with ChatGPT

The FTC's investigation into OpenAI's ChatGPT underscores the critical need for responsible AI development and robust data protection measures. The concerns raised, including data collection practices, bias, and security risks, highlight the complex challenges inherent in creating and deploying powerful AI systems. We need stronger regulations, greater transparency, and a renewed focus on ethical considerations in the development and deployment of AI technologies. Learn more about protecting your data when using AI tools like ChatGPT, and demand greater transparency and accountability from the developers of ChatGPT and similar technologies. The future of AI depends on it.

Featured Posts

-

Open Ai Faces Ftc Probe Implications For Ai Development

May 24, 2025

Open Ai Faces Ftc Probe Implications For Ai Development

May 24, 2025 -

Amsterdam Exchange Plunges 7 On Trade War Worries

May 24, 2025

Amsterdam Exchange Plunges 7 On Trade War Worries

May 24, 2025 -

Dylan Dreyers New Post Featuring Husband Brian Fichera Stirs Fan Reaction

May 24, 2025

Dylan Dreyers New Post Featuring Husband Brian Fichera Stirs Fan Reaction

May 24, 2025 -

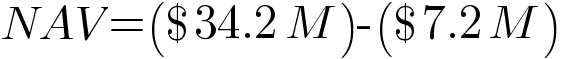

Analyzing The Net Asset Value Nav Of The Amundi Djia Ucits Etf

May 24, 2025

Analyzing The Net Asset Value Nav Of The Amundi Djia Ucits Etf

May 24, 2025 -

Anchor Brewing Companys Closure A Legacy Concludes After 127 Years

May 24, 2025

Anchor Brewing Companys Closure A Legacy Concludes After 127 Years

May 24, 2025

Latest Posts

-

Co Hosts Of Today Show Address Long Term Anchor Absence

May 24, 2025

Co Hosts Of Today Show Address Long Term Anchor Absence

May 24, 2025 -

Concerned Co Hosts Address Popular Today Show Anchors Absence

May 24, 2025

Concerned Co Hosts Address Popular Today Show Anchors Absence

May 24, 2025 -

Today Show Anchors Absence A Message From Her Co Hosts

May 24, 2025

Today Show Anchors Absence A Message From Her Co Hosts

May 24, 2025 -

Explanation For Anchors Absence From Today Show Co Hosts Speak Out

May 24, 2025

Explanation For Anchors Absence From Today Show Co Hosts Speak Out

May 24, 2025 -

Today Show Co Hosts Reveal Concerns During Anchors Absence

May 24, 2025

Today Show Co Hosts Reveal Concerns During Anchors Absence

May 24, 2025