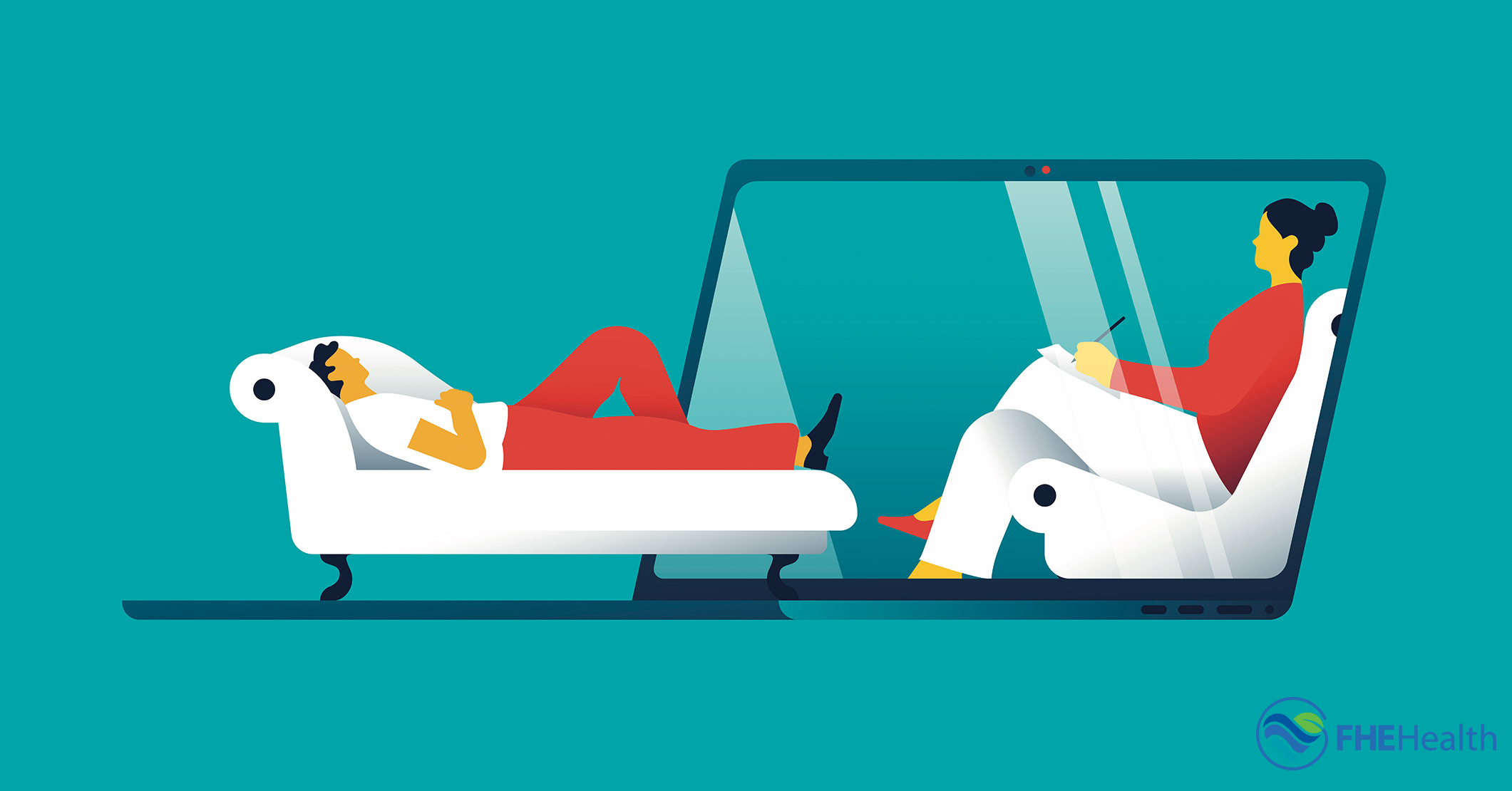

The Ethics of AI Therapy: Surveillance Risks in Authoritarian Regimes

Data Privacy Violations in AI Therapy

AI therapy platforms collect large amounts of sensitive personal data, including mental health histories, emotional states, details of personal relationships, and other intimate aspects of users' lives. Information this sensitive is an attractive target for breaches and misuse, which raises serious ethical questions about data privacy.

Data Collection and Storage

AI therapy applications collect this data at scale, which raises several critical concerns:

- Lack of robust encryption and data security protocols: Many AI therapy applications do not protect sensitive user data well enough against unauthorized access, which leaves them exposed to cyberattacks and data breaches.

- Potential for unauthorized access by government entities or hackers: Weak security measures leave the door open for both state actors and malicious hackers to gain access to this highly personal information. This data could be used for blackmail, extortion, or other nefarious purposes.

- Unclear data ownership and consent issues: The terms of service and data usage policies of many AI therapy platforms are unclear, leaving users uncertain about who owns their data and how it will be used. As a result, informed consent is often lacking.

Government Surveillance and Data Exploitation

Authoritarian governments pose a particularly dangerous threat. They can leverage AI therapy data for surveillance purposes, identifying and targeting dissidents, political opponents, or marginalized groups based on their emotional vulnerabilities or expressed thoughts. This represents a severe violation of privacy and freedom of expression.

- Forced use of AI therapy apps as a tool for monitoring citizens' mental health: Governments might mandate the use of AI therapy apps, effectively turning them into tools for mass surveillance of citizens' mental states.

- Profiling individuals based on their therapeutic conversations and identifying potential threats to the regime: AI algorithms could be used to analyze conversation transcripts, identifying individuals who express dissenting opinions or pose a perceived threat to the government.

- Using AI-generated insights to predict and suppress dissent before it manifests: Governments might use AI-generated insights to preemptively identify and suppress dissent before it can organize or gain traction.

Lack of Transparency and Accountability in AI Therapy Algorithms

The "black box" nature of many AI algorithms used in therapy raises significant ethical concerns. Because these algorithms are opaque, it is hard to understand how they reach their conclusions, and therefore hard to assess them for bias, errors, and the potential for manipulation.

The "Black Box" Problem

The lack of transparency in AI therapy algorithms poses several challenges:

- Potential for algorithmic bias to disproportionately affect certain demographic groups: AI algorithms can reflect and amplify existing societal biases, potentially leading to unfair or discriminatory outcomes for certain demographic groups.

- Difficulty in holding developers and platforms accountable for flawed or biased algorithms: When an algorithm's reasoning cannot be inspected, biases are hard to identify and correct, and it is harder still to hold developers and platforms accountable for them.

- Lack of independent oversight and regulation of AI therapy algorithms: The absence of robust regulatory frameworks and independent oversight mechanisms further exacerbates the lack of accountability and transparency.
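
Some of these concerns can be probed even without access to a model's internals. One common outcome audit compares how often a system flags users in each demographic group (the demographic parity difference). The sketch below is a minimal illustration under stated assumptions: the record fields `group` and `flagged`, the function names, and the sample data are all hypothetical.

```python
from collections import defaultdict


def flag_rates(records):
    """Return the fraction of flagged records per group.

    `records` is an iterable of dicts with the hypothetical keys
    'group' (str) and 'flagged' (bool).
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for record in records:
        totals[record["group"]] += 1
        if record["flagged"]:
            flagged[record["group"]] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Demographic parity difference: highest group rate minus lowest."""
    return max(rates.values()) - min(rates.values())


# Made-up audit sample, for illustration only.
sample = [
    {"group": "A", "flagged": True},
    {"group": "A", "flagged": False},
    {"group": "A", "flagged": False},
    {"group": "A", "flagged": False},
    {"group": "B", "flagged": True},
    {"group": "B", "flagged": True},
    {"group": "B", "flagged": False},
    {"group": "B", "flagged": False},
]

rates = flag_rates(sample)
print(rates)              # per-group flag rates
print(parity_gap(rates))  # gap between the most- and least-flagged group
```

A large gap is a prompt for investigation, not proof of bias: groups can differ for legitimate reasons, and a small gap does not rule out other kinds of unfairness.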

The Potential for Manipulation

Authoritarian regimes can manipulate AI therapy algorithms to achieve their political goals:

- Customization of therapy recommendations to align with government ideology: Algorithms could be manipulated to provide therapy recommendations that subtly reinforce government narratives and ideologies.

- Use of AI to identify and target individuals susceptible to manipulation: AI could be used to identify individuals who are more vulnerable to manipulation based on their emotional state or therapeutic conversations.

- Spread of misinformation and disinformation through seemingly therapeutic interactions: AI-powered chatbots could be used to subtly disseminate propaganda and misinformation under the guise of therapeutic support.

Mitigation Strategies and Ethical Guidelines for AI Therapy

Addressing the ethical challenges of AI therapy requires a multi-pronged approach involving strong data protection laws, increased transparency in algorithms, and international cooperation.

Strong Data Protection Laws and Regulations

Robust legal frameworks are essential to prevent the misuse of data collected through AI therapy platforms:

- Enacting legislation that guarantees user consent and data ownership: Clear legislation is needed to define data ownership, ensure informed consent, and protect user privacy.

- Establishing independent oversight bodies to monitor compliance and enforce regulations: Independent regulatory bodies are crucial to ensure compliance with data protection laws and hold organizations accountable for violations.

- Implementing stringent data security protocols and encryption standards: Stringent security measures are paramount to protect sensitive user data from unauthorized access and breaches.
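
Encryption protects data in transit and at rest. A complementary measure is to avoid storing direct identifiers next to transcripts in the first place. The following sketch shows keyed pseudonymization with HMAC-SHA256 from Python's standard library. It is not encryption and not a complete security design; the function names, the record layout, and the key handling are assumptions made for illustration.

```python
import hashlib
import hmac
import secrets


def pseudonymize(user_id: str, key: bytes) -> str:
    """Map a user ID to a stable pseudonym using HMAC-SHA256.

    Without the key, the pseudonym cannot be recomputed from a
    guessed user ID, unlike a plain unsalted hash.
    """
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def to_stored_record(user_id: str, transcript: str, key: bytes) -> dict:
    """Build the record that would be written to storage (hypothetical layout)."""
    return {"subject": pseudonymize(user_id, key), "transcript": transcript}


# Assumption: in practice the key is kept outside the transcript store,
# for example in a separate key-management system.
key = secrets.token_bytes(32)
record = to_stored_record("alice@example.com", "Session notes ...", key)

print(record["subject"])  # 64 hex characters, no direct identifier
```

Pseudonymization only limits what a leaked or seized database reveals on its face. Transcripts can still contain names and other identifying details, and whoever holds the key can re-link pseudonyms to users, so it reduces exposure without removing the need for encryption and access controls.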

Promoting Transparency and Explainability in AI Algorithms

Transparency and explainability are crucial to building trust and accountability in AI therapy:

- Employing techniques like explainable AI (XAI) to make algorithmic decision-making more understandable: XAI techniques can shed light on the decision-making processes of AI algorithms, increasing transparency and accountability.

- Open-sourcing algorithms and code to enhance scrutiny and accountability: Publicly available code allows independent audits and closer scrutiny than closed systems permit.

- Collaborative efforts among researchers, developers, and policymakers to improve algorithmic fairness and transparency: Collaboration among stakeholders is crucial to address the ethical challenges of AI therapy and develop solutions for greater fairness and transparency.
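
To make "explainable" less abstract: in a simple linear scoring model, each feature's contribution to the output is its weight multiplied by its value, so the score can be decomposed exactly. The sketch below uses made-up feature names, weights, and inputs (all hypothetical). Real therapy models are typically far more complex and need approximate attribution methods, which this example does not implement.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return (score, contributions) for a linear model.

    contributions[name] = weights[name] * features[name], so the
    contributions plus the bias sum exactly to the score.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    return score, contributions


# Hypothetical weights and inputs, chosen only for illustration.
weights = {"sleep_hours": -0.5, "negative_word_count": 0.25, "sessions_missed": 1.0}
features = {"sleep_hours": 6.0, "negative_word_count": 8.0, "sessions_missed": 1.0}

score, contributions = explain_linear_score(weights, features, bias=1.0)

# List features from most to least influential by absolute contribution.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name}: {value:+.2f}")
print(f"score: {score:.2f}")
```

An explanation of this kind lets a user or auditor see which inputs drove a given output, which is the property that opaque models lack.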

Conclusion

The ethical implications of AI therapy, particularly the surveillance risks it creates in authoritarian regimes, demand urgent attention. The potential for data privacy violations, algorithmic manipulation, and government overreach calls for robust ethical guidelines, strong data protection laws, and greater transparency in AI algorithms. We must prioritize the protection of vulnerable individuals and ensure that this powerful technology is used responsibly. Further research and discussion are critical to preventing misuse and safeguarding individual rights. We need a global conversation about the ethical framework required to keep AI therapy a tool for good, one that promotes mental well-being instead of enabling oppression. Let's work together to ensure the responsible development and deployment of AI therapy technologies.

Featured Posts

-

Creatine Supplements Everything You Need To Know

May 15, 2025

Creatine Supplements Everything You Need To Know

May 15, 2025 -

Ai Therapy Surveillance In A Police State

May 15, 2025

Ai Therapy Surveillance In A Police State

May 15, 2025 -

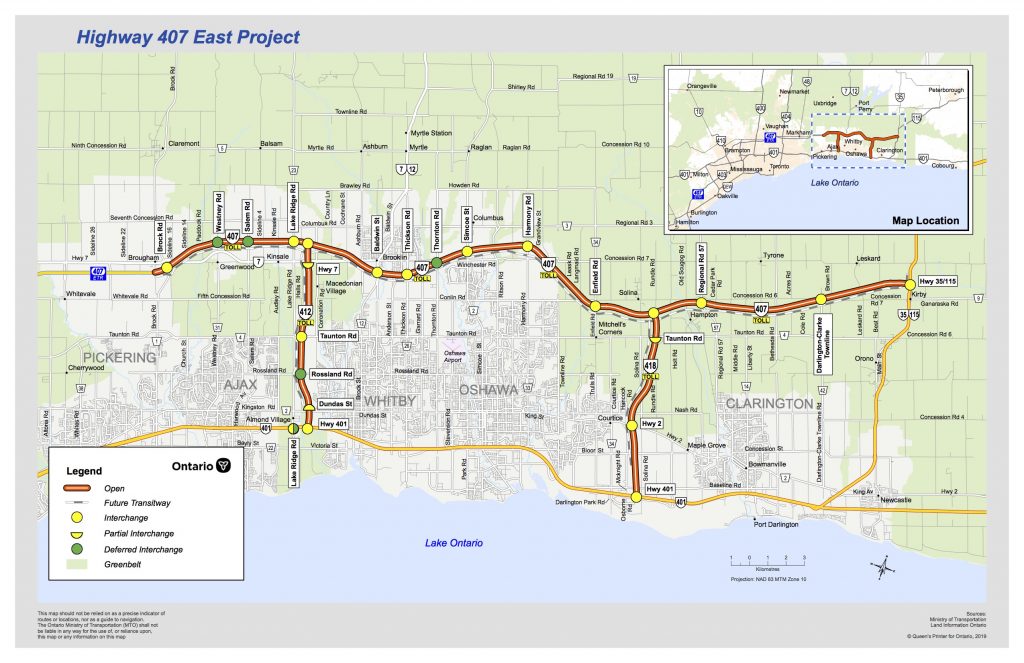

Highway 407 East Tolls And Ontario Gas Tax Potential For Permanent Changes

May 15, 2025

Highway 407 East Tolls And Ontario Gas Tax Potential For Permanent Changes

May 15, 2025 -

Shohei Ohtani Home Run Celebration Highlights Teamwork And Camaraderie

May 15, 2025

Shohei Ohtani Home Run Celebration Highlights Teamwork And Camaraderie

May 15, 2025 -

Padres Pregame Arraez And Heyward Lead Lineup In Sweep Bid

May 15, 2025

Padres Pregame Arraez And Heyward Lead Lineup In Sweep Bid

May 15, 2025

Latest Posts

-

Endgueltige Einigung Im Bvg Tarifstreit Keine Streiks Mehr

May 15, 2025

Endgueltige Einigung Im Bvg Tarifstreit Keine Streiks Mehr

May 15, 2025 -

Berlin Kind Von Betrunkenen Mit Antisemitischen Parolen Angegriffen

May 15, 2025

Berlin Kind Von Betrunkenen Mit Antisemitischen Parolen Angegriffen

May 15, 2025 -

S Bahn And Bvg Update Strike Resolved Service Recovery Underway

May 15, 2025

S Bahn And Bvg Update Strike Resolved Service Recovery Underway

May 15, 2025 -

Berlin Public Transport Bvg Strike Concludes S Bahn Delays Continue

May 15, 2025

Berlin Public Transport Bvg Strike Concludes S Bahn Delays Continue

May 15, 2025 -

Antisemitische Beleidigung Und Hitlerruf Kind In Berlin Von Unbekannten Angegriffen

May 15, 2025

Antisemitische Beleidigung Und Hitlerruf Kind In Berlin Von Unbekannten Angegriffen

May 15, 2025